
blockchain: add v2 reactor (#4361)

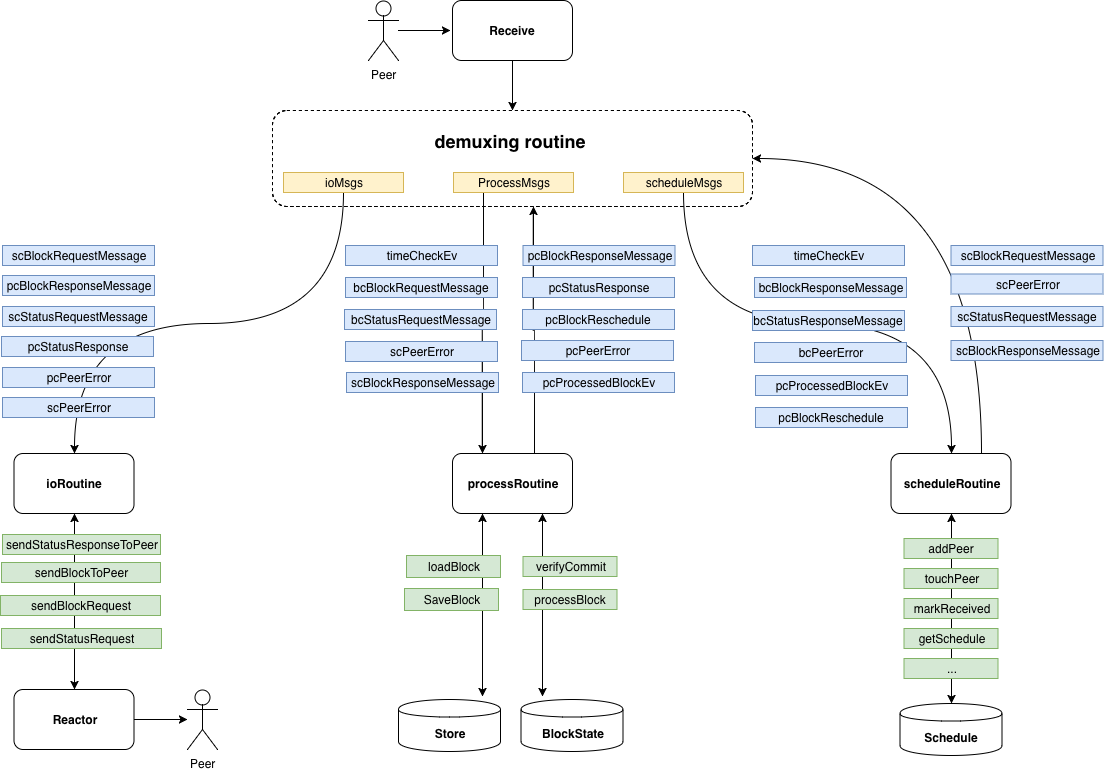

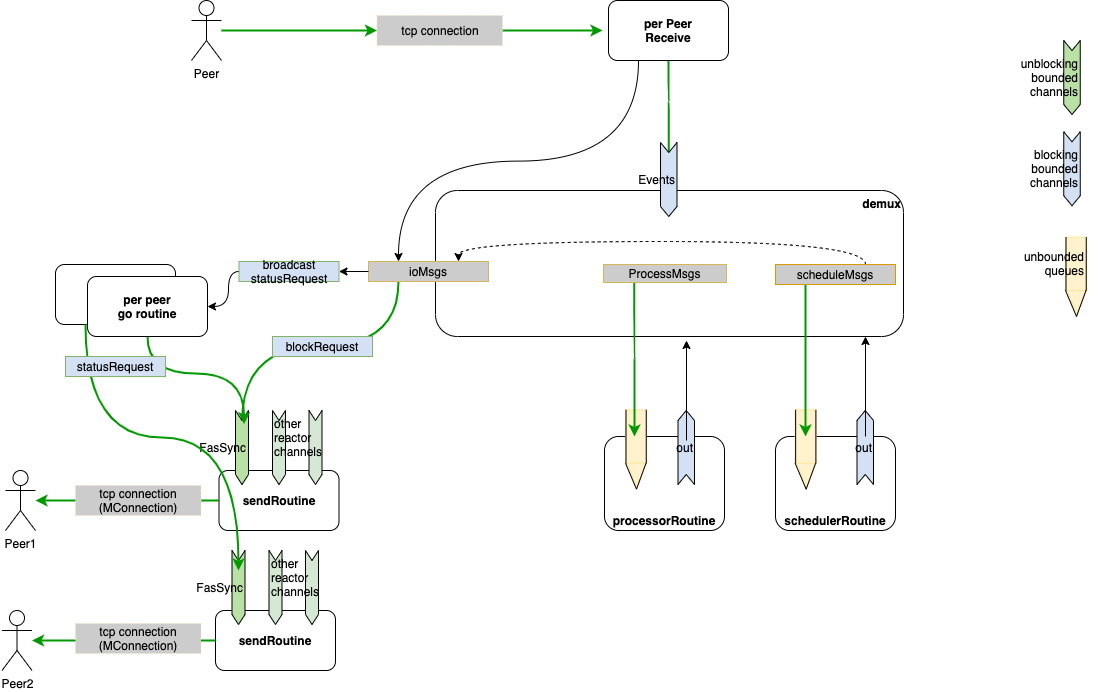

The work includes the reactor which ties together all the separate routines involved in the design of the blockchain v2 refactor. This PR replaces #4067, which got far too large and messy after a failed attempt to rebase.

## Commits:

* Blockchain v2 reactor:
  + A cleaner copy of the work done in #4067, which fell too far behind and was a nightmare to rebase.
  + The work includes the reactor which ties together all the separate routines involved in the design of the blockchain v2 refactor.
* fixes after merge
* reorder iIO interface methodset
* change iO -> IO
* panic before send nil block
* rename switchToConsensus -> trySwitchToConsensus
* rename tdState -> tmState
* Update blockchain/v2/reactor.go

  Co-Authored-By: Bot from GolangCI <42910462+golangcibot@users.noreply.github.com>
* remove peer when it sends a block unsolicited
* check for not ready in markReceived
* fix error
* fix the pcFinished event
* typo fix
* add documentation for processor fields
* simplify time.Since
* try and make the linter happy
* some doc updates
* fix channel diagram
* Update adr-043-blockchain-riri-org.md
* panic on nil switch
* liting fixes
* account for nil block in bBlockResponseMessage
* panic on duplicate block enqueued by processor
* linting
* goimport reactor_test.go

Co-authored-by: Bot from GolangCI <42910462+golangcibot@users.noreply.github.com>

Co-authored-by: Anca Zamfir <ancazamfir@users.noreply.github.com>

Co-authored-by: Marko <marbar3778@yahoo.com>

Co-authored-by: Anton Kaliaev <anton.kalyaev@gmail.com>

pull/4435/head

committed by

GitHub


17 changed files with 1536 additions and 704 deletions


+1 -1 behaviour/reporter.go

+2 -2 blockchain/v1/reactor.go

+13 -0 blockchain/v2/codec.go

+111 -0 blockchain/v2/io.go

+1 -0 blockchain/v2/metrics.go

+55 -69 blockchain/v2/processor.go

+33 -18 blockchain/v2/processor_context.go

+59 -110 blockchain/v2/processor_test.go

+462 -51 blockchain/v2/reactor.go

+504 -9 blockchain/v2/reactor_test.go

+3 -2 blockchain/v2/routine.go

+90 -113 blockchain/v2/scheduler.go

+191 -328 blockchain/v2/scheduler_test.go

+1 -0 blockchain/v2/types.go

+10 -1 docs/architecture/adr-043-blockchain-riri-org.md

BIN docs/architecture/img/blockchain-reactor-v2.png

BIN docs/architecture/img/blockchain-v2-channels.png

behaviour/reporter.go (+1 -1)

blockchain/v1/reactor.go (+2 -2)

blockchain/v2/codec.go (+13 -0)

| @ -0,0 +1,13 @@ | |||

| package v2 | |||

| import ( | |||

| amino "github.com/tendermint/go-amino" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| var cdc = amino.NewCodec() | |||

| func init() { | |||

| RegisterBlockchainMessages(cdc) | |||

| types.RegisterBlockAmino(cdc) | |||

| } | |||

blockchain/v2/io.go (+111 -0)

| @ -0,0 +1,111 @@ | |||

| package v2 | |||

| import ( | |||

| "fmt" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/state" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| type iIO interface { | |||

| sendBlockRequest(peerID p2p.ID, height int64) error | |||

| sendBlockToPeer(block *types.Block, peerID p2p.ID) error | |||

| sendBlockNotFound(height int64, peerID p2p.ID) error | |||

| sendStatusResponse(height int64, peerID p2p.ID) error | |||

| broadcastStatusRequest(height int64) | |||

| trySwitchToConsensus(state state.State, blocksSynced int) | |||

| } | |||

| type switchIO struct { | |||

| sw *p2p.Switch | |||

| } | |||

| func newSwitchIo(sw *p2p.Switch) *switchIO { | |||

| return &switchIO{ | |||

| sw: sw, | |||

| } | |||

| } | |||

| const ( | |||

| // BlockchainChannel is a channel for blocks and status updates (`BlockStore` height) | |||

| BlockchainChannel = byte(0x40) | |||

| ) | |||

| type consensusReactor interface { | |||

| // for when we switch from blockchain reactor and fast sync to | |||

| // the consensus machine | |||

| SwitchToConsensus(state.State, int) | |||

| } | |||

| func (sio *switchIO) sendBlockRequest(peerID p2p.ID, height int64) error { | |||

| peer := sio.sw.Peers().Get(peerID) | |||

| if peer == nil { | |||

| return fmt.Errorf("peer not found") | |||

| } | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcBlockRequestMessage{Height: height}) | |||

| queued := peer.TrySend(BlockchainChannel, msgBytes) | |||

| if !queued { | |||

| return fmt.Errorf("send queue full") | |||

| } | |||

| return nil | |||

| } | |||

| func (sio *switchIO) sendStatusResponse(height int64, peerID p2p.ID) error { | |||

| peer := sio.sw.Peers().Get(peerID) | |||

| if peer == nil { | |||

| return fmt.Errorf("peer not found") | |||

| } | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcStatusResponseMessage{Height: height}) | |||

| if queued := peer.TrySend(BlockchainChannel, msgBytes); !queued { | |||

| return fmt.Errorf("peer queue full") | |||

| } | |||

| return nil | |||

| } | |||

| func (sio *switchIO) sendBlockToPeer(block *types.Block, peerID p2p.ID) error { | |||

| peer := sio.sw.Peers().Get(peerID) | |||

| if peer == nil { | |||

| return fmt.Errorf("peer not found") | |||

| } | |||

| if block == nil { | |||

| panic("trying to send nil block") | |||

| } | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcBlockResponseMessage{Block: block}) | |||

| if queued := peer.TrySend(BlockchainChannel, msgBytes); !queued { | |||

| return fmt.Errorf("peer queue full") | |||

| } | |||

| return nil | |||

| } | |||

| func (sio *switchIO) sendBlockNotFound(height int64, peerID p2p.ID) error { | |||

| peer := sio.sw.Peers().Get(peerID) | |||

| if peer == nil { | |||

| return fmt.Errorf("peer not found") | |||

| } | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcNoBlockResponseMessage{Height: height}) | |||

| if queued := peer.TrySend(BlockchainChannel, msgBytes); !queued { | |||

| return fmt.Errorf("peer queue full") | |||

| } | |||

| return nil | |||

| } | |||

| func (sio *switchIO) trySwitchToConsensus(state state.State, blocksSynced int) { | |||

| conR, ok := sio.sw.Reactor("CONSENSUS").(consensusReactor) | |||

| if ok { | |||

| conR.SwitchToConsensus(state, blocksSynced) | |||

| } | |||

| } | |||

| func (sio *switchIO) broadcastStatusRequest(height int64) { | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcStatusRequestMessage{height}) | |||

| // XXX: maybe we should use an io specific peer list here | |||

| sio.sw.Broadcast(BlockchainChannel, msgBytes) | |||

| } | |||
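Each `send*` helper in `io.go` relies on a non-blocking `TrySend` and turns a `false` result into an error instead of waiting for queue space. As a rough, standard-library-only illustration of that pattern (the `peerQueue` type and the `trySend` and `sendOrError` helpers are hypothetical stand-ins, not part of the `p2p` package), a bounded queue might behave like this:

```go
package main

import (
	"errors"
	"fmt"
)

// peerQueue is a hypothetical stand-in for a peer's bounded send queue.
type peerQueue struct {
	out chan []byte
}

func newPeerQueue(capacity int) *peerQueue {
	return &peerQueue{out: make(chan []byte, capacity)}
}

// trySend enqueues msg without blocking and reports whether it was queued.
func (q *peerQueue) trySend(msg []byte) bool {
	select {
	case q.out <- msg:
		return true
	default:
		return false
	}
}

var errQueueFull = errors.New("peer queue full")

// sendOrError has the same shape as the helpers in io.go:
// a full queue is reported to the caller as an error.
func sendOrError(q *peerQueue, msg []byte) error {
	if !q.trySend(msg) {
		return errQueueFull
	}
	return nil
}

func main() {
	q := newPeerQueue(1)
	fmt.Println(sendOrError(q, []byte("a"))) // the first message fits
	fmt.Println(sendOrError(q, []byte("b"))) // the queue is full, so an error is returned
}
```

Returning an error rather than blocking lets the caller decide whether to retry, drop the message, or penalize the peer.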

blockchain/v2/metrics.go (+1 -0)

blockchain/v2/processor.go (+55 -69)

blockchain/v2/processor_context.go (+33 -18)

blockchain/v2/processor_test.go (+59 -110)

blockchain/v2/reactor.go (+462 -51)

| @ -1,118 +1,529 @@ | |||

| package v2 | |||

| import ( | |||

| "errors" | |||

| "fmt" | |||

| "sync" | |||

| "time" | |||

| "github.com/tendermint/go-amino" | |||

| "github.com/tendermint/tendermint/behaviour" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/state" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| type timeCheck struct { | |||

| priorityHigh | |||

| time time.Time | |||

| //------------------------------------- | |||

| type bcBlockRequestMessage struct { | |||

| Height int64 | |||

| } | |||

| // ValidateBasic performs basic validation. | |||

| func (m *bcBlockRequestMessage) ValidateBasic() error { | |||

| if m.Height < 0 { | |||

| return errors.New("negative Height") | |||

| } | |||

| return nil | |||

| } | |||

| func (m *bcBlockRequestMessage) String() string { | |||

| return fmt.Sprintf("[bcBlockRequestMessage %v]", m.Height) | |||

| } | |||

| type bcNoBlockResponseMessage struct { | |||

| Height int64 | |||

| } | |||

| // ValidateBasic performs basic validation. | |||

| func (m *bcNoBlockResponseMessage) ValidateBasic() error { | |||

| if m.Height < 0 { | |||

| return errors.New("negative Height") | |||

| } | |||

| return nil | |||

| } | |||

| func (m *bcNoBlockResponseMessage) String() string { | |||

| return fmt.Sprintf("[bcNoBlockResponseMessage %d]", m.Height) | |||

| } | |||

| //------------------------------------- | |||

| type bcBlockResponseMessage struct { | |||

| Block *types.Block | |||

| } | |||

| // ValidateBasic performs basic validation. | |||

| func (m *bcBlockResponseMessage) ValidateBasic() error { | |||

| if m.Block == nil { | |||

| return errors.New("block response message has nil block") | |||

| } | |||

| return m.Block.ValidateBasic() | |||

| } | |||

| func (m *bcBlockResponseMessage) String() string { | |||

| return fmt.Sprintf("[bcBlockResponseMessage %v]", m.Block.Height) | |||

| } | |||

| func schedulerHandle(event Event) (Event, error) { | |||

| if _, ok := event.(timeCheck); ok { | |||

| fmt.Println("scheduler handle timeCheck") | |||

| //------------------------------------- | |||

| type bcStatusRequestMessage struct { | |||

| Height int64 | |||

| } | |||

| // ValidateBasic performs basic validation. | |||

| func (m *bcStatusRequestMessage) ValidateBasic() error { | |||

| if m.Height < 0 { | |||

| return errors.New("negative Height") | |||

| } | |||

| return noOp, nil | |||

| return nil | |||

| } | |||

| func (m *bcStatusRequestMessage) String() string { | |||

| return fmt.Sprintf("[bcStatusRequestMessage %v]", m.Height) | |||

| } | |||

| //------------------------------------- | |||

| type bcStatusResponseMessage struct { | |||

| Height int64 | |||

| } | |||

| func processorHandle(event Event) (Event, error) { | |||

| if _, ok := event.(timeCheck); ok { | |||

| fmt.Println("processor handle timeCheck") | |||

| // ValidateBasic performs basic validation. | |||

| func (m *bcStatusResponseMessage) ValidateBasic() error { | |||

| if m.Height < 0 { | |||

| return errors.New("negative Height") | |||

| } | |||

| return noOp, nil | |||

| return nil | |||

| } | |||

| func (m *bcStatusResponseMessage) String() string { | |||

| return fmt.Sprintf("[bcStatusResponseMessage %v]", m.Height) | |||

| } | |||

| type blockStore interface { | |||

| LoadBlock(height int64) *types.Block | |||

| SaveBlock(*types.Block, *types.PartSet, *types.Commit) | |||

| Height() int64 | |||

| } | |||

| type Reactor struct { | |||

| events chan Event | |||

| // BlockchainReactor handles fast sync protocol. | |||

| type BlockchainReactor struct { | |||

| p2p.BaseReactor | |||

| events chan Event // XXX: Rename eventsFromPeers | |||

| stopDemux chan struct{} | |||

| scheduler *Routine | |||

| processor *Routine | |||

| ticker *time.Ticker | |||

| logger log.Logger | |||

| mtx sync.RWMutex | |||

| maxPeerHeight int64 | |||

| syncHeight int64 | |||

| reporter behaviour.Reporter | |||

| io iIO | |||

| store blockStore | |||

| } | |||

| //nolint:unused,deadcode | |||

| type blockVerifier interface { | |||

| VerifyCommit(chainID string, blockID types.BlockID, height int64, commit *types.Commit) error | |||

| } | |||

| func NewReactor(bufferSize int) *Reactor { | |||

| return &Reactor{ | |||

| //nolint:deadcode | |||

| type blockApplier interface { | |||

| ApplyBlock(state state.State, blockID types.BlockID, block *types.Block) (state.State, error) | |||

| } | |||

| // XXX: unify naming in this package around tmState | |||

| // XXX: V1 stores a copy of state as initialState, which is never mutated. Is that necessary? | |||

| func newReactor(state state.State, store blockStore, reporter behaviour.Reporter, | |||

| blockApplier blockApplier, bufferSize int) *BlockchainReactor { | |||

| scheduler := newScheduler(state.LastBlockHeight, time.Now()) | |||

| pContext := newProcessorContext(store, blockApplier, state) | |||

| // TODO: Fix naming to just newProcessor | |||

| // newPcState requires a processorContext | |||

| processor := newPcState(pContext) | |||

| return &BlockchainReactor{ | |||

| events: make(chan Event, bufferSize), | |||

| stopDemux: make(chan struct{}), | |||

| scheduler: newRoutine("scheduler", schedulerHandle, bufferSize), | |||

| processor: newRoutine("processor", processorHandle, bufferSize), | |||

| ticker: time.NewTicker(1 * time.Second), | |||

| scheduler: newRoutine("scheduler", scheduler.handle, bufferSize), | |||

| processor: newRoutine("processor", processor.handle, bufferSize), | |||

| store: store, | |||

| reporter: reporter, | |||

| logger: log.NewNopLogger(), | |||

| } | |||

| } | |||

| // nolint:unused | |||

| func (r *Reactor) setLogger(logger log.Logger) { | |||

| // NewBlockchainReactor creates a new reactor instance. | |||

| func NewBlockchainReactor( | |||

| state state.State, | |||

| blockApplier blockApplier, | |||

| store blockStore, | |||

| fastSync bool) *BlockchainReactor { | |||

| reporter := behaviour.NewMockReporter() | |||

| return newReactor(state, store, reporter, blockApplier, 1000) | |||

| } | |||

| // SetSwitch implements Reactor interface. | |||

| func (r *BlockchainReactor) SetSwitch(sw *p2p.Switch) { | |||

| if sw == nil { | |||

| panic("set nil switch") | |||

| } | |||

| r.Switch = sw | |||

| r.io = newSwitchIo(sw) | |||

| } | |||

| func (r *BlockchainReactor) setMaxPeerHeight(height int64) { | |||

| r.mtx.Lock() | |||

| defer r.mtx.Unlock() | |||

| if height > r.maxPeerHeight { | |||

| r.maxPeerHeight = height | |||

| } | |||

| } | |||

| func (r *BlockchainReactor) setSyncHeight(height int64) { | |||

| r.mtx.Lock() | |||

| defer r.mtx.Unlock() | |||

| r.syncHeight = height | |||

| } | |||

| // SyncHeight returns the height to which the BlockchainReactor has synced. | |||

| func (r *BlockchainReactor) SyncHeight() int64 { | |||

| r.mtx.RLock() | |||

| defer r.mtx.RUnlock() | |||

| return r.syncHeight | |||

| } | |||
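The height accessors above guard two counters with a single `sync.RWMutex`: writers take the full lock, readers take the read lock, and the max peer height only ever moves upward. A minimal sketch of that bookkeeping in isolation, assuming a hypothetical `heightTracker` type that is not part of this PR:

```go
package main

import (
	"fmt"
	"sync"
)

// heightTracker is a hypothetical, trimmed-down version of the reactor's height bookkeeping.
type heightTracker struct {
	mtx           sync.RWMutex
	maxPeerHeight int64
	syncHeight    int64
}

// setMaxPeerHeight only raises the stored value, so stale reports are ignored.
func (h *heightTracker) setMaxPeerHeight(height int64) {
	h.mtx.Lock()
	defer h.mtx.Unlock()
	if height > h.maxPeerHeight {
		h.maxPeerHeight = height
	}
}

func (h *heightTracker) setSyncHeight(height int64) {
	h.mtx.Lock()
	defer h.mtx.Unlock()
	h.syncHeight = height
}

// heights reads both values under the read lock so they are observed consistently.
func (h *heightTracker) heights() (synced, maxPeer int64) {
	h.mtx.RLock()
	defer h.mtx.RUnlock()
	return h.syncHeight, h.maxPeerHeight
}

func main() {
	h := &heightTracker{}
	var wg sync.WaitGroup
	for _, reported := range []int64{13, 15, 12} {
		wg.Add(1)
		go func(height int64) {
			defer wg.Done()
			h.setMaxPeerHeight(height)
		}(reported)
	}
	wg.Wait()
	h.setSyncHeight(11)
	fmt.Println(h.heights())
}
```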

| // SetLogger sets the logger of the reactor. | |||

| func (r *BlockchainReactor) SetLogger(logger log.Logger) { | |||

| r.logger = logger | |||

| r.scheduler.setLogger(logger) | |||

| r.processor.setLogger(logger) | |||

| } | |||

| func (r *Reactor) Start() { | |||

| // Start implements cmn.Service interface | |||

| func (r *BlockchainReactor) Start() error { | |||

| r.reporter = behaviour.NewSwitchReporter(r.BaseReactor.Switch) | |||

| go r.scheduler.start() | |||

| go r.processor.start() | |||

| go r.demux() | |||

| return nil | |||

| } | |||

| <-r.scheduler.ready() | |||

| <-r.processor.ready() | |||

| // reactor generated ticker events: | |||

| // ticker for cleaning peers | |||

| type rTryPrunePeer struct { | |||

| priorityHigh | |||

| time time.Time | |||

| } | |||

| go func() { | |||

| for t := range r.ticker.C { | |||

| r.events <- timeCheck{time: t} | |||

| } | |||

| }() | |||

| func (e rTryPrunePeer) String() string { | |||

| return fmt.Sprintf(": %v", e.time) | |||

| } | |||

| // XXX: How to make this deterministic? | |||

| // XXX: Would it be possible here to provide some kind of type safety for the types | |||

| // of events that each routine can produce and consume? | |||

| func (r *Reactor) demux() { | |||

| // ticker event for scheduling block requests | |||

| type rTrySchedule struct { | |||

| priorityHigh | |||

| time time.Time | |||

| } | |||

| func (e rTrySchedule) String() string { | |||

| return fmt.Sprintf(": %v", e.time) | |||

| } | |||

| // ticker for block processing | |||

| type rProcessBlock struct { | |||

| priorityNormal | |||

| } | |||

| // reactor generated events based on blockchain related messages from peers: | |||

| // blockResponse message received from a peer | |||

| type bcBlockResponse struct { | |||

| priorityNormal | |||

| time time.Time | |||

| peerID p2p.ID | |||

| size int64 | |||

| block *types.Block | |||

| } | |||

| // blockNoResponse message received from a peer | |||

| type bcNoBlockResponse struct { | |||

| priorityNormal | |||

| time time.Time | |||

| peerID p2p.ID | |||

| height int64 | |||

| } | |||

| // statusResponse message received from a peer | |||

| type bcStatusResponse struct { | |||

| priorityNormal | |||

| time time.Time | |||

| peerID p2p.ID | |||

| height int64 | |||

| } | |||

| // new peer is connected | |||

| type bcAddNewPeer struct { | |||

| priorityNormal | |||

| peerID p2p.ID | |||

| } | |||

| // existing peer is removed | |||

| type bcRemovePeer struct { | |||

| priorityHigh | |||

| peerID p2p.ID | |||

| reason interface{} | |||

| } | |||
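The reactor's demux loop forwards events like the ones declared above to a routine chosen by their concrete type. The following sketch shows that type-switch routing on its own; the event types and the `route` function are simplified, hypothetical stand-ins rather than the definitions used in this package:

```go
package main

import "fmt"

// event is a hypothetical marker interface for this sketch.
type event interface{}

type statusResponse struct {
	peerID string
	height int64
}

type blockResponse struct {
	peerID string
	height int64
}

type processBlock struct{}

// route names the routine that should receive ev.
// Unknown event types are not forwarded anywhere.
func route(ev event) string {
	switch ev.(type) {
	case statusResponse, blockResponse:
		return "scheduler"
	case processBlock:
		return "processor"
	default:
		return "dropped"
	}
}

func main() {
	events := []event{
		statusResponse{peerID: "P1", height: 13},
		blockResponse{peerID: "P1", height: 11},
		processBlock{},
		"unexpected",
	}
	for _, ev := range events {
		fmt.Printf("%T -> %s\n", ev, route(ev))
	}
}
```

Listing the accepted types explicitly means a new event type is ignored until someone decides where it belongs, which is easier to audit than forwarding everything to every routine.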

| func (r *BlockchainReactor) demux() { | |||

| var lastRate = 0.0 | |||

| var lastHundred = time.Now() | |||

| var ( | |||

| processBlockFreq = 20 * time.Millisecond | |||

| doProcessBlockCh = make(chan struct{}, 1) | |||

| doProcessBlockTk = time.NewTicker(processBlockFreq) | |||

| prunePeerFreq = 1 * time.Second | |||

| doPrunePeerCh = make(chan struct{}, 1) | |||

| doPrunePeerTk = time.NewTicker(prunePeerFreq) | |||

| scheduleFreq = 20 * time.Millisecond | |||

| doScheduleCh = make(chan struct{}, 1) | |||

| doScheduleTk = time.NewTicker(scheduleFreq) | |||

| statusFreq = 10 * time.Second | |||

| doStatusCh = make(chan struct{}, 1) | |||

| doStatusTk = time.NewTicker(statusFreq) | |||

| ) | |||

| // XXX: Extract timers to make testing atemporal | |||

| for { | |||

| select { | |||

| // Pacers: send at most per frequency but don't saturate | |||

| case <-doProcessBlockTk.C: | |||

| select { | |||

| case doProcessBlockCh <- struct{}{}: | |||

| default: | |||

| } | |||

| case <-doPrunePeerTk.C: | |||

| select { | |||

| case doPrunePeerCh <- struct{}{}: | |||

| default: | |||

| } | |||

| case <-doScheduleTk.C: | |||

| select { | |||

| case doScheduleCh <- struct{}{}: | |||

| default: | |||

| } | |||

| case <-doStatusTk.C: | |||

| select { | |||

| case doStatusCh <- struct{}{}: | |||

| default: | |||

| } | |||

| // Tickers: perform tasks periodically | |||

| case <-doScheduleCh: | |||

| r.scheduler.send(rTrySchedule{time: time.Now()}) | |||

| case <-doPrunePeerCh: | |||

| r.scheduler.send(rTryPrunePeer{time: time.Now()}) | |||

| case <-doProcessBlockCh: | |||

| r.processor.send(rProcessBlock{}) | |||

| case <-doStatusCh: | |||

| r.io.broadcastStatusRequest(r.SyncHeight()) | |||

| // Events from peers | |||

| case event := <-r.events: | |||

| // XXX: check for backpressure | |||

| r.scheduler.send(event) | |||

| r.processor.send(event) | |||

| case <-r.stopDemux: | |||

| r.logger.Info("demuxing stopped") | |||

| return | |||

| switch event := event.(type) { | |||

| case bcStatusResponse: | |||

| r.setMaxPeerHeight(event.height) | |||

| r.scheduler.send(event) | |||

| case bcAddNewPeer, bcRemovePeer, bcBlockResponse, bcNoBlockResponse: | |||

| r.scheduler.send(event) | |||

| } | |||

| // Incremental events from scheduler | |||

| case event := <-r.scheduler.next(): | |||

| r.processor.send(event) | |||

| switch event := event.(type) { | |||

| case scBlockReceived: | |||

| r.processor.send(event) | |||

| case scPeerError: | |||

| r.processor.send(event) | |||

| r.reporter.Report(behaviour.BadMessage(event.peerID, "scPeerError")) | |||

| case scBlockRequest: | |||

| r.io.sendBlockRequest(event.peerID, event.height) | |||

| case scFinishedEv: | |||

| r.processor.send(event) | |||

| r.scheduler.stop() | |||

| } | |||

| // Incremental events from processor | |||

| case event := <-r.processor.next(): | |||

| r.scheduler.send(event) | |||

| switch event := event.(type) { | |||

| case pcBlockProcessed: | |||

| r.setSyncHeight(event.height) | |||

| if r.syncHeight%100 == 0 { | |||

| lastRate = 0.9*lastRate + 0.1*(100/time.Since(lastHundred).Seconds()) | |||

| r.logger.Info("Fast Sync Rate", "height", r.syncHeight, | |||

| "max_peer_height", r.maxPeerHeight, "blocks/s", lastRate) | |||

| lastHundred = time.Now() | |||

| } | |||

| r.scheduler.send(event) | |||

| case pcBlockVerificationFailure: | |||

| r.scheduler.send(event) | |||

| case pcFinished: | |||

| r.io.trySwitchToConsensus(event.tmState, event.blocksSynced) | |||

| r.processor.stop() | |||

| } | |||

| // Terminal events from scheduler | |||

| case err := <-r.scheduler.final(): | |||

| r.logger.Info(fmt.Sprintf("scheduler final %s", err)) | |||

| case err := <-r.processor.final(): | |||

| r.logger.Info(fmt.Sprintf("processor final %s", err)) | |||

| // XXX: switch to consensus | |||

| // send the processor stop? | |||

| // Terminal event from processor | |||

| case event := <-r.processor.final(): | |||

| r.logger.Info(fmt.Sprintf("processor final %s", event)) | |||

| case <-r.stopDemux: | |||

| r.logger.Info("demuxing stopped") | |||

| return | |||

| } | |||

| } | |||

| } | |||
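The "pacer" cases in `demux` pair each ticker with a one-slot channel and a non-blocking send, so that a slow consumer sees at most one pending notification instead of a backlog of ticks. A small sketch of that idea using only the standard library, where `tryNotify` and `runPaced` are hypothetical helper names:

```go
package main

import (
	"fmt"
	"time"
)

// tryNotify performs a non-blocking send on a one-slot channel.
// It returns false when a notification is already pending, so ticks coalesce.
func tryNotify(ch chan struct{}) bool {
	select {
	case ch <- struct{}{}:
		return true
	default:
		return false
	}
}

// runPaced runs work at most once per pending notification until it has run n times.
func runPaced(freq time.Duration, n int, work func()) {
	ticker := time.NewTicker(freq)
	defer ticker.Stop()
	pending := make(chan struct{}, 1)
	for done := 0; done < n; {
		select {
		case <-ticker.C:
			tryNotify(pending) // dropped silently if one is already queued
		case <-pending:
			work()
			done++
		}
	}
}

func main() {
	count := 0
	runPaced(5*time.Millisecond, 3, func() { count++ })
	fmt.Println("work ran", count, "times")
}
```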

| func (r *Reactor) Stop() { | |||

| // Stop implements cmn.Service interface. | |||

| func (r *BlockchainReactor) Stop() error { | |||

| r.logger.Info("reactor stopping") | |||

| r.ticker.Stop() | |||

| r.scheduler.stop() | |||

| r.processor.stop() | |||

| close(r.stopDemux) | |||

| close(r.events) | |||

| r.logger.Info("reactor stopped") | |||

| return nil | |||

| } | |||
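`Stop` ends the demux loop by closing `stopDemux`; closing a channel wakes every receiver at once, which makes it a convenient shutdown signal. The sketch below shows that pattern in a reduced form. The `worker` type is hypothetical, and unlike the reactor it also waits for its loop to exit before returning:

```go
package main

import (
	"fmt"
	"sync"
)

// worker is a hypothetical loop that handles events until its stop channel is closed.
type worker struct {
	events  chan int
	stop    chan struct{}
	done    sync.WaitGroup
	handled []int
}

func newWorker() *worker {
	return &worker{
		events: make(chan int), // unbuffered: a send returns only once the loop has received it
		stop:   make(chan struct{}),
	}
}

func (w *worker) start() {
	w.done.Add(1)
	go func() {
		defer w.done.Done()
		for {
			select {
			case ev := <-w.events:
				w.handled = append(w.handled, ev)
			case <-w.stop:
				return
			}
		}
	}()
}

// shutdown closes the stop channel and waits for the loop goroutine to exit.
func (w *worker) shutdown() {
	close(w.stop)
	w.done.Wait()
}

func main() {
	w := newWorker()
	w.start()
	w.events <- 1
	w.events <- 2
	w.shutdown()
	fmt.Println("handled", len(w.handled), "events")
}
```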

| const ( | |||

| // NOTE: keep up to date with bcBlockResponseMessage | |||

| bcBlockResponseMessagePrefixSize = 4 | |||

| bcBlockResponseMessageFieldKeySize = 1 | |||

| maxMsgSize = types.MaxBlockSizeBytes + | |||

| bcBlockResponseMessagePrefixSize + | |||

| bcBlockResponseMessageFieldKeySize | |||

| ) | |||

| // BlockchainMessage is a generic message for this reactor. | |||

| type BlockchainMessage interface { | |||

| ValidateBasic() error | |||

| } | |||

| // RegisterBlockchainMessages registers the fast sync messages for amino encoding. | |||

| func RegisterBlockchainMessages(cdc *amino.Codec) { | |||

| cdc.RegisterInterface((*BlockchainMessage)(nil), nil) | |||

| cdc.RegisterConcrete(&bcBlockRequestMessage{}, "tendermint/blockchain/BlockRequest", nil) | |||

| cdc.RegisterConcrete(&bcBlockResponseMessage{}, "tendermint/blockchain/BlockResponse", nil) | |||

| cdc.RegisterConcrete(&bcNoBlockResponseMessage{}, "tendermint/blockchain/NoBlockResponse", nil) | |||

| cdc.RegisterConcrete(&bcStatusResponseMessage{}, "tendermint/blockchain/StatusResponse", nil) | |||

| cdc.RegisterConcrete(&bcStatusRequestMessage{}, "tendermint/blockchain/StatusRequest", nil) | |||

| } | |||

| func (r *Reactor) Receive(event Event) { | |||

| // XXX: decode and serialize write events | |||

| // TODO: backpressure | |||

| func decodeMsg(bz []byte) (msg BlockchainMessage, err error) { | |||

| if len(bz) > maxMsgSize { | |||

| return msg, fmt.Errorf("msg exceeds max size (%d > %d)", len(bz), maxMsgSize) | |||

| } | |||

| err = cdc.UnmarshalBinaryBare(bz, &msg) | |||

| return | |||

| } | |||
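`decodeMsg` rejects oversized input before handing the bytes to the codec, and `Receive` then calls `ValidateBasic` on the decoded message. A compact sketch of that size-check, decode, validate sequence follows. JSON stands in for amino purely to keep the example within the standard library, and `blockRequest`, `decodeRequest`, and the 64-byte limit are assumptions made for illustration:

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// maxMsgSize is a hypothetical limit for this sketch;
// the reactor derives its own from the maximum block size.
const maxMsgSize = 64

// message mirrors the BlockchainMessage idea: every decoded message can validate itself.
type message interface {
	ValidateBasic() error
}

type blockRequest struct {
	Height int64 `json:"height"`
}

func (m *blockRequest) ValidateBasic() error {
	if m.Height < 0 {
		return errors.New("negative Height")
	}
	return nil
}

// decodeRequest checks the size first, then decodes, then validates.
func decodeRequest(bz []byte) (message, error) {
	if len(bz) > maxMsgSize {
		return nil, fmt.Errorf("msg exceeds max size (%d > %d)", len(bz), maxMsgSize)
	}
	msg := &blockRequest{}
	if err := json.Unmarshal(bz, msg); err != nil {
		return nil, err
	}
	if err := msg.ValidateBasic(); err != nil {
		return nil, err
	}
	return msg, nil
}

func main() {
	for _, in := range []string{`{"height":7}`, `{"height":-1}`} {
		msg, err := decodeRequest([]byte(in))
		fmt.Println(msg != nil, err)
	}
}
```

Checking the length before decoding keeps a misbehaving peer from forcing the node to parse arbitrarily large payloads.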

| // Receive implements Reactor by handling different message types. | |||

| func (r *BlockchainReactor) Receive(chID byte, src p2p.Peer, msgBytes []byte) { | |||

| msg, err := decodeMsg(msgBytes) | |||

| if err != nil { | |||

| r.logger.Error("error decoding message", | |||

| "src", src.ID(), "chId", chID, "msg", msg, "err", err, "bytes", msgBytes) | |||

| _ = r.reporter.Report(behaviour.BadMessage(src.ID(), err.Error())) | |||

| return | |||

| } | |||

| if err = msg.ValidateBasic(); err != nil { | |||

| r.logger.Error("peer sent us invalid msg", "peer", src, "msg", msg, "err", err) | |||

| _ = r.reporter.Report(behaviour.BadMessage(src.ID(), err.Error())) | |||

| return | |||

| } | |||

| r.logger.Debug("Receive", "src", src.ID(), "chID", chID, "msg", msg) | |||

| switch msg := msg.(type) { | |||

| case *bcStatusRequestMessage: | |||

| if err := r.io.sendStatusResponse(r.store.Height(), src.ID()); err != nil { | |||

| r.logger.Error("Could not send status message to peer", "src", src) | |||

| } | |||

| case *bcBlockRequestMessage: | |||

| block := r.store.LoadBlock(msg.Height) | |||

| if block != nil { | |||

| if err = r.io.sendBlockToPeer(block, src.ID()); err != nil { | |||

| r.logger.Error("Could not send block message to peer", "err", err) | |||

| } | |||

| } else { | |||

| r.logger.Info("peer asking for a block we don't have", "src", src, "height", msg.Height) | |||

| peerID := src.ID() | |||

| if err = r.io.sendBlockNotFound(msg.Height, peerID); err != nil { | |||

| r.logger.Error("Couldn't send block not found", "err", err) | |||

| } | |||

| } | |||

| case *bcStatusResponseMessage: | |||

| r.events <- bcStatusResponse{peerID: src.ID(), height: msg.Height} | |||

| case *bcBlockResponseMessage: | |||

| r.events <- bcBlockResponse{ | |||

| peerID: src.ID(), | |||

| block: msg.Block, | |||

| size: int64(len(msgBytes)), | |||

| time: time.Now(), | |||

| } | |||

| case *bcNoBlockResponseMessage: | |||

| r.events <- bcNoBlockResponse{peerID: src.ID(), height: msg.Height, time: time.Now()} | |||

| } | |||

| } | |||

| // AddPeer implements Reactor interface | |||

| func (r *BlockchainReactor) AddPeer(peer p2p.Peer) { | |||

| err := r.io.sendStatusResponse(r.store.Height(), peer.ID()) | |||

| if err != nil { | |||

| r.logger.Error("Could not send status message to new peer", "src", peer.ID(), "height", r.SyncHeight()) | |||

| } | |||

| r.events <- bcAddNewPeer{peerID: peer.ID()} | |||

| } | |||

| // RemovePeer implements Reactor interface. | |||

| func (r *BlockchainReactor) RemovePeer(peer p2p.Peer, reason interface{}) { | |||

| event := bcRemovePeer{ | |||

| peerID: peer.ID(), | |||

| reason: reason, | |||

| } | |||

| r.events <- event | |||

| } | |||

| func (r *Reactor) AddPeer() { | |||

| // TODO: add peer event and send to demuxer | |||

| // GetChannels implements Reactor | |||

| func (r *BlockchainReactor) GetChannels() []*p2p.ChannelDescriptor { | |||

| return []*p2p.ChannelDescriptor{ | |||

| { | |||

| ID: BlockchainChannel, | |||

| Priority: 10, | |||

| SendQueueCapacity: 2000, | |||

| RecvBufferCapacity: 50 * 4096, | |||

| RecvMessageCapacity: maxMsgSize, | |||

| }, | |||

| } | |||

| } | |||

blockchain/v2/reactor_test.go (+504 -9)

| @ -1,22 +1,517 @@ | |||

| package v2 | |||

| import ( | |||

| "net" | |||

| "os" | |||

| "sort" | |||

| "sync" | |||

| "testing" | |||

| "time" | |||

| "github.com/pkg/errors" | |||

| "github.com/stretchr/testify/assert" | |||

| abci "github.com/tendermint/tendermint/abci/types" | |||

| "github.com/tendermint/tendermint/behaviour" | |||

| cfg "github.com/tendermint/tendermint/config" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/libs/service" | |||

| "github.com/tendermint/tendermint/mock" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/p2p/conn" | |||

| "github.com/tendermint/tendermint/proxy" | |||

| sm "github.com/tendermint/tendermint/state" | |||

| "github.com/tendermint/tendermint/store" | |||

| "github.com/tendermint/tendermint/types" | |||

| tmtime "github.com/tendermint/tendermint/types/time" | |||

| dbm "github.com/tendermint/tm-db" | |||

| ) | |||

| func TestReactor(t *testing.T) { | |||

| type mockPeer struct { | |||

| service.Service | |||

| id p2p.ID | |||

| } | |||

| func (mp mockPeer) FlushStop() {} | |||

| func (mp mockPeer) ID() p2p.ID { return mp.id } | |||

| func (mp mockPeer) RemoteIP() net.IP { return net.IP{} } | |||

| func (mp mockPeer) RemoteAddr() net.Addr { return &net.TCPAddr{IP: mp.RemoteIP(), Port: 8800} } | |||

| func (mp mockPeer) IsOutbound() bool { return true } | |||

| func (mp mockPeer) IsPersistent() bool { return true } | |||

| func (mp mockPeer) CloseConn() error { return nil } | |||

| func (mp mockPeer) NodeInfo() p2p.NodeInfo { | |||

| return p2p.DefaultNodeInfo{ | |||

| DefaultNodeID: "", | |||

| ListenAddr: "", | |||

| } | |||

| } | |||

| func (mp mockPeer) Status() conn.ConnectionStatus { return conn.ConnectionStatus{} } | |||

| func (mp mockPeer) SocketAddr() *p2p.NetAddress { return &p2p.NetAddress{} } | |||

| func (mp mockPeer) Send(byte, []byte) bool { return true } | |||

| func (mp mockPeer) TrySend(byte, []byte) bool { return true } | |||

| func (mp mockPeer) Set(string, interface{}) {} | |||

| func (mp mockPeer) Get(string) interface{} { return struct{}{} } | |||

| //nolint:unused | |||

| type mockBlockStore struct { | |||

| blocks map[int64]*types.Block | |||

| } | |||

| func (ml *mockBlockStore) Height() int64 { | |||

| return int64(len(ml.blocks)) | |||

| } | |||

| func (ml *mockBlockStore) LoadBlock(height int64) *types.Block { | |||

| return ml.blocks[height] | |||

| } | |||

| func (ml *mockBlockStore) SaveBlock(block *types.Block, part *types.PartSet, commit *types.Commit) { | |||

| ml.blocks[block.Height] = block | |||

| } | |||

| type mockBlockApplier struct { | |||

| } | |||

| // XXX: Add whitelist/blacklist? | |||

| func (mba *mockBlockApplier) ApplyBlock(state sm.State, blockID types.BlockID, block *types.Block) (sm.State, error) { | |||

| state.LastBlockHeight++ | |||

| return state, nil | |||

| } | |||

| type mockSwitchIo struct { | |||

| mtx sync.Mutex | |||

| switchedToConsensus bool | |||

| numStatusResponse int | |||

| numBlockResponse int | |||

| numNoBlockResponse int | |||

| } | |||

| func (sio *mockSwitchIo) sendBlockRequest(peerID p2p.ID, height int64) error { | |||

| return nil | |||

| } | |||

| func (sio *mockSwitchIo) sendStatusResponse(height int64, peerID p2p.ID) error { | |||

| sio.mtx.Lock() | |||

| defer sio.mtx.Unlock() | |||

| sio.numStatusResponse++ | |||

| return nil | |||

| } | |||

| func (sio *mockSwitchIo) sendBlockToPeer(block *types.Block, peerID p2p.ID) error { | |||

| sio.mtx.Lock() | |||

| defer sio.mtx.Unlock() | |||

| sio.numBlockResponse++ | |||

| return nil | |||

| } | |||

| func (sio *mockSwitchIo) sendBlockNotFound(height int64, peerID p2p.ID) error { | |||

| sio.mtx.Lock() | |||

| defer sio.mtx.Unlock() | |||

| sio.numNoBlockResponse++ | |||

| return nil | |||

| } | |||

| func (sio *mockSwitchIo) trySwitchToConsensus(state sm.State, blocksSynced int) { | |||

| sio.mtx.Lock() | |||

| defer sio.mtx.Unlock() | |||

| sio.switchedToConsensus = true | |||

| } | |||

| func (sio *mockSwitchIo) hasSwitchedToConsensus() bool { | |||

| sio.mtx.Lock() | |||

| defer sio.mtx.Unlock() | |||

| return sio.switchedToConsensus | |||

| } | |||

| func (sio *mockSwitchIo) broadcastStatusRequest(height int64) { | |||

| } | |||
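`mockSwitchIo` works because the reactor depends on the `iIO` interface rather than on the switch directly, so a test can substitute a type that only counts calls. The same idea in a reduced, self-contained form, where the `sender` interface, `countingSender`, and `greetPeers` are hypothetical names chosen for this sketch:

```go
package main

import (
	"fmt"
	"sync"
)

// sender is a hypothetical, narrowed version of the reactor's IO interface.
type sender interface {
	sendStatusResponse(height int64, peerID string) error
}

// countingSender records calls instead of touching the network.
type countingSender struct {
	mtx               sync.Mutex
	numStatusResponse int
}

func (s *countingSender) sendStatusResponse(height int64, peerID string) error {
	s.mtx.Lock()
	defer s.mtx.Unlock()
	s.numStatusResponse++
	return nil
}

// statusResponses returns the number of calls seen so far.
func (s *countingSender) statusResponses() int {
	s.mtx.Lock()
	defer s.mtx.Unlock()
	return s.numStatusResponse
}

// greetPeers is the code under test; it depends only on the sender interface.
func greetPeers(out sender, height int64, peers []string) {
	for _, p := range peers {
		_ = out.sendStatusResponse(height, p)
	}
}

func main() {
	mock := &countingSender{}
	greetPeers(mock, 10, []string{"P1", "P2"})
	fmt.Println("status responses sent:", mock.statusResponses())
}
```

The mutex matters when the code under test calls the mock from several goroutines, as the reactor does from `Receive` and `demux`.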

| type testReactorParams struct { | |||

| logger log.Logger | |||

| genDoc *types.GenesisDoc | |||

| privVals []types.PrivValidator | |||

| startHeight int64 | |||

| bufferSize int | |||

| mockA bool | |||

| } | |||

| func newTestReactor(p testReactorParams) *BlockchainReactor { | |||

| store, state, _ := newReactorStore(p.genDoc, p.privVals, p.startHeight) | |||

| reporter := behaviour.NewMockReporter() | |||

| var appl blockApplier | |||

| if p.mockA { | |||

| appl = &mockBlockApplier{} | |||

| } else { | |||

| app := &testApp{} | |||

| cc := proxy.NewLocalClientCreator(app) | |||

| proxyApp := proxy.NewAppConns(cc) | |||

| err := proxyApp.Start() | |||

| if err != nil { | |||

| panic(errors.Wrap(err, "error start app")) | |||

| } | |||

| db := dbm.NewMemDB() | |||

| appl = sm.NewBlockExecutor(db, p.logger, proxyApp.Consensus(), mock.Mempool{}, sm.MockEvidencePool{}) | |||

| sm.SaveState(db, state) | |||

| } | |||

| r := newReactor(state, store, reporter, appl, p.bufferSize) | |||

| logger := log.TestingLogger() | |||

| r.SetLogger(logger.With("module", "blockchain")) | |||

| return r | |||

| } | |||

| func TestReactorTerminationScenarios(t *testing.T) { | |||

| config := cfg.ResetTestRoot("blockchain_reactor_v2_test") | |||

| defer os.RemoveAll(config.RootDir) | |||

| genDoc, privVals := randGenesisDoc(config.ChainID(), 1, false, 30) | |||

| refStore, _, _ := newReactorStore(genDoc, privVals, 20) | |||

| params := testReactorParams{ | |||

| logger: log.TestingLogger(), | |||

| genDoc: genDoc, | |||

| privVals: privVals, | |||

| startHeight: 10, | |||

| bufferSize: 100, | |||

| mockA: true, | |||

| } | |||

| type testEvent struct { | |||

| evType string | |||

| peer string | |||

| height int64 | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| params testReactorParams | |||

| msgs []testEvent | |||

| }{ | |||

| { | |||

| name: "simple termination on max peer height - one peer", | |||

| params: params, | |||

| msgs: []testEvent{ | |||

| {evType: "AddPeer", peer: "P1"}, | |||

| {evType: "ReceiveS", peer: "P1", height: 13}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P1", height: 11}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P1", height: 12}, | |||

| {evType: "Process"}, | |||

| {evType: "ReceiveB", peer: "P1", height: 13}, | |||

| {evType: "Process"}, | |||

| }, | |||

| }, | |||

| { | |||

| name: "simple termination on max peer height - two peers", | |||

| params: params, | |||

| msgs: []testEvent{ | |||

| {evType: "AddPeer", peer: "P1"}, | |||

| {evType: "AddPeer", peer: "P2"}, | |||

| {evType: "ReceiveS", peer: "P1", height: 13}, | |||

| {evType: "ReceiveS", peer: "P2", height: 15}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P1", height: 11}, | |||

| {evType: "ReceiveB", peer: "P2", height: 12}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P1", height: 13}, | |||

| {evType: "Process"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 14}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 15}, | |||

| {evType: "Process"}, | |||

| }, | |||

| }, | |||

| { | |||

| name: "termination on max peer height - two peers, noBlock error", | |||

| params: params, | |||

| msgs: []testEvent{ | |||

| {evType: "AddPeer", peer: "P1"}, | |||

| {evType: "AddPeer", peer: "P2"}, | |||

| {evType: "ReceiveS", peer: "P1", height: 13}, | |||

| {evType: "ReceiveS", peer: "P2", height: 15}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveNB", peer: "P1", height: 11}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 12}, | |||

| {evType: "ReceiveB", peer: "P2", height: 11}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 13}, | |||

| {evType: "Process"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 14}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 15}, | |||

| {evType: "Process"}, | |||

| }, | |||

| }, | |||

| { | |||

| name: "termination on max peer height - two peers, remove one peer", | |||

| params: params, | |||

| msgs: []testEvent{ | |||

| {evType: "AddPeer", peer: "P1"}, | |||

| {evType: "AddPeer", peer: "P2"}, | |||

| {evType: "ReceiveS", peer: "P1", height: 13}, | |||

| {evType: "ReceiveS", peer: "P2", height: 15}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "RemovePeer", peer: "P1"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 12}, | |||

| {evType: "ReceiveB", peer: "P2", height: 11}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 13}, | |||

| {evType: "Process"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 14}, | |||

| {evType: "Process"}, | |||

| {evType: "BlockReq"}, | |||

| {evType: "ReceiveB", peer: "P2", height: 15}, | |||

| {evType: "Process"}, | |||

| }, | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| tt := tt | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| reactor := newTestReactor(tt.params) | |||

| reactor.Start() | |||

| reactor.reporter = behaviour.NewMockReporter() | |||

| mockSwitch := &mockSwitchIo{switchedToConsensus: false} | |||

| reactor.io = mockSwitch | |||

| // time for goroutines to start | |||

| time.Sleep(time.Millisecond) | |||

| for _, step := range tt.msgs { | |||

| switch step.evType { | |||

| case "AddPeer": | |||

| reactor.scheduler.send(bcAddNewPeer{peerID: p2p.ID(step.peer)}) | |||

| case "RemovePeer": | |||

| reactor.scheduler.send(bcRemovePeer{peerID: p2p.ID(step.peer)}) | |||

| case "ReceiveS": | |||

| reactor.scheduler.send(bcStatusResponse{ | |||

| peerID: p2p.ID(step.peer), | |||

| height: step.height, | |||

| time: time.Now(), | |||

| }) | |||

| case "ReceiveB": | |||

| reactor.scheduler.send(bcBlockResponse{ | |||

| peerID: p2p.ID(step.peer), | |||

| block: refStore.LoadBlock(step.height), | |||

| size: 10, | |||

| time: time.Now(), | |||

| }) | |||

| case "ReceiveNB": | |||

| reactor.scheduler.send(bcNoBlockResponse{ | |||

| peerID: p2p.ID(step.peer), | |||

| height: step.height, | |||

| time: time.Now(), | |||

| }) | |||

| case "BlockReq": | |||

| reactor.scheduler.send(rTrySchedule{time: time.Now()}) | |||

| case "Process": | |||

| reactor.processor.send(rProcessBlock{}) | |||

| } | |||

| // give time for messages to propagate between routines | |||

| time.Sleep(time.Millisecond) | |||

| } | |||

| // time for processor to finish and reactor to switch to consensus | |||

| time.Sleep(20 * time.Millisecond) | |||

| assert.True(t, mockSwitch.hasSwitchedToConsensus()) | |||

| reactor.Stop() | |||

| }) | |||

| } | |||

| } | |||

| func TestReactorHelperMode(t *testing.T) { | |||

| var ( | |||

| channelID = byte(0x40) | |||

| ) | |||

| config := cfg.ResetTestRoot("blockchain_reactor_v2_test") | |||

| defer os.RemoveAll(config.RootDir) | |||

| genDoc, privVals := randGenesisDoc(config.ChainID(), 1, false, 30) | |||

| params := testReactorParams{ | |||

| logger: log.TestingLogger(), | |||

| genDoc: genDoc, | |||

| privVals: privVals, | |||

| startHeight: 20, | |||

| bufferSize: 100, | |||

| mockA: true, | |||

| } | |||

| type testEvent struct { | |||

| peer string | |||

| event interface{} | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| params testReactorParams | |||

| msgs []testEvent | |||

| }{ | |||

| { | |||

| name: "status request", | |||

| params: params, | |||

| msgs: []testEvent{ | |||

| {"P1", bcStatusRequestMessage{}}, | |||

| {"P1", bcBlockRequestMessage{Height: 13}}, | |||

| {"P1", bcBlockRequestMessage{Height: 20}}, | |||

| {"P1", bcBlockRequestMessage{Height: 22}}, | |||

| }, | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| tt := tt | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| reactor := newTestReactor(tt.params) | |||

| reactor.Start() | |||

| mockSwitch := &mockSwitchIo{switchedToConsensus: false} | |||

| reactor.io = mockSwitch | |||

| for i := 0; i < len(tt.msgs); i++ { | |||

| step := tt.msgs[i] | |||

| switch ev := step.event.(type) { | |||

| case bcStatusRequestMessage: | |||

| old := mockSwitch.numStatusResponse | |||

| reactor.Receive(channelID, mockPeer{id: p2p.ID(step.peer)}, cdc.MustMarshalBinaryBare(ev)) | |||

| assert.Equal(t, old+1, mockSwitch.numStatusResponse) | |||

| case bcBlockRequestMessage: | |||

| if ev.Height > tt.params.startHeight { | |||

| old := mockSwitch.numNoBlockResponse | |||

| reactor.Receive(channelID, mockPeer{id: p2p.ID(step.peer)}, cdc.MustMarshalBinaryBare(ev)) | |||

| assert.Equal(t, old+1, mockSwitch.numNoBlockResponse) | |||

| } else { | |||

| old := mockSwitch.numBlockResponse | |||

| reactor.Receive(channelID, mockPeer{id: p2p.ID(step.peer)}, cdc.MustMarshalBinaryBare(ev)) | |||

| assert.Equal(t, old+1, mockSwitch.numBlockResponse) | |||

| } | |||

| } | |||

| } | |||

| reactor.Stop() | |||

| }) | |||

| } | |||

| } | |||

| //---------------------------------------------- | |||

| // utility funcs | |||

| func makeTxs(height int64) (txs []types.Tx) { | |||

| for i := 0; i < 10; i++ { | |||

| txs = append(txs, types.Tx([]byte{byte(height), byte(i)})) | |||

| } | |||

| return txs | |||

| } | |||

| func makeBlock(height int64, state sm.State, lastCommit *types.Commit) *types.Block { | |||

| block, _ := state.MakeBlock(height, makeTxs(height), lastCommit, nil, state.Validators.GetProposer().Address) | |||

| return block | |||

| } | |||

| type testApp struct { | |||

| abci.BaseApplication | |||

| } | |||

| func randGenesisDoc(chainID string, numValidators int, randPower bool, minPower int64) ( | |||

| *types.GenesisDoc, []types.PrivValidator) { | |||

| validators := make([]types.GenesisValidator, numValidators) | |||

| privValidators := make([]types.PrivValidator, numValidators) | |||

| for i := 0; i < numValidators; i++ { | |||

| val, privVal := types.RandValidator(randPower, minPower) | |||

| validators[i] = types.GenesisValidator{ | |||

| PubKey: val.PubKey, | |||

| Power: val.VotingPower, | |||

| } | |||

| privValidators[i] = privVal | |||

| } | |||

| sort.Sort(types.PrivValidatorsByAddress(privValidators)) | |||

| return &types.GenesisDoc{ | |||

| GenesisTime: tmtime.Now(), | |||

| ChainID: chainID, | |||

| Validators: validators, | |||

| }, privValidators | |||

| } | |||

| // Why are we importing the entire blockExecutor dependency graph here | |||

| // when we have the facilities to | |||

| func newReactorStore( | |||

| genDoc *types.GenesisDoc, | |||

| privVals []types.PrivValidator, | |||

| maxBlockHeight int64) (*store.BlockStore, sm.State, *sm.BlockExecutor) { | |||

| if len(privVals) != 1 { | |||

| panic("only support one validator") | |||

| } | |||

| app := &testApp{} | |||

| cc := proxy.NewLocalClientCreator(app) | |||

| proxyApp := proxy.NewAppConns(cc) | |||

| err := proxyApp.Start() | |||

| if err != nil { | |||

| panic(errors.Wrap(err, "error start app")) | |||

| } | |||

| stateDB := dbm.NewMemDB() | |||

| blockStore := store.NewBlockStore(dbm.NewMemDB()) | |||

| state, err := sm.LoadStateFromDBOrGenesisDoc(stateDB, genDoc) | |||

| if err != nil { | |||

| panic(errors.Wrap(err, "error constructing state from genesis file")) | |||

| } | |||

| db := dbm.NewMemDB() | |||

| blockExec := sm.NewBlockExecutor(db, log.TestingLogger(), proxyApp.Consensus(), | |||

| mock.Mempool{}, sm.MockEvidencePool{}) | |||

| sm.SaveState(db, state) | |||

| // add blocks in | |||

| for blockHeight := int64(1); blockHeight <= maxBlockHeight; blockHeight++ { | |||

| lastCommit := types.NewCommit(blockHeight-1, 0, types.BlockID{}, nil) | |||

| if blockHeight > 1 { | |||

| lastBlockMeta := blockStore.LoadBlockMeta(blockHeight - 1) | |||

| lastBlock := blockStore.LoadBlock(blockHeight - 1) | |||

| vote, err := types.MakeVote( | |||

| lastBlock.Header.Height, | |||

| lastBlockMeta.BlockID, | |||

| state.Validators, | |||

| privVals[0], | |||

| lastBlock.Header.ChainID) | |||

| if err != nil { | |||

| panic(err) | |||

| } | |||

| lastCommit = types.NewCommit(vote.Height, vote.Round, | |||

| lastBlockMeta.BlockID, []types.CommitSig{vote.CommitSig()}) | |||

| } | |||

| thisBlock := makeBlock(blockHeight, state, lastCommit) | |||

| thisParts := thisBlock.MakePartSet(types.BlockPartSizeBytes) | |||

| blockID := types.BlockID{Hash: thisBlock.Hash(), PartsHeader: thisParts.Header()} | |||

| state, err = blockExec.ApplyBlock(state, blockID, thisBlock) | |||

| if err != nil { | |||

| panic(errors.Wrap(err, "error apply block")) | |||

| } | |||

| blockStore.SaveBlock(thisBlock, thisParts, lastCommit) | |||

| } | |||

| return blockStore, state, blockExec | |||

| } | |||