GPG key ID: 7B6881D965918214 (no known key found for this signature in database)

117 changed files with 7174 additions and 1715 deletions


- .circleci/config.yml (+38 -5)
- .gitignore (+2 -0)
- .golangci.yml (+0 -1)
- CHANGELOG.md (+31 -4)
- CHANGELOG_PENDING.md (+1 -1)
- CONTRIBUTING.md (+37 -25)
- Makefile (+18 -2)
- ROADMAP.md (+0 -23)
- abci/cmd/abci-cli/abci-cli.go (+7 -5)
- abci/example/kvstore/kvstore_test.go (+1 -1)
- abci/types/protoreplace/protoreplace.go (+1 -1)
- blockchain/v0/pool.go (+4 -3)
- blockchain/v0/reactor_test.go (+80 -4)
- blockchain/v1/reactor_test.go (+80 -4)
- blockchain/v2/schedule.go (+387 -0)
- blockchain/v2/schedule_test.go (+272 -0)
- cmd/contract_tests/main.go (+34 -0)
- cmd/priv_val_server/main.go (+5 -3)
- cmd/tendermint/commands/lite.go (+3 -2)
- config/config.go (+4 -4)
- config/config_test.go (+114 -0)
- config/toml.go (+4 -3)
- consensus/reactor_test.go (+252 -0)
- consensus/replay.go (+15 -11)
- consensus/replay_test.go (+1 -2)
- consensus/state.go (+14 -10)
- crypto/merkle/proof.go (+8 -8)
- crypto/merkle/proof_key_path.go (+4 -4)
- crypto/merkle/proof_simple_value.go (+6 -5)
- crypto/merkle/proof_test.go (+4 -4)
- crypto/merkle/simple_proof.go (+7 -7)
- docs/architecture/adr-042-state-sync.md (+239 -0)
- docs/architecture/adr-044-lite-client-with-weak-subjectivity.md (+141 -0)
- docs/architecture/img/state-sync.png (BIN)
- docs/guides/java.md (+600 -0)
- docs/guides/kotlin.md (+31 -32)
- docs/spec/abci/apps.md (+2 -2)
- docs/spec/consensus/light-client.md (+319 -103)
- docs/spec/p2p/connection.md (+1 -1)
- docs/spec/reactors/mempool/messages.md (+3 -3)
- docs/spec/rpc/index.html (+25 -0)
- docs/spec/rpc/swagger.yaml (+2679 -0)
- docs/tendermint-core/configuration.md (+4 -3)
- dredd.yml (+33 -0)
- go.mod (+1 -0)
- go.sum (+2 -2)
- libs/autofile/group.go (+4 -3)
- libs/common/async_test.go (+8 -6)
- libs/common/errors.go (+1 -1)
- libs/log/tmfmt_logger.go (+5 -4)
- lite/base_verifier.go (+4 -2)
- lite/errors/errors.go (+13 -25)
- lite/proxy/errors.go (+4 -7)
- lite/proxy/query.go (+4 -3)
- lite/proxy/verifier.go (+4 -3)
- mempool/clist_mempool.go (+2 -2)
- mempool/clist_mempool_test.go (+3 -3)
- mempool/reactor.go (+6 -5)
- node/node.go (+17 -29)
- node/node_test.go (+18 -11)
- p2p/conn/connection.go (+6 -3)
- p2p/fuzz.go (+4 -3)
- p2p/netaddress.go (+15 -12)
- p2p/node_info_test.go (+1 -1)
- p2p/peer_test.go (+4 -3)
- p2p/switch.go (+2 -3)
- p2p/test_util.go (+3 -1)
- privval/doc.go (+6 -6)
- privval/errors.go (+13 -2)
- privval/file_deprecated_test.go (+4 -4)
- privval/file_test.go (+2 -1)
- privval/messages.go (+13 -10)
- privval/signer_client.go (+131 -0)
- privval/signer_client_test.go (+257 -0)
- privval/signer_dialer_endpoint.go (+84 -0)
- privval/signer_endpoint.go (+156 -0)
- privval/signer_listener_endpoint.go (+198 -0)
- privval/signer_listener_endpoint_test.go (+198 -0)
- privval/signer_remote.go (+0 -192)
- privval/signer_remote_test.go (+0 -68)
- privval/signer_requestHandler.go (+44 -0)
- privval/signer_server.go (+107 -0)
- privval/signer_service_endpoint.go (+0 -139)
- privval/signer_validator_endpoint.go (+0 -230)
- privval/signer_validator_endpoint_test.go (+0 -506)
- privval/socket_dialers_test.go (+27 -4)
- privval/socket_listeners.go (+2 -2)
- privval/utils.go (+49 -7)
- privval/utils_test.go (+3 -4)
- rpc/client/httpclient.go (+2 -0)
- rpc/core/routes.go (+1 -1)
- rpc/lib/client/http_client.go (+4 -3)
- rpc/lib/server/handlers.go (+4 -3)
- scripts/get_nodejs.sh (+14 -0)
- scripts/get_tools.sh (+1 -0)
- scripts/gitian-build.sh (+1 -1)
- scripts/gitian-descriptors/gitian-darwin.yml (+2 -2)
- scripts/gitian-descriptors/gitian-linux.yml (+2 -2)
- scripts/gitian-descriptors/gitian-windows.yml (+2 -2)
- state/execution.go (+1 -2)


ROADMAP.md (+0 -23)

@@ -1,23 +0,0 @@

```markdown
# Roadmap

BREAKING CHANGES:
- Better support for injecting randomness
- Upgrade consensus for more real-time use of evidence

FEATURES:
- Use the chain as its own CA for nodes and validators
- Tooling to run multiple blockchains/apps, possibly in a single process
- State syncing (without transaction replay)
- Add authentication and rate-limiting to the RPC

IMPROVEMENTS:
- Improve subtleties around mempool caching and logic
- Consensus optimizations:
  - cache block parts for faster agreement after round changes
  - propagate block parts rarest first
- Better testing of the consensus state machine (ie. use a DSL)
- Auto compiled serialization/deserialization code instead of go-wire reflection

BUG FIXES:
- Graceful handling/recovery for apps that have non-determinism or fail to halt
- Graceful handling/recovery for violations of safety, or liveness
```


blockchain/v2/schedule.go (+387 -0)

@@ -0,0 +1,387 @@

```go
// nolint:unused
package v2

import (
	"fmt"
	"math"
	"math/rand"
	"time"

	"github.com/tendermint/tendermint/p2p"
)

type Event interface{}

type blockState int

const (
	blockStateUnknown blockState = iota
	blockStateNew
	blockStatePending
	blockStateReceived
	blockStateProcessed
)

func (e blockState) String() string {
	switch e {
	case blockStateUnknown:
		return "Unknown"
	case blockStateNew:
		return "New"
	case blockStatePending:
		return "Pending"
	case blockStateReceived:
		return "Received"
	case blockStateProcessed:
		return "Processed"
	default:
		return fmt.Sprintf("unknown blockState: %d", e)
	}
}

type peerState int

const (
	// Typed so that these constants are peerState values rather than untyped ints.
	peerStateNew peerState = iota
	peerStateReady
	peerStateRemoved
)

func (e peerState) String() string {
	switch e {
	case peerStateNew:
		return "New"
	case peerStateReady:
		return "Ready"
	case peerStateRemoved:
		return "Removed"
	default:
		return fmt.Sprintf("unknown peerState: %d", e)
	}
}

type scPeer struct {
	peerID      p2p.ID
	state       peerState
	height      int64
	lastTouched time.Time
	lastRate    int64
}

func newScPeer(peerID p2p.ID) *scPeer {
	return &scPeer{
		peerID:      peerID,
		state:       peerStateNew,
		height:      -1,
		lastTouched: time.Time{},
	}
}

// The schedule is a composite data structure which allows a scheduler to keep
// track of which blocks have been scheduled into which state.
type schedule struct {
	initHeight int64
	// the blockState of each known height
	blockStates map[int64]blockState
	// a map of peerID to the schedule-specific peer struct `scPeer`, used to
	// keep track of peer-specific state
	peers map[p2p.ID]*scPeer
	// a map of heights to the peer we are waiting for a response from
	pendingBlocks map[int64]p2p.ID
	// the time at which a block was put in blockStatePending
	pendingTime map[int64]time.Time
	// the peerID of the peer which put the block in blockStateReceived
	receivedBlocks map[int64]p2p.ID
}

func newSchedule(initHeight int64) *schedule {
	sc := schedule{
		initHeight:     initHeight,
		blockStates:    make(map[int64]blockState),
		peers:          make(map[p2p.ID]*scPeer),
		pendingBlocks:  make(map[int64]p2p.ID),
		pendingTime:    make(map[int64]time.Time),
		receivedBlocks: make(map[int64]p2p.ID),
	}

	sc.setStateAtHeight(initHeight, blockStateNew)

	return &sc
}

func (sc *schedule) addPeer(peerID p2p.ID) error {
	if _, ok := sc.peers[peerID]; ok {
		return fmt.Errorf("Cannot add duplicate peer %s", peerID)
	}
	sc.peers[peerID] = newScPeer(peerID)
	return nil
}

func (sc *schedule) touchPeer(peerID p2p.ID, now time.Time) error {
	peer, ok := sc.peers[peerID]
	if !ok {
		return fmt.Errorf("Couldn't find peer %s", peerID)
	}

	if peer.state == peerStateRemoved {
		return fmt.Errorf("Tried to touch peer in peerStateRemoved")
	}

	peer.lastTouched = now

	return nil
}

func (sc *schedule) removePeer(peerID p2p.ID) error {
	peer, ok := sc.peers[peerID]
	if !ok {
		return fmt.Errorf("Couldn't find peer %s", peerID)
	}

	if peer.state == peerStateRemoved {
		return fmt.Errorf("Tried to remove peer %s in peerStateRemoved", peerID)
	}

	// Return blocks pending from, or received from, this peer to blockStateNew.
	for height, pendingPeerID := range sc.pendingBlocks {
		if pendingPeerID == peerID {
			sc.setStateAtHeight(height, blockStateNew)
			delete(sc.pendingTime, height)
			delete(sc.pendingBlocks, height)
		}
	}

	for height, rcvPeerID := range sc.receivedBlocks {
		if rcvPeerID == peerID {
			sc.setStateAtHeight(height, blockStateNew)
			delete(sc.receivedBlocks, height)
		}
	}

	peer.state = peerStateRemoved

	return nil
}

func (sc *schedule) setPeerHeight(peerID p2p.ID, height int64) error {
	peer, ok := sc.peers[peerID]
	if !ok {
		return fmt.Errorf("Can't find peer %s", peerID)
	}

	if peer.state == peerStateRemoved {
		return fmt.Errorf("Cannot set peer height for a peer in peerStateRemoved")
	}

	if height < peer.height {
		return fmt.Errorf("Cannot move peer height lower from %d to %d", peer.height, height)
	}

	peer.height = height
	peer.state = peerStateReady
	for i := sc.minHeight(); i <= height; i++ {
		if sc.getStateAtHeight(i) == blockStateUnknown {
			sc.setStateAtHeight(i, blockStateNew)
		}
	}

	return nil
}

func (sc *schedule) getStateAtHeight(height int64) blockState {
	if height < sc.initHeight {
		return blockStateProcessed
	}
	if state, ok := sc.blockStates[height]; ok {
		return state
	}
	return blockStateUnknown
}

func (sc *schedule) getPeersAtHeight(height int64) []*scPeer {
	peers := []*scPeer{}
	for _, peer := range sc.peers {
		if peer.height >= height {
			peers = append(peers, peer)
		}
	}

	return peers
}

func (sc *schedule) peersInactiveSince(duration time.Duration, now time.Time) []p2p.ID {
	peers := []p2p.ID{}
	for _, peer := range sc.peers {
		if now.Sub(peer.lastTouched) > duration {
			peers = append(peers, peer.peerID)
		}
	}

	return peers
}

func (sc *schedule) peersSlowerThan(minSpeed int64) []p2p.ID {
	peers := []p2p.ID{}
	for _, peer := range sc.peers {
		if peer.lastRate < minSpeed {
			peers = append(peers, peer.peerID)
		}
	}

	return peers
}

func (sc *schedule) setStateAtHeight(height int64, state blockState) {
	sc.blockStates[height] = state
}

func (sc *schedule) markReceived(peerID p2p.ID, height int64, size int64, now time.Time) error {
	peer, ok := sc.peers[peerID]
	if !ok {
		return fmt.Errorf("Can't find peer %s", peerID)
	}

	if peer.state == peerStateRemoved {
		return fmt.Errorf("Cannot receive blocks from removed peer %s", peerID)
	}

	if state := sc.getStateAtHeight(height); state != blockStatePending || sc.pendingBlocks[height] != peerID {
		return fmt.Errorf("Received block %d from peer %s without being requested", height, peerID)
	}

	pendingTime, ok := sc.pendingTime[height]
	if !ok || now.Sub(pendingTime) <= 0 {
		return fmt.Errorf("Clock error. Block %d received at %s but requested at %s",
			height, now, pendingTime)
	}

	// Divide in floating point: truncating a sub-second interval to whole
	// seconds would yield zero and cause a division-by-zero panic.
	peer.lastRate = int64(float64(size) / now.Sub(pendingTime).Seconds())

	sc.setStateAtHeight(height, blockStateReceived)
	delete(sc.pendingBlocks, height)
	delete(sc.pendingTime, height)

	sc.receivedBlocks[height] = peerID

	return nil
}

func (sc *schedule) markPending(peerID p2p.ID, height int64, now time.Time) error {
	peer, ok := sc.peers[peerID]
	if !ok {
		return fmt.Errorf("Can't find peer %s", peerID)
	}

	state := sc.getStateAtHeight(height)
	if state != blockStateNew {
		return fmt.Errorf("Block %d should be in blockStateNew but was %s", height, state)
	}

	if peer.state != peerStateReady {
		return fmt.Errorf("Cannot schedule %d from %s in %s", height, peerID, peer.state)
	}

	if height > peer.height {
		return fmt.Errorf("Cannot request height %d from peer %s who is at height %d",
			height, peerID, peer.height)
	}

	sc.setStateAtHeight(height, blockStatePending)
	sc.pendingBlocks[height] = peerID
	// XXX: to make this more accurate we can introduce a message from
	// the IO routine which indicates the time the request was put on the wire
	sc.pendingTime[height] = now

	return nil
}

func (sc *schedule) markProcessed(height int64) error {
	state := sc.getStateAtHeight(height)
	if state != blockStateReceived {
		return fmt.Errorf("Can't mark height %d processed from block state %s", height, state)
	}

	delete(sc.receivedBlocks, height)

	sc.setStateAtHeight(height, blockStateProcessed)

	return nil
}

// allBlocksProcessed returns true if all blocks are in blockStateProcessed,
// i.e. the schedule has been completed.
func (sc *schedule) allBlocksProcessed() bool {
	for _, state := range sc.blockStates {
		if state != blockStateProcessed {
			return false
		}
	}
	return true
}

// maxHeight returns the highest height in blockStateNew.
func (sc *schedule) maxHeight() int64 {
	var max int64
	for height, state := range sc.blockStates {
		if state == blockStateNew && height > max {
			max = height
		}
	}

	return max
}

// minHeight returns the lowest height in blockStateNew.
func (sc *schedule) minHeight() int64 {
	var min int64 = math.MaxInt64
	for height, state := range sc.blockStates {
		if state == blockStateNew && height < min {
			min = height
		}
	}

	return min
}

func (sc *schedule) pendingFrom(peerID p2p.ID) []int64 {
	heights := []int64{}
	for height, pendingPeerID := range sc.pendingBlocks {
		if pendingPeerID == peerID {
			heights = append(heights, height)
		}
	}
	return heights
}

func (sc *schedule) selectPeer(peers []*scPeer) *scPeer {
	// FIXME: properPeerSelector
	s := rand.NewSource(time.Now().Unix())
	r := rand.New(s)

	return peers[r.Intn(len(peers))]
}

// XXX: this duplicates the logic of peersInactiveSince and peersSlowerThan
func (sc *schedule) prunablePeers(peerTimeout time.Duration, minRecvRate int64, now time.Time) []p2p.ID {
	prunable := []p2p.ID{}
	for peerID, peer := range sc.peers {
		if now.Sub(peer.lastTouched) > peerTimeout || peer.lastRate < minRecvRate {
			prunable = append(prunable, peerID)
		}
	}

	return prunable
}

func (sc *schedule) numBlockInState(targetState blockState) uint32 {
	var num uint32
	for _, state := range sc.blockStates {
		if state == targetState {
			num++
		}
	}
	return num
}
```
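The schedule enforces a small per-height state machine: `markPending` requires `blockStateNew`, `markReceived` requires `blockStatePending`, `markProcessed` requires `blockStateReceived`, `setPeerHeight` promotes unknown heights to `blockStateNew`, and `removePeer` returns a removed peer's pending or received heights to `blockStateNew`. The following hypothetical, self-contained sketch (not part of this commit) writes those transitions down as an explicit table, which can help when reasoning about or testing the scheduler. `allowedTransitions` and `canTransition` are illustrative names only.

```go
package main

import "fmt"

// blockState mirrors the block states used by the v2 schedule.
type blockState int

const (
	blockStateUnknown blockState = iota
	blockStateNew
	blockStatePending
	blockStateReceived
	blockStateProcessed
)

// allowedTransitions is a hypothetical table inferred from the schedule's
// methods; blockStateProcessed has no outgoing transitions.
var allowedTransitions = map[blockState][]blockState{
	blockStateUnknown:  {blockStateNew},                      // setPeerHeight
	blockStateNew:      {blockStatePending},                  // markPending
	blockStatePending:  {blockStateReceived, blockStateNew},  // markReceived, removePeer
	blockStateReceived: {blockStateProcessed, blockStateNew}, // markProcessed, removePeer
}

// canTransition reports whether moving a height from one state to another
// is permitted by the table above.
func canTransition(from, to blockState) bool {
	for _, next := range allowedTransitions[from] {
		if next == to {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(canTransition(blockStateNew, blockStatePending))   // true
	fmt.Println(canTransition(blockStateNew, blockStateReceived))  // false
	fmt.Println(canTransition(blockStateProcessed, blockStateNew)) // false
}
```

Keeping the transitions in data rather than scattered `if` checks is one design option; the commit itself encodes them inline in each `mark*` method.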

blockchain/v2/schedule_test.go (+272 -0)

@@ -0,0 +1,272 @@

```go
package v2

import (
	"testing"
	"time"

	"github.com/stretchr/testify/assert"

	"github.com/tendermint/tendermint/p2p"
)

func TestScheduleInit(t *testing.T) {
	var (
		initHeight int64 = 5
		sc               = newSchedule(initHeight)
	)

	assert.Equal(t, blockStateNew, sc.getStateAtHeight(initHeight))
	assert.Equal(t, blockStateProcessed, sc.getStateAtHeight(initHeight-1))
	assert.Equal(t, blockStateUnknown, sc.getStateAtHeight(initHeight+1))
}

func TestAddPeer(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerIDTwo  p2p.ID = "2"
		sc                = newSchedule(initHeight)
	)

	assert.Nil(t, sc.addPeer(peerID))
	assert.Nil(t, sc.addPeer(peerIDTwo))
	assert.Error(t, sc.addPeer(peerID))
}

func TestTouchPeer(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		sc                = newSchedule(initHeight)
		now               = time.Now()
	)

	assert.Error(t, sc.touchPeer(peerID, now),
		"Touching an unknown peer should return an error")

	assert.Nil(t, sc.addPeer(peerID),
		"Adding a peer should return no error")
	assert.Nil(t, sc.touchPeer(peerID, now),
		"Touching a peer should return no error")

	threshold := 10 * time.Second
	assert.Empty(t, sc.peersInactiveSince(threshold, now.Add(9*time.Second)),
		"Expected no peers to be inactive after only 9 seconds")
	assert.Containsf(t, sc.peersInactiveSince(threshold, now.Add(11*time.Second)), peerID,
		"Expected %s to have been inactive for over 10 seconds", peerID)
}

func TestPeerHeight(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerHeight int64  = 20
		sc                = newSchedule(initHeight)
	)

	assert.NoError(t, sc.addPeer(peerID),
		"Adding a peer should return no error")
	assert.NoError(t, sc.setPeerHeight(peerID, peerHeight))
	for i := initHeight; i <= peerHeight; i++ {
		assert.Equal(t, blockStateNew, sc.getStateAtHeight(i),
			"Expected all blocks to be in blockStateNew")
		peerIDs := []p2p.ID{}
		for _, peer := range sc.getPeersAtHeight(i) {
			peerIDs = append(peerIDs, peer.peerID)
		}

		assert.Containsf(t, peerIDs, peerID,
			"Expected %s to have block %d", peerID, i)
	}
}

func TestTransitionPending(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerIDTwo  p2p.ID = "2"
		peerHeight int64  = 20
		sc                = newSchedule(initHeight)
		now               = time.Now()
	)

	assert.NoError(t, sc.addPeer(peerID),
		"Adding a peer should return no error")
	assert.Nil(t, sc.addPeer(peerIDTwo),
		"Adding a peer should return no error")

	assert.Error(t, sc.markPending(peerID, peerHeight, now),
		"Expected scheduling a block from a peer in peerStateNew to fail")

	assert.NoError(t, sc.setPeerHeight(peerID, peerHeight),
		"Expected setPeerHeight to return no error")
	assert.NoError(t, sc.setPeerHeight(peerIDTwo, peerHeight),
		"Expected setPeerHeight to return no error")

	assert.NoError(t, sc.markPending(peerID, peerHeight, now),
		"Expected markPending on a new block to succeed")
	assert.Error(t, sc.markPending(peerIDTwo, peerHeight, now),
		"Expected markPending by a second peer to fail")

	assert.Equal(t, blockStatePending, sc.getStateAtHeight(peerHeight),
		"Expected the block to be in blockStatePending")

	assert.NoError(t, sc.removePeer(peerID),
		"Expected removePeer to return no error")

	assert.Equal(t, blockStateNew, sc.getStateAtHeight(peerHeight),
		"Expected the block to be in blockStateNew")

	assert.Error(t, sc.markPending(peerID, peerHeight, now),
		"Expected markPending on a removed peer to fail")

	assert.NoError(t, sc.markPending(peerIDTwo, peerHeight, now),
		"Expected markPending on a ready peer to succeed")

	assert.Equal(t, blockStatePending, sc.getStateAtHeight(peerHeight),
		"Expected the block to be in blockStatePending")
}

func TestTransitionReceived(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerIDTwo  p2p.ID = "2"
		peerHeight int64  = 20
		blockSize  int64  = 1024
		sc                = newSchedule(initHeight)
		now               = time.Now()
		receivedAt        = now.Add(1 * time.Second)
	)

	assert.NoError(t, sc.addPeer(peerID),
		"Expected adding peer %s to succeed", peerID)
	assert.NoError(t, sc.addPeer(peerIDTwo),
		"Expected adding peer %s to succeed", peerIDTwo)
	assert.NoError(t, sc.setPeerHeight(peerID, peerHeight),
		"Expected setPeerHeight to return no error")
	assert.NoErrorf(t, sc.setPeerHeight(peerIDTwo, peerHeight),
		"Expected setPeerHeight on %s to %d to succeed", peerIDTwo, peerHeight)
	assert.NoError(t, sc.markPending(peerID, initHeight, now),
		"Expected markPending on a new block to succeed")

	assert.Error(t, sc.markReceived(peerIDTwo, initHeight, blockSize, receivedAt),
		"Expected markReceived from a non-requested peer to fail")

	assert.NoError(t, sc.markReceived(peerID, initHeight, blockSize, receivedAt),
		"Expected markReceived on a pending block to succeed")

	assert.Error(t, sc.markReceived(peerID, initHeight, blockSize, receivedAt),
		"Expected markReceived on a received block to fail")

	assert.Equalf(t, blockStateReceived, sc.getStateAtHeight(initHeight),
		"Expected block %d to be blockStateReceived", initHeight)

	assert.NoErrorf(t, sc.removePeer(peerID),
		"Expected removePeer removing %s to succeed", peerID)

	assert.Equalf(t, blockStateNew, sc.getStateAtHeight(initHeight),
		"Expected block %d to be blockStateNew", initHeight)

	assert.NoErrorf(t, sc.markPending(peerIDTwo, initHeight, now),
		"Expected markPending %d from %s to succeed", initHeight, peerIDTwo)
	assert.NoErrorf(t, sc.markReceived(peerIDTwo, initHeight, blockSize, receivedAt),
		"Expected markReceived %d from %s to succeed", initHeight, peerIDTwo)
	assert.Equalf(t, blockStateReceived, sc.getStateAtHeight(initHeight),
		"Expected block %d to be blockStateReceived", initHeight)
}

func TestTransitionProcessed(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerHeight int64  = 20
		blockSize  int64  = 1024
		sc                = newSchedule(initHeight)
		now               = time.Now()
		receivedAt        = now.Add(1 * time.Second)
	)

	assert.NoError(t, sc.addPeer(peerID),
		"Expected adding peer %s to succeed", peerID)
	assert.NoErrorf(t, sc.setPeerHeight(peerID, peerHeight),
		"Expected setPeerHeight on %s to %d to succeed", peerID, peerHeight)
	assert.NoError(t, sc.markPending(peerID, initHeight, now),
		"Expected markPending on a new block to succeed")
	assert.NoError(t, sc.markReceived(peerID, initHeight, blockSize, receivedAt),
		"Expected markReceived on a pending block to succeed")

	assert.Error(t, sc.markProcessed(initHeight+1),
		"Expected marking %d as processed to fail", initHeight+1)
	assert.NoError(t, sc.markProcessed(initHeight),
		"Expected marking %d as processed to succeed", initHeight)

	assert.Equalf(t, blockStateProcessed, sc.getStateAtHeight(initHeight),
		"Expected block %d to be blockStateProcessed", initHeight)

	assert.NoError(t, sc.removePeer(peerID),
		"Expected removing peer %s to succeed", peerID)

	assert.Equalf(t, blockStateProcessed, sc.getStateAtHeight(initHeight),
		"Expected block %d to be blockStateProcessed", initHeight)
}

func TestMinMaxHeight(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerHeight int64  = 20
		sc                = newSchedule(initHeight)
		now               = time.Now()
	)

	assert.Equal(t, initHeight, sc.minHeight(),
		"Expected min height to be the initialized height")

	assert.Equal(t, initHeight, sc.maxHeight(),
		"Expected max height to be the initialized height")

	assert.NoError(t, sc.addPeer(peerID),
		"Adding a peer should return no error")

	assert.NoError(t, sc.setPeerHeight(peerID, peerHeight),
		"Expected setPeerHeight to return no error")

	assert.Equal(t, peerHeight, sc.maxHeight(),
		"Expected max height to increase to peerHeight")

	assert.Nil(t, sc.markPending(peerID, initHeight, now.Add(1*time.Second)),
		"Expected marking initHeight as pending to return no error")

	assert.Equal(t, initHeight+1, sc.minHeight(),
		"Expected marking initHeight as pending to move minHeight forward")
}

func TestPeersSlowerThan(t *testing.T) {
	var (
		initHeight int64  = 5
		peerID     p2p.ID = "1"
		peerHeight int64  = 20
		blockSize  int64  = 1024
		sc                = newSchedule(initHeight)
		now               = time.Now()
		receivedAt        = now.Add(1 * time.Second)
	)

	assert.NoError(t, sc.addPeer(peerID),
		"Adding a peer should return no error")

	assert.NoError(t, sc.setPeerHeight(peerID, peerHeight),
		"Expected setPeerHeight to return no error")

	assert.NoError(t, sc.markPending(peerID, peerHeight, now),
		"Expected markPending to return no error")

	assert.NoError(t, sc.markReceived(peerID, peerHeight, blockSize, receivedAt),
		"Expected markReceived to return no error")

	assert.Empty(t, sc.peersSlowerThan(blockSize-1),
		"expected no peers to be slower than blockSize-1 bytes/sec")

	assert.Containsf(t, sc.peersSlowerThan(blockSize+1), peerID,
		"expected %s to be slower than blockSize+1 bytes/sec", peerID)
}
```

cmd/contract_tests/main.go (+34 -0)

@@ -0,0 +1,34 @@

```go
package main

import (
	"fmt"
	"strings"

	"github.com/snikch/goodman/hooks"
	"github.com/snikch/goodman/transaction"
)

func main() {
	// This must be compiled beforehand and passed to dredd as a parameter;
	// in the meantime, the server should be running.
	h := hooks.NewHooks()
	server := hooks.NewServer(hooks.NewHooksRunner(h))
	h.BeforeAll(func(t []*transaction.Transaction) {
		fmt.Println(t[0].Name)
	})
	h.BeforeEach(func(t *transaction.Transaction) {
		if strings.HasPrefix(t.Name, "Tx") ||
			// We need a proper example of evidence to broadcast
			strings.HasPrefix(t.Name, "Info > /broadcast_evidence") ||
			// We need a proper example of path and data
			strings.HasPrefix(t.Name, "ABCI > /abci_query") ||
			// We need to find a way to make a transaction before starting the tests;
			// that hash should replace the dummy one in the swagger file
			strings.HasPrefix(t.Name, "Info > /tx") {
			t.Skip = true
			fmt.Printf("%s has been skipped\n", t.Name)
		}
	})
	server.Serve()
	defer server.Listener.Close()
	fmt.Print("FINE")
}
```
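The `BeforeEach` hook above skips every Dredd transaction whose name starts with one of a handful of prefixes. A hypothetical refactoring sketch (not part of this commit) keeps those prefixes in a single table so the skip rule can be unit tested without starting the hooks server; `skippedPrefixes` and `shouldSkip` are illustrative names.

```go
package main

import (
	"fmt"
	"strings"
)

// skippedPrefixes holds the transaction-name prefixes the contract tests
// cannot exercise yet (copied from the BeforeEach hook).
var skippedPrefixes = []string{
	"Tx",
	"Info > /broadcast_evidence", // needs a real evidence example
	"ABCI > /abci_query",         // needs a real path and data
	"Info > /tx",                 // needs the hash of a committed transaction
}

// shouldSkip reports whether a Dredd transaction name matches any skipped prefix.
func shouldSkip(name string) bool {
	for _, prefix := range skippedPrefixes {
		if strings.HasPrefix(name, prefix) {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(shouldSkip("ABCI > /abci_query")) // true
	fmt.Println(shouldSkip("Info > /status"))     // false
}
```

Note that plain prefix matching means `"Info > /tx"` also skips any transaction whose name begins with `"Info > /tx_search"`, exactly as the original condition does.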


docs/architecture/adr-042-state-sync.md (+239 -0)

@@ -0,0 +1,239 @@

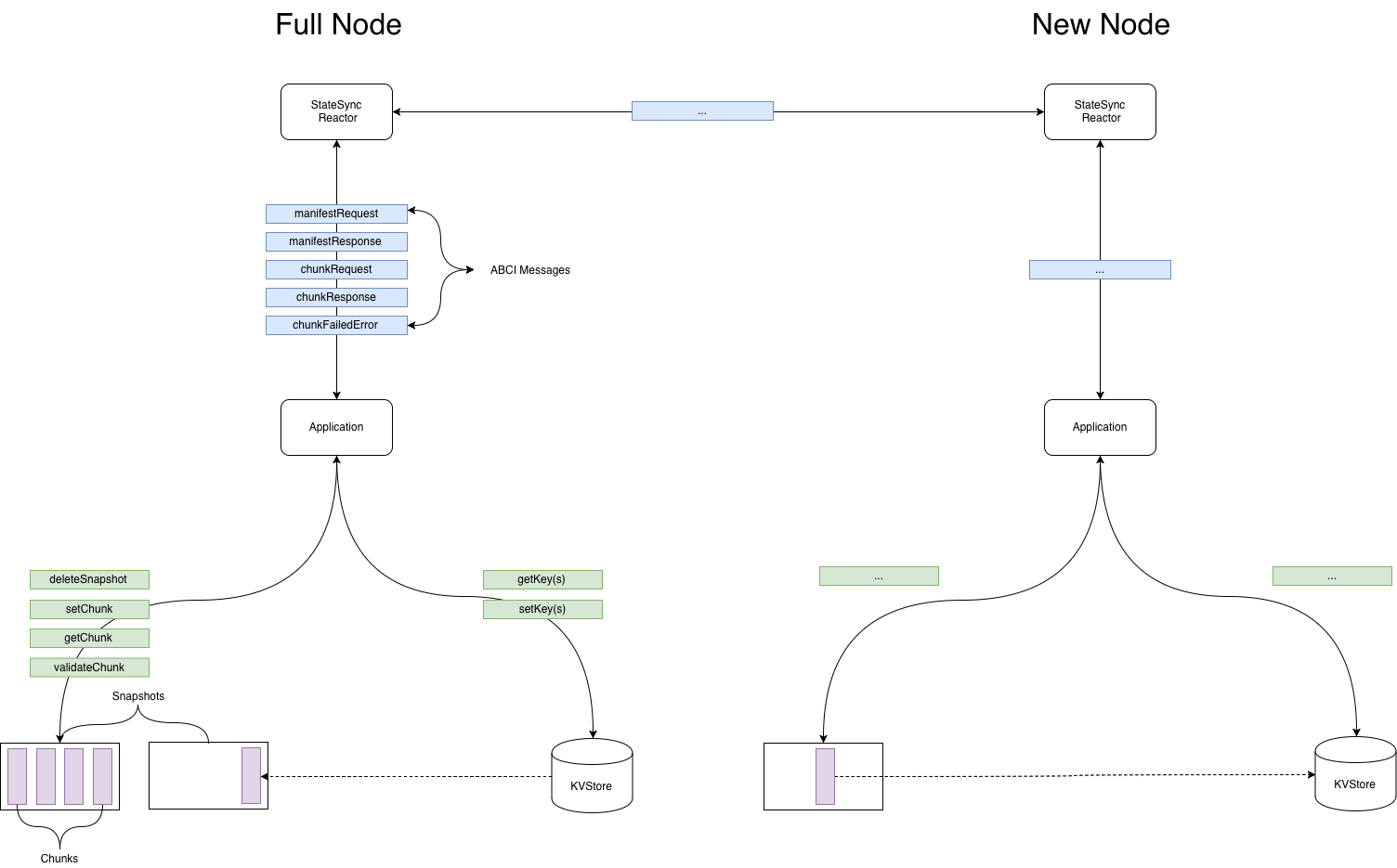

# ADR 042: State Sync Design

## Changelog

2019-06-27: Init by EB

2019-07-04: Follow up by brapse

## Context

StateSync is a feature which would allow a new node to receive a
snapshot of the application state without downloading blocks or going
through consensus. Once downloaded, the node could switch to FastSync
and eventually participate in consensus. The goal of StateSync is to
facilitate setting up a new node as quickly as possible.

## Considerations

Because Tendermint doesn't know anything about the application state,
StateSync will broker messages between nodes and through
the ABCI to an opaque application. The implementation will have multiple
touch points on both the Tendermint codebase and the ABCI application.

* A StateSync reactor to facilitate peer communication - Tendermint
* A set of ABCI messages to transmit application state to the reactor - Tendermint
* A set of MultiStore APIs for exposing snapshot data to the ABCI - ABCI application
* A storage format with validation and performance considerations - ABCI application

### Implementation Properties

Beyond the approach, any implementation of StateSync can be evaluated
across different criteria:

* Speed: Expected throughput of producing and consuming snapshots
* Safety: Cost of pushing invalid snapshots to a node
* Liveness: Cost of preventing a node from receiving/constructing a snapshot
* Effort: How much effort an implementation requires

### Implementation Questions

* What is the format of a snapshot?
  * Complete snapshot
  * Ordered IAVL key ranges
  * Individually compressed chunks which can be validated
* How is data validated?
  * Trust a peer with its data blindly
  * Trust a majority of peers
  * Use light client validation to validate each chunk against the
    consensus-produced Merkle tree root
* What are the performance characteristics?
  * Random vs sequential reads
  * How parallelizable is the scheduling algorithm

### Proposals

Broadly speaking, there are two approaches to this problem which have had
varying degrees of discussion and progress. These approaches can be
summarized as:

**Lazy:** Where snapshots are produced dynamically at request time. This
solution would use the existing data structure.

**Eager:** Where snapshots are produced periodically and served from disk at
request time. This solution would create an auxiliary data structure
optimized for batch read/writes.

| Additionally, the proposals tend to vary in how they provide safety | |||

| properties. | |||

| **LightClient:** Where a client can acquire the Merkle root from the block | |||

| headers synchronized from a trusted validator set. Subsets of the application state, | |||

| called chunks, can therefore be validated on receipt to ensure each chunk | |||

| is part of the Merkle root. | |||

| **Majority of Peers:** Where manifests of chunks along with checksums are | |||

| downloaded and compared against versions provided by a majority of | |||

| peers. | |||

| #### Lazy StateSync | |||

| An [initial specification](https://docs.google.com/document/d/15MFsQtNA0MGBv7F096FFWRDzQ1vR6_dics5Y49vF8JU/edit?ts=5a0f3629) was published by Alexis Sellier. | |||

| In this design, the state has a given `size` of primitive elements (like | |||

| keys or nodes), each element is assigned a number from 0 to `size-1`, | |||

| and chunks consist of a range of such elements. Ackratos raised | |||

| [some concerns](https://docs.google.com/document/d/1npGTAa1qxe8EQZ1wG0a0Sip9t5oX2vYZNUDwr_LVRR4/edit) | |||

| about this design, somewhat specific to the IAVL tree, and mainly concerning | |||

| the performance of random reads and of iterating through the tree to determine element numbers | |||

| (i.e. elements aren't indexed by the element number). | |||

| An alternative design was suggested by Jae Kwon in | |||

| [#3639](https://github.com/tendermint/tendermint/issues/3639) where chunking | |||

| happens lazily and in a dynamic way: nodes request key ranges from their peers, | |||

| and peers respond with some subset of the | |||

| requested range and with notes on how to request the rest in parallel from other | |||

| peers. Unlike chunk numbers, keys can be verified directly, and if some keys in the | |||

| range are omitted, proofs for the range will fail to verify. | |||

| This way a node can start by requesting the entire tree from one peer, | |||

| and that peer can respond with say the first few keys, and the ranges to request | |||

| from other peers. | |||
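The range-splitting idea above can be sketched in a few lines. This is a minimal illustration, not the design from #3639: it assumes the state is an in-memory sorted map of string keys, it omits range proofs entirely, and the names `LazyRangeSketch`, `RangeResponse` and `serve` and the per-reply `limit` are hypothetical. The peer returns at most `limit` keys from the requested half-open range, plus the key where the unserved remainder starts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;

final class LazyRangeSketch {
    /** Hypothetical reply: the keys served plus the part of the range left to fetch. */
    static final class RangeResponse {
        final List<String> keys;
        final String nextStart; // null when the whole requested range was served
        final String end;

        RangeResponse(List<String> keys, String nextStart, String end) {
            this.keys = keys;
            this.nextStart = nextStart;
            this.end = end;
        }
    }

    /** Serve at most `limit` keys from the half-open range [start, end) of a sorted state. */
    static RangeResponse serve(NavigableMap<String, String> state, String start, String end, int limit) {
        List<String> served = new ArrayList<>();
        for (Map.Entry<String, String> e : state.subMap(start, true, end, false).entrySet()) {
            if (served.size() == limit) {
                // Stop early and tell the requester where the unserved remainder begins.
                return new RangeResponse(served, e.getKey(), end);
            }
            served.add(e.getKey());
        }
        return new RangeResponse(served, null, end);
    }
}
```

A requester could then split `[nextStart, end)` into smaller sub-ranges and send each one to a different peer in parallel.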

| Additionally, per-chunk validation tends to come more naturally to the | |||

| Lazy approach since it tends to use the existing structure of the tree | |||

| (i.e. keys or nodes) rather than state-sync-specific chunks. Such a | |||

| design for Tendermint was originally tracked in | |||

| [#828](https://github.com/tendermint/tendermint/issues/828). | |||

| #### Eager StateSync | |||

| Parity implements | |||

| ["Warp Sync"](https://wiki.parity.io/Warp-Sync-Snapshot-Format.html) to rapidly | |||

| download both blocks and state snapshots from peers. Data is carved into ~4MB | |||

| chunks and snappy compressed. Hashes of snappy compressed chunks are stored in a | |||

| manifest file which co-ordinates the state-sync. Obtaining a correct manifest | |||

| file seems to require an honest majority of peers. This means you may not find | |||

| out the state is incorrect until you download the whole thing and compare it | |||

| with a verified block header. | |||
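The manifest mechanism can be illustrated with a small sketch. It is not Parity's snapshot format: it assumes fixed-size chunks hashed with SHA-256, skips snappy compression, and all names are hypothetical. Each chunk is checked against its manifest entry on receipt, which catches a corrupted chunk early; whether the manifest itself is correct still depends on where it came from, which is why the honest-majority assumption remains.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ManifestSketch {
    static byte[] sha256(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 should always be available", e);
        }
    }

    /** Split a serialized state into fixed-size chunks (the last one may be shorter). */
    static List<byte[]> chunk(byte[] state, int chunkSize) {
        List<byte[]> chunks = new ArrayList<>();
        for (int off = 0; off < state.length; off += chunkSize) {
            chunks.add(Arrays.copyOfRange(state, off, Math.min(state.length, off + chunkSize)));
        }
        return chunks;
    }

    /** In this sketch the manifest is simply the ordered list of chunk hashes. */
    static List<byte[]> manifest(List<byte[]> chunks) {
        List<byte[]> hashes = new ArrayList<>();
        for (byte[] c : chunks) {
            hashes.add(sha256(c));
        }
        return hashes;
    }

    /** Check one received chunk against its manifest entry. */
    static boolean verifyChunk(List<byte[]> manifest, int index, byte[] chunk) {
        return index >= 0 && index < manifest.size()
                && MessageDigest.isEqual(manifest.get(index), sha256(chunk));
    }
}
```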

| A similar solution was implemented by Binance in | |||

| [#3594](https://github.com/tendermint/tendermint/pull/3594) | |||

| based on their initial implementation in | |||

| [PR #3243](https://github.com/tendermint/tendermint/pull/3243) | |||

| and [some learnings](https://docs.google.com/document/d/1npGTAa1qxe8EQZ1wG0a0Sip9t5oX2vYZNUDwr_LVRR4/edit). | |||

| Note that this still requires the honest-majority-of-peers assumption. | |||

| As an eager protocol, warp sync can efficiently compress larger, more | |||

| predictable chunks once per snapshot and serve many new peers. By | |||

| comparison, lazy chunkers would have to compress each chunk at request | |||

| time. | |||

| ### Analysis of Lazy vs Eager | |||

| Lazy and Eager have more in common than they differ. Both require | |||

| reactors on the Tendermint side, a set of ABCI messages and a method for | |||

| serializing/deserializing snapshots facilitated by a SnapshotFormat. | |||

| The biggest difference between Lazy and Eager proposals is in the | |||

| read/write patterns necessitated by serving a snapshot chunk. | |||

| Specifically, Lazy State Sync performs random reads to the underlying data | |||

| structure while Eager can optimize for sequential reads. | |||

| This distinction between approaches was demonstrated by Binance's | |||

| [ackratos](https://github.com/ackratos) in their implementation of [Lazy | |||

| State sync](https://github.com/tendermint/tendermint/pull/3243), the | |||

| [analysis](https://docs.google.com/document/d/1npGTAa1qxe8EQZ1wG0a0Sip9t5oX2vYZNUDwr_LVRR4/) | |||

| of its performance, and the follow-up implementation of [Warp | |||

| Sync](http://github.com/tendermint/tendermint/pull/3594). | |||

| #### Comparing Security Models | |||

| There are several different security models which have been | |||

| discussed/proposed in the past but generally fall into two categories. | |||

| Light client validation: In which the node receiving data is expected to | |||

| first perform a light client sync and have all the necessary block | |||

| headers. The trusted block header (trusted in the sense that it was signed by a | |||

| validator set subject to [weak | |||

| subjectivity](https://github.com/tendermint/tendermint/pull/3795)) contains the | |||

| Merkle root, against which the node can compare any subset of keys, called a chunk. | |||

| The advantage of light client validation is that the block headers are | |||

| signed by validators which have something to lose for malicious | |||

| behaviour. If a validator were to provide an invalid proof, they can be | |||

| slashed. | |||
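Per-chunk validation against a trusted root can be sketched with a plain binary Merkle tree. This is an illustration under stated assumptions, not Tendermint's or IAVL's proof format: chunks are hashed as leaves with RFC 6962-style domain separation (prefix `0x00` for leaves, `0x01` for inner nodes) using SHA-256, and all names are hypothetical. A real application would prove chunks against its own state tree and the `AppHash` in a verified header.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;

final class MerkleChunkSketch {
    static byte[] sha256(byte[]... parts) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            for (byte[] p : parts) {
                md.update(p);
            }
            return md.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Domain separation: leaves and inner nodes are hashed with different prefixes.
    static byte[] leafHash(byte[] leaf) { return sha256(new byte[] {0x00}, leaf); }
    static byte[] innerHash(byte[] left, byte[] right) { return sha256(new byte[] {0x01}, left, right); }

    /** Largest power of two strictly less than n (n >= 2). */
    static int split(int n) { return Integer.highestOneBit(n - 1); }

    /** Root over leaves[from, to), assuming at least one leaf. */
    static byte[] root(List<byte[]> leaves, int from, int to) {
        int n = to - from;
        if (n == 1) {
            return leafHash(leaves.get(from));
        }
        int k = split(n);
        return innerHash(root(leaves, from, from + k), root(leaves, from + k, to));
    }

    /** Sibling hashes for leaves.get(index), ordered from the leaf up to the root. */
    static List<byte[]> proof(List<byte[]> leaves, int from, int to, int index) {
        if (to - from == 1) {
            return new ArrayList<>();
        }
        int k = split(to - from);
        List<byte[]> aunts;
        if (index < from + k) {
            aunts = proof(leaves, from, from + k, index);
            aunts.add(root(leaves, from + k, to));
        } else {
            aunts = proof(leaves, from + k, to, index);
            aunts.add(root(leaves, from, from + k));
        }
        return aunts;
    }

    /** Rebuild the root from one leaf hash and its aunts; null if the proof is malformed. */
    static byte[] computeRoot(int index, int total, byte[] leafHash, List<byte[]> aunts) {
        if (index < 0 || index >= total) {
            return null;
        }
        if (total == 1) {
            return aunts.isEmpty() ? leafHash : null;
        }
        if (aunts.isEmpty()) {
            return null;
        }
        int k = split(total);
        byte[] sibling = aunts.get(aunts.size() - 1); // sibling at the top of this subtree
        List<byte[]> rest = aunts.subList(0, aunts.size() - 1);
        if (index < k) {
            byte[] left = computeRoot(index, k, leafHash, rest);
            return left == null ? null : innerHash(left, sibling);
        }
        byte[] right = computeRoot(index - k, total - k, leafHash, rest);
        return right == null ? null : innerHash(sibling, right);
    }

    /** Accept a chunk only if it hashes up to the root taken from a trusted header. */
    static boolean verify(byte[] trustedRoot, byte[] chunk, int index, int total, List<byte[]> aunts) {
        byte[] computed = computeRoot(index, total, leafHash(chunk), aunts);
        return computed != null && MessageDigest.isEqual(trustedRoot, computed);
    }
}
```

Because each chunk carries its own proof, chunks can be checked independently and in any order, which is what makes parallel download from several peers safe under this model.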

| Majority of peer validation: A manifest file containing a list of chunks | |||

| along with checksums of each chunk is downloaded from a | |||

| trusted source. That source can be a community resource similar to | |||

| [sum.golang.org](https://sum.golang.org) or downloaded from the majority | |||

| of peers. One disadvantage of the majority of peer security model is the | |||

| vulnerability to eclipse attacks, in which a malicious user looks to | |||

| saturate a target node's peer list and manufacture a false picture of | |||

| majority. | |||
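The majority-of-peers rule, and why an eclipse attack defeats it, fits in a short sketch. It is hypothetical: each connected peer is assumed to report a single manifest hash, and the node accepts a hash only when a strict majority of its peers agree. The class and method names are illustrative.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

final class MajorityManifestSketch {
    /** Returns the manifest hash reported by a strict majority of peers, if any. */
    static Optional<String> majorityHash(List<String> reportedHashes) {
        Map<String, Integer> counts = new HashMap<>();
        for (String h : reportedHashes) {
            counts.merge(h, 1, Integer::sum);
        }
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            // Strict majority: more than half of all reports.
            if (e.getValue() * 2 > reportedHashes.size()) {
                return Optional.of(e.getKey());
            }
        }
        return Optional.empty();
    }
}
```

The check only measures agreement among the peers a node happens to be connected to; if an attacker controls most of those connections, the "majority" is simply the attacker's manifest.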

| A third option would be to include snapshot-related data in the | |||

| block header. This could include the manifest with related checksums and be | |||

| secured through consensus. One challenge of this approach is to | |||

| ensure that creating snapshots does not put undue burden on block | |||

| proposers, as it would if snapshot creation were synchronized with block creation. One | |||

| approach to minimizing the burden is for snapshots for height | |||

| `H` to be included in block `H+n`, where `n` is some number of blocks later, | |||

| giving the block proposer enough time to complete the snapshot | |||

| asynchronously. | |||

| ## Proposal: Eager StateSync With Per Chunk Light Client Validation | |||

| The conclusion after some consideration of the advantages/disadvantages of | |||

| eager/lazy and different security models is to produce a state sync | |||

| which eagerly produces snapshots and uses light client validation. This | |||

| approach has the performance advantages of pre-computing efficient | |||

| snapshots which can be streamed to new nodes on demand using sequential IO. | |||

| Secondly, by using light client validation we can validate each chunk on | |||

| receipt and avoid the potential eclipse attack of majority-of-peers-based | |||

| security. | |||

| ### Implementation | |||

| Tendermint is responsible for downloading and verifying chunks of | |||

| AppState from peers. The ABCI application is responsible for taking | |||

| AppStateChunk objects from TM and constructing a valid state tree whose | |||

| root corresponds with the AppHash of the syncing block. In particular, we | |||

| will need to implement: | |||

| * A new StateSync reactor that brokers message transmission between the peers | |||

|   and the ABCI application | |||

| * A set of ABCI messages | |||

| * A SnapshotFormat, designed as an interface which can: | |||

|   * validate chunks | |||

|   * read/write chunks from file | |||

|   * read/write chunks to/from the application state store | |||

|   * convert manifests into chunkRequest ABCI messages | |||

| * A SnapshotFormat implementation for cosmos-hub with concrete support for: | |||

|   * reading/writing chunks in a way which can be: | |||

|     * parallelized across peers | |||

|     * validated on receipt | |||

|   * reading/writing to/from the IAVL+ tree | |||
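The capabilities listed for `SnapshotFormat` might take roughly the following shape. This is a hypothetical Java rendering for illustration only (Tendermint itself is written in Go), and every name in it (`SnapshotFormat`, `ChunkRequest`, `InMemoryFormat`, the `chunk-<index>` file naming) is an assumption. The toy implementation validates chunks by SHA-256 hash against a manifest and uses an in-memory map in place of the application store.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical request for one chunk, as might be relayed to peers. */
final class ChunkRequest {
    final long height;
    final int index;
    ChunkRequest(long height, int index) { this.height = height; this.index = index; }
}

/** Hypothetical shape of the SnapshotFormat capabilities listed in the ADR. */
interface SnapshotFormat {
    boolean validateChunk(int index, byte[] chunk);
    void writeChunkToFile(Path dir, int index, byte[] chunk) throws IOException;
    byte[] readChunkFromFile(Path dir, int index) throws IOException;
    void applyChunk(int index, byte[] chunk);      // write into the application store
    byte[] readChunkFromStore(int index);          // read back from the application store
    List<ChunkRequest> chunkRequests(long height); // manifest -> chunk requests
}

/** Toy implementation: chunks are validated by SHA-256 against a manifest. */
final class InMemoryFormat implements SnapshotFormat {
    private final List<byte[]> manifest;           // expected hash per chunk index
    private final Map<Integer, byte[]> store = new TreeMap<>();

    InMemoryFormat(List<byte[]> manifest) { this.manifest = manifest; }

    static byte[] sha256(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    public boolean validateChunk(int index, byte[] chunk) {
        return index >= 0 && index < manifest.size()
                && MessageDigest.isEqual(manifest.get(index), sha256(chunk));
    }

    public void writeChunkToFile(Path dir, int index, byte[] chunk) throws IOException {
        Files.write(dir.resolve("chunk-" + index), chunk);
    }

    public byte[] readChunkFromFile(Path dir, int index) throws IOException {
        return Files.readAllBytes(dir.resolve("chunk-" + index));
    }

    public void applyChunk(int index, byte[] chunk) {
        // Never let an unvalidated chunk reach the application store.
        if (!validateChunk(index, chunk)) {
            throw new IllegalArgumentException("invalid chunk " + index);
        }
        store.put(index, chunk.clone());
    }

    public byte[] readChunkFromStore(int index) {
        byte[] c = store.get(index);
        return c == null ? null : c.clone();
    }

    public List<ChunkRequest> chunkRequests(long height) {
        List<ChunkRequest> reqs = new ArrayList<>();
        for (int i = 0; i < manifest.size(); i++) {
            reqs.add(new ChunkRequest(height, i));
        }
        return reqs;
    }
}
```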

|  | |||

| ## Implementation Path | |||

| * Create StateSync reactor based on [#3753](https://github.com/tendermint/tendermint/pull/3753) | |||

| * Design SnapshotFormat with an eye towards cosmos-hub implementation | |||

| * ABCI message to send/receive SnapshotFormat | |||

| * IAVL+ changes to support SnapshotFormat | |||

| * Deliver Warp sync (no chunk validation) | |||

| * Light client implementation for weak subjectivity | |||

| * Deliver StateSync with chunk validation | |||

| ## Status | |||

| Proposed | |||

| ## Consequences | |||

| ### Neutral | |||

| ### Positive | |||

| * Safe & performant state sync design substantiated with real world implementation experience | |||

| * General interfaces allowing application specific innovation | |||

| * Parallelizable implementation trajectory with reasonable engineering effort | |||

| ### Negative | |||

| * Static Scheduling lacks opportunity for real time chunk availability optimizations | |||

| ## References | |||

| [sync: Sync current state without full replay for Applications](https://github.com/tendermint/tendermint/issues/828) - original issue | |||

| [tendermint state sync proposal](https://docs.google.com/document/d/15MFsQtNA0MGBv7F096FFWRDzQ1vR6_dics5Y49vF8JU/edit?ts=5a0f3629) - Cloudhead proposal | |||

| [tendermint state sync proposal 2](https://docs.google.com/document/d/1npGTAa1qxe8EQZ1wG0a0Sip9t5oX2vYZNUDwr_LVRR4/edit) - ackratos proposal | |||

| [proposal 2 implementation](https://github.com/tendermint/tendermint/pull/3243) - ackratos implementation | |||

| [WIP General/Lazy State-Sync pseudo-spec](https://github.com/tendermint/tendermint/issues/3639) - Jae proposal | |||

| [Warp Sync Implementation](https://github.com/tendermint/tendermint/pull/3594) - ackratos | |||

| [Chunk Proposal](https://github.com/tendermint/tendermint/pull/3799) - Bucky proposal | |||

+ 141

- 0

docs/architecture/adr-044-lite-client-with-weak-subjectivity.md


| @ -0,0 +1,141 @@ | |||

| # ADR 044: Lite Client with Weak Subjectivity | |||

| ## Changelog | |||

| * 13-07-2019: Initial draft | |||

| * 14-08-2019: Address cwgoes comments | |||

| ## Context | |||

| The concept of light clients was introduced in the Bitcoin white paper. It | |||

| describes a watcher of a distributed consensus process that only validates the | |||

| consensus algorithm and not the state machine transactions within. | |||

| Tendermint light clients allow bandwidth & compute-constrained devices, such as smartphones, low-power embedded chips, or other blockchains to | |||

| efficiently verify the consensus of a Tendermint blockchain. This forms the | |||

| basis of safe and efficient state synchronization for new network nodes and | |||

| inter-blockchain communication (where a light client of one Tendermint instance | |||

| runs in another chain's state machine). | |||

| In a network that is expected to reliably punish validators for misbehavior | |||

| by slashing bonded stake and where the validator set changes | |||

| infrequently, clients can take advantage of this assumption to safely | |||

| synchronize a lite client without downloading the intervening headers. | |||

| Light clients (and full nodes) operating in the Proof of Stake context need a | |||

| trusted block height from a trusted source that is no older than one unbonding | |||

| window plus a configurable evidence submission synchrony bound. This is called “weak subjectivity”. | |||

| Weak subjectivity is required in Proof of Stake blockchains because it is | |||

| costless for an attacker to buy up voting keys that are no longer bonded and | |||

| fork the network at some point in its prior history. See Vitalik’s post at | |||

| [Proof of Stake: How I Learned to Love Weak | |||

| Subjectivity](https://blog.ethereum.org/2014/11/25/proof-stake-learned-love-weak-subjectivity/). | |||

| Currently, Tendermint provides a lite client implementation in the | |||

| [lite](https://github.com/tendermint/tendermint/tree/master/lite) package. This | |||

| lite client implements a bisection algorithm that tries to use a binary search | |||

| to find the minimum number of block headers where the validator set voting | |||

| power changes are less than 1/3. This interface does not support weak | |||

| subjectivity at this time. The Cosmos SDK also does not support counterfactual | |||

| slashing, nor does the lite client have any capacity to report evidence, making | |||

| these systems *theoretically unsafe*. | |||

| NOTE: Tendermint provides a somewhat different (stronger) light client model | |||

| than Bitcoin under eclipse, since the eclipsing node(s) can only fool the light | |||

| client if they have two-thirds of the private keys from the last root-of-trust. | |||

| ## Decision | |||

| ### The Weak Subjectivity Interface | |||

| Add the weak subjectivity interface for when a new light client connects to the | |||

| network or when a light client that has been offline for longer than the | |||

| unbonding period connects to the network. Specifically, the node needs to | |||

| initialize the following structure from user input before syncing: | |||

| ```go | |||

| type TrustOptions struct { | |||

| // Required: only trust commits up to this old. | |||

| // Should be equal to the unbonding period minus some delta for evidence reporting. | |||

| TrustPeriod time.Duration `json:"trust-period"` | |||

| // Option 1: TrustHeight and TrustHash can both be provided | |||

| // to force the trusting of a particular height and hash. | |||

| // If the latest trusted height/hash is more recent, then this option is | |||

| // ignored. | |||

| TrustHeight int64 `json:"trust-height"` | |||

| TrustHash []byte `json:"trust-hash"` | |||

| // Option 2: Callback can be set to implement a confirmation | |||

| // step if the trust store is uninitialized, or expired. | |||

| Callback func(height int64, hash []byte) error | |||

| } | |||

| ``` | |||

| The expectation is that the user will get this information from a trusted source | |||

| like a validator, a friend, or a secure website. A more user-friendly | |||

| solution, with trust tradeoffs, is to establish an HTTPS-based protocol with | |||

| a default endpoint that populates this information. Also, an on-chain registry | |||

| of roots-of-trust (e.g. on the Cosmos Hub) seems likely in the future. | |||
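To make the `TrustPeriod` comment concrete, here is a minimal sketch of the freshness check a light client might run before relying on a root of trust. It is written in Java for illustration (the structure above is Go), and the names `TrustPeriodSketch`, `trustPeriod` and `isWithinTrustPeriod` are hypothetical.

```java
import java.time.Duration;
import java.time.Instant;

final class TrustPeriodSketch {
    /** Per the TrustOptions comment: the unbonding period minus a delta for evidence reporting. */
    static Duration trustPeriod(Duration unbondingPeriod, Duration evidenceDelta) {
        return unbondingPeriod.minus(evidenceDelta);
    }

    /** A trusted header can anchor verification only while it is younger than the trust period. */
    static boolean isWithinTrustPeriod(Instant trustedHeaderTime, Instant now, Duration trustPeriod) {
        return trustedHeaderTime.plus(trustPeriod).isAfter(now);
    }
}
```

For example, with an illustrative 21-day unbonding period and a 1-day evidence delta, the trust period is 20 days; once the trusted header is that old, the client needs a fresh `TrustHeight`/`TrustHash` or the `Callback` confirmation step.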

| ### Linear Verification | |||

| The linear verification algorithm requires downloading all headers | |||

| between the `TrustHeight` and the `LatestHeight`. The lite client downloads the | |||

| full header for the provided `TrustHeight` and then, for each trusted header `N`, downloads header `N+1` | |||

| and applies the [Tendermint validation | |||

| rules](https://github.com/tendermint/tendermint/blob/master/docs/spec/blockchain/blockchain.md#validation) | |||

| to it, until it reaches `LatestHeight`. | |||
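The linear algorithm can be sketched as a loop over consecutive headers. This is a deliberately simplified model, not the Tendermint validation rules: the hypothetical `Header` carries only a height, the hashes of the current and next validator sets, and the signed versus total voting power; a real client verifies signatures and many more fields.

```java
import java.util.List;

final class LinearVerifySketch {
    /** Hypothetical, heavily simplified header: no signatures, just hashes and voting power. */
    static final class Header {
        final long height;
        final String validatorsHash;     // hash of the validator set that signs this header
        final String nextValidatorsHash; // hash of the validator set for height + 1
        final long signedPower;          // voting power that signed this header's commit
        final long totalPower;           // total voting power of this header's validator set

        Header(long height, String validatorsHash, String nextValidatorsHash, long signedPower, long totalPower) {
            this.height = height;
            this.validatorsHash = validatorsHash;
            this.nextValidatorsHash = nextValidatorsHash;
            this.signedPower = signedPower;
            this.totalPower = totalPower;
        }
    }

    /** Walk headers N+1, N+2, ... from a trusted header N; returns the last verified height. */
    static long verifyLinear(Header trusted, List<Header> following) {
        Header current = trusted;
        for (Header h : following) {
            if (h.height != current.height + 1) {
                throw new IllegalArgumentException("expected height " + (current.height + 1) + ", got " + h.height);
            }
            // The trusted header commits to the validator set of the next height.
            if (!h.validatorsHash.equals(current.nextValidatorsHash)) {
                throw new IllegalStateException("unexpected validator set at height " + h.height);
            }
            // Require more than 2/3 of the voting power, using integers to avoid rounding.
            if (3 * h.signedPower <= 2 * h.totalPower) {
                throw new IllegalStateException("insufficient voting power at height " + h.height);
            }
            current = h;
        }
        return current.height;
    }
}
```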

| ### Bisecting Verification | |||

| Bisecting Verification is a more bandwidth- and compute-efficient mechanism that, | |||

| in the most optimistic case, requires a light client to download only two block | |||

| headers to come into synchronization. | |||

| The bisection algorithm proceeds in the following fashion. The client downloads | |||

| and verifies the full block header for `TrustHeight` and then fetches the | |||

| `LatestHeight` block header. The client then verifies the `LatestHeight` | |||

| header. Finally, the client attempts to verify the `LatestHeight` header with | |||

| voting powers taken from `NextValidatorSet` in the `TrustHeight` header. This | |||

| verification will succeed if the validators from `TrustHeight` still have more than 2/3 | |||

| of the voting power in the `LatestHeight`. If this succeeds, the client is fully | |||

| synchronized. If this fails, then the following bisection algorithm should be | |||

| executed. | |||

| The client downloads the block at the midpoint between | |||

| `LatestHeight` and `TrustHeight` and applies the same algorithm as above | |||

| using `MidPointHeight` instead of `LatestHeight` and a different threshold: | |||

| more than 1/3 of the voting power for *non-adjacent headers*. In case of failure, | |||

| recursively perform the `MidPoint` verification until success, then start over | |||

| with an updated `NextValidatorSet` and `TrustHeight`. | |||
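The bisection loop can be sketched separately from the trust checks themselves. In this hypothetical sketch, `canTrust.test(trusted, candidate)` stands in for the voting-power checks described above; it is an assumption, not a real API. The loop halves the distance to the target until the trusted validators vouch for a header, then starts over from that header.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;

final class BisectionSketch {
    /**
     * Returns the heights the client ends up trusting, in order, finishing at targetHeight.
     * canTrust.test(trusted, candidate) is a hypothetical stand-in for the voting-power checks.
     */
    static List<Long> bisect(long trustedHeight, long targetHeight, BiPredicate<Long, Long> canTrust) {
        List<Long> trustedPath = new ArrayList<>();
        long trusted = trustedHeight;
        while (trusted < targetHeight) {
            long candidate = targetHeight;
            // Halve the jump until the currently trusted validators vouch for the candidate.
            while (!canTrust.test(trusted, candidate)) {
                if (candidate == trusted + 1) {
                    throw new IllegalStateException("cannot verify adjacent header " + candidate);
                }
                candidate = trusted + (candidate - trusted) / 2;
            }
            trustedPath.add(candidate);
            trusted = candidate; // start over from the newly trusted header
        }
        return trustedPath;
    }
}
```

With `canTrust` always true, `bisect(0, 100, ...)` returns just `[100]`, the optimistic two-header case; a stricter predicate yields more intermediate heights.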

| If the client encounters a forged header, it should submit the header along | |||

| with some other intermediate headers as the evidence of misbehavior to other | |||

| full nodes. After that, it can retry the bisection using another full node. An | |||

| optimal client will cache trusted headers from the previous run to minimize | |||

| network usage. | |||

| --- | |||

| Check out the formal specification | |||

| [here](https://github.com/tendermint/tendermint/blob/master/docs/spec/consensus/light-client.md). | |||

| ## Status | |||

| Accepted. | |||

| ## Consequences | |||

| ### Positive | |||

| * light client which is safe to use (it can go offline, but not for too long) | |||

| ### Negative | |||

| * complexity of bisection | |||

| ### Neutral | |||

| * social consensus can be prone to errors (for cases where a new light client | |||

| joins a network or it has been offline for too long) | |||

BIN

docs/architecture/img/state-sync.png


+ 600

- 0

docs/guides/java.md


| @ -0,0 +1,600 @@ | |||

| # Creating an application in Java | |||

| ## Guide Assumptions | |||

| This guide is designed for beginners who want to get started with a Tendermint | |||

| Core application from scratch. It does not assume that you have any prior | |||

| experience with Tendermint Core. | |||

| Tendermint Core is Byzantine Fault Tolerant (BFT) middleware that takes a state | |||

| transition machine (your application) - written in any programming language - and securely | |||

| replicates it on many machines. | |||

| By following along with this guide, you'll create a Tendermint Core project | |||

| called kvstore, a (very) simple distributed BFT key-value store. The application, which | |||

| implements the Application BlockChain Interface (ABCI), will be written in Java. | |||

| This guide assumes that you are not new to the JVM world. If you are new, please see [JVM Minimal Survival Guide](https://hadihariri.com/2013/12/29/jvm-minimal-survival-guide-for-the-dotnet-developer/#java-the-language-java-the-ecosystem-java-the-jvm) and [Gradle Docs](https://docs.gradle.org/current/userguide/userguide.html). | |||

| ## Built-in app vs external app | |||

| If you use Golang, you can run your app and Tendermint Core in the same process to get maximum performance. | |||

| [Cosmos SDK](https://github.com/cosmos/cosmos-sdk) is written this way. | |||

| Please refer to [Writing a built-in Tendermint Core application in Go](./go-built-in.md) guide for details. | |||

| If you choose another language, like we did in this guide, you have to write a separate app, | |||

| which will communicate with Tendermint Core via a socket (UNIX or TCP) or gRPC. | |||

| This guide will show you how to build an external application using a gRPC server. | |||

| Having a separate application might give you better security guarantees as two | |||

| processes would be communicating via an established binary protocol. Tendermint | |||

| Core will not have access to the application's state. | |||

| ## 1.1 Installing Java and Gradle | |||

| Please refer to [Oracle's guide for installing the JDK](https://www.oracle.com/technetwork/java/javase/downloads/index.html). | |||

| Verify that you have installed Java successfully: | |||

| ```sh | |||

| $ java -version | |||

| java version "12.0.2" 2019-07-16 | |||

| Java(TM) SE Runtime Environment (build 12.0.2+10) | |||

| Java HotSpot(TM) 64-Bit Server VM (build 12.0.2+10, mixed mode, sharing) | |||

| ``` | |||

| You can choose any version of Java 10 or higher, since the code below uses `var` (introduced in Java 10). | |||

| This guide is written using Java SE Development Kit 12. | |||

| Make sure you have `$JAVA_HOME` environment variable set: | |||

| ```sh | |||

| $ echo $JAVA_HOME | |||

| /Library/Java/JavaVirtualMachines/jdk-12.0.2.jdk/Contents/Home | |||

| ``` | |||

| For Gradle installation, please refer to [their official guide](https://gradle.org/install/). | |||

| ## 1.2 Creating a new Java project | |||

| We'll start by creating a new Gradle project. | |||

| ```sh | |||

| $ export KVSTORE_HOME=~/kvstore | |||

| $ mkdir $KVSTORE_HOME | |||

| $ cd $KVSTORE_HOME | |||

| ``` | |||

| Inside the project directory, run: | |||

| ```sh | |||

| gradle init --dsl groovy --package io.example --project-name example --type java-application --test-framework junit | |||

| ``` | |||

| This will create a new project for you. The tree of files should look like: | |||

| ```sh | |||

| $ tree | |||

| . | |||

| |-- build.gradle | |||

| |-- gradle | |||

| | `-- wrapper | |||

| | |-- gradle-wrapper.jar | |||

| | `-- gradle-wrapper.properties | |||

| |-- gradlew | |||

| |-- gradlew.bat | |||

| |-- settings.gradle | |||

| `-- src | |||

| |-- main | |||

| | |-- java | |||

| | | `-- io | |||

| | | `-- example | |||

| | | `-- App.java | |||

| | `-- resources | |||

| `-- test | |||

| |-- java | |||

| | `-- io | |||

| | `-- example | |||

| | `-- AppTest.java | |||

| `-- resources | |||

| ``` | |||

| When run, the generated application should print "Hello world." to the standard output. | |||

| ```sh | |||

| $ ./gradlew run | |||

| > Task :run | |||

| Hello world. | |||

| ``` | |||

| ## 1.3 Writing a Tendermint Core application | |||

| Tendermint Core communicates with the application through the Application | |||

| BlockChain Interface (ABCI). All message types are defined in the [protobuf | |||

| file](https://github.com/tendermint/tendermint/blob/develop/abci/types/types.proto). | |||

| This allows Tendermint Core to run applications written in any programming | |||

| language. | |||

| ### 1.3.1 Compile .proto files | |||

| Add the following snippet to the top of `build.gradle`: | |||

| ```groovy | |||

| buildscript { | |||

| repositories { | |||

| mavenCentral() | |||

| } | |||

| dependencies { | |||

| classpath 'com.google.protobuf:protobuf-gradle-plugin:0.8.8' | |||

| } | |||

| } | |||

| ``` | |||

| Enable the protobuf plugin in the `plugins` section of `build.gradle`: | |||

| ```groovy | |||

| plugins { | |||

| id 'com.google.protobuf' version '0.8.8' | |||

| } | |||

| ``` | |||

| Add the following code to `build.gradle`: | |||

| ```groovy | |||

| protobuf { | |||

| protoc { | |||

| artifact = "com.google.protobuf:protoc:3.7.1" | |||

| } | |||

| plugins { | |||

| grpc { | |||

| artifact = 'io.grpc:protoc-gen-grpc-java:1.22.1' | |||

| } | |||

| } | |||

| generateProtoTasks { | |||

| all()*.plugins { | |||

| grpc {} | |||

| } | |||

| } | |||

| } | |||

| ``` | |||

| Now we should be ready to compile the `*.proto` files. | |||

| Copy the necessary `.proto` files to your project: | |||

| ```sh | |||

| mkdir -p \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/abci/types \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/crypto/merkle \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/libs/common \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/gogo/protobuf/gogoproto | |||

| cp $GOPATH/src/github.com/tendermint/tendermint/abci/types/types.proto \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/abci/types/types.proto | |||

| cp $GOPATH/src/github.com/tendermint/tendermint/crypto/merkle/merkle.proto \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/crypto/merkle/merkle.proto | |||

| cp $GOPATH/src/github.com/tendermint/tendermint/libs/common/types.proto \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/tendermint/tendermint/libs/common/types.proto | |||

| cp $GOPATH/src/github.com/gogo/protobuf/gogoproto/gogo.proto \ | |||

| $KVSTORE_HOME/src/main/proto/github.com/gogo/protobuf/gogoproto/gogo.proto | |||

| ``` | |||

| Add these dependencies to `build.gradle`: | |||

| ```groovy | |||

| dependencies { | |||

| implementation 'io.grpc:grpc-protobuf:1.22.1' | |||

| implementation 'io.grpc:grpc-netty-shaded:1.22.1' | |||

| implementation 'io.grpc:grpc-stub:1.22.1' | |||

| } | |||

| ``` | |||

| To generate all protobuf-type classes run: | |||

| ```sh | |||

| ./gradlew generateProto | |||

| ``` | |||

| To verify that everything went smoothly, you can inspect the `build/generated/` directory: | |||

| ```sh | |||

| $ tree build/generated/ | |||

| build/generated/ | |||

| |-- source | |||

| | `-- proto | |||

| | `-- main | |||

| | |-- grpc | |||

| | | `-- types | |||

| | | `-- ABCIApplicationGrpc.java | |||

| | `-- java | |||

| | |-- com | |||

| | | `-- protobuf | |||

| | | `-- GoGoProtos.java | |||

| | |-- common | |||

| | | `-- Types.java | |||

| | |-- merkle | |||

| | | `-- Merkle.java | |||

| | `-- types | |||

| | `-- Types.java | |||

| ``` | |||

| ### 1.3.2 Implementing ABCI | |||

| The resulting `$KVSTORE_HOME/build/generated/source/proto/main/grpc/types/ABCIApplicationGrpc.java` file | |||

| contains the abstract class `ABCIApplicationImplBase`, which is the interface we'll need to implement. | |||

| Create `$KVSTORE_HOME/src/main/java/io/example/KVStoreApp.java` file with the following content: | |||

| ```java | |||

| package io.example; | |||

| import io.grpc.stub.StreamObserver; | |||

| import types.ABCIApplicationGrpc; | |||

| import types.Types.*; | |||

| class KVStoreApp extends ABCIApplicationGrpc.ABCIApplicationImplBase { | |||

| // methods implementation | |||

| } | |||

| ``` | |||

| Now we will go through each method of `ABCIApplicationImplBase`, explaining when it's called and adding | |||

| the required business logic. | |||

| ### 1.3.3 CheckTx | |||

| When a new transaction is added to Tendermint Core, it will ask the | |||

| application to check it (validate the format, signatures, etc.). | |||

| ```java | |||

| @Override | |||

| public void checkTx(RequestCheckTx req, StreamObserver<ResponseCheckTx> responseObserver) { | |||

| var tx = req.getTx(); | |||

| int code = validate(tx); | |||

| var resp = ResponseCheckTx.newBuilder() | |||

| .setCode(code) | |||

| .setGasWanted(1) | |||

| .build(); | |||

| responseObserver.onNext(resp); | |||

| responseObserver.onCompleted(); | |||

| } | |||

| private int validate(ByteString tx) { | |||

| List<byte[]> parts = split(tx, '='); | |||

| if (parts.size() != 2) { | |||

| return 1; | |||

| } | |||

| byte[] key = parts.get(0); | |||

| byte[] value = parts.get(1); | |||

| // check if the same key=value already exists | |||

| var stored = getPersistedValue(key); | |||

| if (stored != null && Arrays.equals(stored, value)) { | |||

| return 2; | |||

| } | |||

| return 0; | |||

| } | |||

| private List<byte[]> split(ByteString tx, char separator) { | |||

| var arr = tx.toByteArray(); | |||

| int i; | |||

| for (i = 0; i < tx.size(); i++) { | |||

| if (arr[i] == (byte)separator) { | |||

| break; | |||

| } | |||

| } | |||

| if (i == tx.size()) { | |||

| return Collections.emptyList(); | |||

| } | |||

| return List.of( | |||

| tx.substring(0, i).toByteArray(), | |||

| tx.substring(i + 1).toByteArray() | |||

| ); | |||

| } | |||

| ``` | |||

| Don't worry if this does not compile yet. | |||

| If the transaction does not have the form `{bytes}={bytes}`, we return code | |||

| `1`. When the same key=value already exists (same key and value), we return code | |||

| `2`. Otherwise, we return a zero code, indicating that the transaction is valid. | |||

| Note that anything with a non-zero code will be considered invalid (`-1`, `100`, | |||

| etc.) by Tendermint Core. | |||
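The response codes can be exercised without gRPC or Xodus. This hypothetical, standard-library-only restatement of `validate` swaps the database for an in-memory `Map` and decodes keys and values as UTF-8 strings for convenience; the constant names `OK`, `BAD_FORMAT` and `ALREADY_EXISTS` are illustrative labels for the guide's `0`, `1` and `2`.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;

final class TxCodes {
    static final int OK = 0;
    static final int BAD_FORMAT = 1;
    static final int ALREADY_EXISTS = 2;

    /** Same rules as the guide's validate(), but checked against an in-memory map. */
    static int validate(byte[] tx, Map<String, String> persisted) {
        int sep = -1;
        for (int i = 0; i < tx.length; i++) {
            if (tx[i] == (byte) '=') {
                sep = i; // split at the first '=', as the guide's split() does
                break;
            }
        }
        if (sep < 0) {
            return BAD_FORMAT; // not of the form {bytes}={bytes}
        }
        String key = new String(tx, 0, sep, StandardCharsets.UTF_8);
        String value = new String(tx, sep + 1, tx.length - sep - 1, StandardCharsets.UTF_8);
        if (value.equals(persisted.get(key))) {
            return ALREADY_EXISTS; // identical key=value is already stored
        }
        return OK;
    }
}
```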

| Valid transactions will eventually be committed given they are not too big and | |||

| have enough gas. To learn more about gas, check out ["the | |||

| specification"](https://tendermint.com/docs/spec/abci/apps.html#gas). | |||

| For the underlying key-value store we'll use | |||

| [JetBrains Xodus](https://github.com/JetBrains/xodus), which is a transactional schema-less embedded high-performance database written in Java. | |||

| `build.gradle`: | |||

| ```groovy | |||

| dependencies { | |||

| implementation 'org.jetbrains.xodus:xodus-environment:1.3.91' | |||

| } | |||

| ``` | |||

| ```java | |||

| ... | |||

| import jetbrains.exodus.ArrayByteIterable; | |||

| import jetbrains.exodus.ByteIterable; | |||

| import jetbrains.exodus.env.Environment; | |||

| import jetbrains.exodus.env.Store; | |||

| import jetbrains.exodus.env.StoreConfig; | |||

| import jetbrains.exodus.env.Transaction; | |||

| class KVStoreApp extends ABCIApplicationGrpc.ABCIApplicationImplBase { | |||

| private Environment env; | |||

| private Transaction txn = null; | |||

| private Store store = null; | |||

| KVStoreApp(Environment env) { | |||

| this.env = env; | |||

| } | |||

| ... | |||

| private byte[] getPersistedValue(byte[] k) { | |||

| return env.computeInReadonlyTransaction(txn -> { | |||

| var store = env.openStore("store", StoreConfig.WITHOUT_DUPLICATES, txn); | |||

| ByteIterable byteIterable = store.get(txn, new ArrayByteIterable(k)); | |||

| if (byteIterable == null) { | |||

| return null; | |||

| } | |||

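| // getBytesUnsafe() may return a backing array longer than the stored value, | |||

| // so copy exactly getLength() bytes (Arrays is java.util.Arrays). | |||

| return Arrays.copyOf(byteIterable.getBytesUnsafe(), byteIterable.getLength()); | |||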

| }); | |||

| } | |||

| } | |||

| ``` | |||

| ### 1.3.4 BeginBlock -> DeliverTx -> EndBlock -> Commit | |||

| When Tendermint Core has decided on the block, it's transferred to the | |||

| application in 3 parts: `BeginBlock`, one `DeliverTx` per transaction and | |||

| `EndBlock` in the end. `DeliverTx` requests are transferred asynchronously, but the | |||

| responses are expected to come in order. | |||

| ```java | |||

| @Override | |||

| public void beginBlock(RequestBeginBlock req, StreamObserver<ResponseBeginBlock> responseObserver) { | |||

| txn = env.beginTransaction(); | |||

| store = env.openStore("store", StoreConfig.WITHOUT_DUPLICATES, txn); | |||

| var resp = ResponseBeginBlock.newBuilder().build(); | |||

| responseObserver.onNext(resp); | |||

| responseObserver.onCompleted(); | |||

| } | |||

| ``` | |||

| Here we begin a new transaction, which will accumulate the block's transactions and open the corresponding store. | |||

| ```java | |||

| @Override | |||

| public void deliverTx(RequestDeliverTx req, StreamObserver<ResponseDeliverTx> responseObserver) { | |||

| var tx = req.getTx(); | |||

| int code = validate(tx); | |||

| if (code == 0) { | |||

| List<byte[]> parts = split(tx, '='); | |||

| var key = new ArrayByteIterable(parts.get(0)); | |||

| var value = new ArrayByteIterable(parts.get(1)); | |||

| store.put(txn, key, value); | |||

| } | |||

| var resp = ResponseDeliverTx.newBuilder() | |||

| .setCode(code) | |||

| .build(); | |||

| responseObserver.onNext(resp); | |||

| responseObserver.onCompleted(); | |||

| } | |||

| ``` | |||

| If the transaction is badly formatted or the same key=value already exist, we | |||

| again return the non-zero code. Otherwise, we add it to the store. | |||

In the current design, a block can include incorrect transactions (those that
passed `CheckTx` but failed `DeliverTx`, or transactions included directly by the
proposer). This is done for performance reasons.

Note that we can't commit transactions inside `DeliverTx`, because then `Query`,
which may be called in parallel, would return inconsistent data (i.e. it would
report that some value already exists even though the block containing it has
not been committed yet).
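
The reasoning above can be modelled in memory. This minimal sketch uses a `HashMap` in place of Xodus. Writes from `deliverTx` are staged in a pending map, which plays the role of the guide's `txn`, and only become visible to `query` after `commit`. `StagedKVStore` and its methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory model of the block lifecycle (not the Xodus code).
// Methods are synchronized because Query may run in parallel with the block.
final class StagedKVStore {
    private final Map<String, String> committed = new HashMap<>();
    private Map<String, String> pending;

    synchronized void beginBlock() {
        pending = new HashMap<>();
    }

    synchronized void deliverTx(String key, String value) {
        if (pending == null) {
            throw new IllegalStateException("deliverTx called before beginBlock");
        }
        // Staged only: query() cannot see this yet.
        pending.put(key, value);
    }

    synchronized void commit() {
        if (pending != null) {
            committed.putAll(pending);
            pending = null;
        }
    }

    // Reads committed state only, so it never reports values from a block
    // that has not been committed.
    synchronized String query(String key) {
        return committed.get(key);
    }
}
```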

`Commit` instructs the application to persist the new state.

```java
@Override
public void commit(RequestCommit req, StreamObserver<ResponseCommit> responseObserver) {
    // Persist everything staged since BeginBlock.
    txn.commit();
    // Data carries the application hash; this example always returns 8 zero bytes.
    var resp = ResponseCommit.newBuilder()
            .setData(ByteString.copyFrom(new byte[8]))
            .build();
    responseObserver.onNext(resp);
    responseObserver.onCompleted();
}
```
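
The `Data` field of `ResponseCommit` carries the application hash that Tendermint Core records for the block. The eight zero bytes above are therefore what later appears in the node log as `appHash=0000000000000000`, since each byte becomes two hex digits. The JDK-only sketch below shows that encoding and a hypothetical deterministic alternative. `AppHash`, `toHex` and `sha256OfState` are illustrative names. Hashing a sorted `Map` is an assumption, not how the guide or Tendermint computes state roots.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

final class AppHash {
    private AppHash() {}

    // Two hex digits per byte, so 8 zero bytes become "0000000000000000".
    static String toHex(byte[] bytes) {
        var sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    // Hypothetical alternative to the constant: SHA-256 over the entries in
    // sorted key order, so nodes holding the same state compute the same hash.
    static byte[] sha256OfState(Map<String, String> state) {
        final MessageDigest digest;
        try {
            digest = MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is unavailable", e);
        }
        for (var entry : new TreeMap<>(state).entrySet()) {
            updateWithLength(digest, entry.getKey().getBytes(StandardCharsets.UTF_8));
            updateWithLength(digest, entry.getValue().getBytes(StandardCharsets.UTF_8));
        }
        return digest.digest();
    }

    // Length-prefixing keeps ("ab", "c") and ("a", "bc") from hashing the same.
    private static void updateWithLength(MessageDigest digest, byte[] bytes) {
        digest.update(ByteBuffer.allocate(Integer.BYTES).putInt(bytes.length).array());
        digest.update(bytes);
    }
}
```

Whatever scheme is used, every node must derive the same bytes from the same state. That is why the sketch fixes the iteration order instead of relying on `HashMap` ordering.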

### 1.3.5 Query

Now, when the client wants to know whether a particular key/value pair exists, it
will call the Tendermint Core RPC `/abci_query` endpoint, which in turn will call
the application's `Query` method.

Applications are free to provide their own APIs. But by using Tendermint Core
as a proxy, clients (including the [light client
package](https://godoc.org/github.com/tendermint/tendermint/lite)) can leverage
a unified API across different applications. Plus, they won't have to call the
otherwise separate Tendermint Core API for additional proofs.

Note that we don't include a proof here.

```java
@Override
public void query(RequestQuery req, StreamObserver<ResponseQuery> responseObserver) {
    var k = req.getData().toByteArray();
    // Look up the value persisted by a previous Commit.
    var v = getPersistedValue(k);
    var builder = ResponseQuery.newBuilder();
    if (v == null) {
        builder.setLog("does not exist");
    } else {
        builder.setLog("exists");
        builder.setKey(ByteString.copyFrom(k));
        builder.setValue(ByteString.copyFrom(v));
    }
    responseObserver.onNext(builder.build());
    responseObserver.onCompleted();
}
```

The complete specification can be found
[here](https://tendermint.com/docs/spec/abci/).
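
A client-side call to `/abci_query` can be sketched as below, under several assumptions. The RPC listens on `localhost:26657`, the address used later in this guide. The query data is accepted as `0x`-prefixed hex, which avoids putting quote characters into the URI. The JSON response returns `key` and `value` base64-encoded. `AbciQueryClient` and its methods are hypothetical helpers, and the HTTP request itself is left out.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical client-side helpers for Tendermint Core's /abci_query endpoint.
final class AbciQueryClient {
    private AbciQueryClient() {}

    // Builds a GET URI carrying the query data as 0x-prefixed hex (assumed to be
    // accepted by the RPC for byte parameters).
    static String abciQueryUri(String rpcAddress, byte[] data) {
        var sb = new StringBuilder("http://").append(rpcAddress).append("/abci_query?data=0x");
        for (byte b : data) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    // The key and value set by the application's query() come back base64-encoded.
    static String decodeValue(String base64Value) {
        return new String(Base64.getDecoder().decode(base64Value), StandardCharsets.UTF_8);
    }
}
```

For example, `decodeValue("cm9ja3M=")` yields `rocks`.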

## 1.4 Starting an application and a Tendermint Core instance

Put the following code into the `$KVSTORE_HOME/src/main/java/io/example/App.java` file:

```java
package io.example;

import jetbrains.exodus.env.Environment;
import jetbrains.exodus.env.Environments;

import java.io.IOException;

public class App {
    public static void main(String[] args) throws IOException, InterruptedException {
        // The Xodus environment keeps the application state in tmp/storage
        // (relative to the working directory) and is closed when main exits.
        try (Environment env = Environments.newInstance("tmp/storage")) {
            var app = new KVStoreApp(env);
            // Serve ABCI requests from Tendermint Core over gRPC on port 26658.
            var server = new GrpcServer(app, 26658);
            server.start();
            server.blockUntilShutdown();
        }
    }
}
```

This is the entry point of the application.
Here we create a special `Environment` object, which knows where to store the application state.
Then we create and start the gRPC server to handle Tendermint Core requests.

Create the `$KVSTORE_HOME/src/main/java/io/example/GrpcServer.java` file with the following content:

```java
package io.example;

import io.grpc.BindableService;
import io.grpc.Server;
import io.grpc.ServerBuilder;

import java.io.IOException;

class GrpcServer {
    private final Server server;
    private final int port;

    GrpcServer(BindableService service, int port) {
        this.port = port;
        this.server = ServerBuilder.forPort(port)
                .addService(service)
                .build();
    }

    void start() throws IOException {
        server.start();
        // Java strings do not interpolate "$port", so concatenate the value.
        System.out.println("gRPC server started, listening on " + port);
        Runtime.getRuntime().addShutdownHook(new Thread(() -> {
            System.out.println("shutting down gRPC server since JVM is shutting down");
            GrpcServer.this.stop();
            System.out.println("server shut down");
        }));
    }

    private void stop() {
        server.shutdown();
    }

    /**
     * Await termination on the main thread since the grpc library uses daemon threads.
     */
    void blockUntilShutdown() throws InterruptedException {
        server.awaitTermination();
    }
}
```
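
The `blockUntilShutdown` comment rests on a JVM rule: the process exits once only daemon threads remain, so `main` has to wait explicitly. The JDK-only sketch below mimics that arrangement with a daemon worker thread and a `CountDownLatch`. `DaemonBackedServer` is a hypothetical stand-in, not gRPC's implementation.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical JDK-only stand-in for GrpcServer. Its worker thread is a daemon,
// so on its own it would not keep the JVM alive once main() returns.
final class DaemonBackedServer {
    private final CountDownLatch terminated = new CountDownLatch(1);
    private volatile Thread worker;

    void start() {
        Thread t = new Thread(() -> {
            try {
                // Placeholder for serving requests until stop() interrupts the thread.
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.sleep(10);
                }
            } catch (InterruptedException e) {
                // Interrupted by stop(): fall through and report termination.
            } finally {
                terminated.countDown();
            }
        });
        t.setDaemon(true);
        worker = t;
        t.start();
    }

    void stop() {
        worker.interrupt();
    }

    // What main() would call: park until the worker has finished.
    void blockUntilShutdown() throws InterruptedException {
        terminated.await();
    }

    // Bounded variant; returns false on timeout or if the caller is interrupted.
    boolean awaitTermination(long timeoutMillis) {
        try {
            return terminated.await(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```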

## 1.5 Getting Up and Running

To create a default configuration, node key, and private validator files, let's
execute `tendermint init`. But before we do that, we need to install
Tendermint Core.

```sh
$ rm -rf /tmp/example
$ cd $GOPATH/src/github.com/tendermint/tendermint
$ make install
$ TMHOME="/tmp/example" tendermint init

I[2019-07-16|18:20:36.480] Generated private validator module=main keyFile=/tmp/example/config/priv_validator_key.json stateFile=/tmp/example/data/priv_validator_state.json
I[2019-07-16|18:20:36.481] Generated node key module=main path=/tmp/example/config/node_key.json
I[2019-07-16|18:20:36.482] Generated genesis file module=main path=/tmp/example/config/genesis.json
```
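
To check the result programmatically, the following sketch lists which of the four files named in the log above are missing under a given `TMHOME`. `InitFiles` is a hypothetical helper. `tendermint init` also creates other files, for example `config/config.toml`, which this sketch does not check.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: reports which files from the `tendermint init` log
// are missing under a given TMHOME directory.
final class InitFiles {
    static final List<String> EXPECTED = List.of(
            "config/priv_validator_key.json",
            "config/node_key.json",
            "config/genesis.json",
            "data/priv_validator_state.json");

    private InitFiles() {}

    static List<String> missing(Path tmHome) {
        return EXPECTED.stream()
                .filter(rel -> !Files.isRegularFile(tmHome.resolve(rel)))
                .collect(Collectors.toList());
    }
}
```

After a successful `tendermint init`, `InitFiles.missing(Path.of("/tmp/example"))` should return an empty list.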

Feel free to explore the generated files, which can be found in the
`/tmp/example/config` directory. Documentation on the config can be found
[here](https://tendermint.com/docs/tendermint-core/configuration.html).

We are ready to start our application:

```sh
$ ./gradlew run
gRPC server started, listening on 26658
```
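
Before starting Tendermint Core, you may want to confirm that the application is accepting connections on port 26658. This minimal JDK-only sketch assumes a plain TCP connect is a good enough signal. It does not verify that the listener actually speaks gRPC. `PortCheck.isListening` is a hypothetical helper.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical pre-flight check: is something accepting TCP connections at host:port?
final class PortCheck {
    private PortCheck() {}

    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unreachable.
            return false;
        }
    }
}
```

Once `./gradlew run` has printed its startup message, `PortCheck.isListening("127.0.0.1", 26658, 1000)` should return `true`.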

Then we need to start Tendermint Core and point it to our application. Staying
within the application directory, execute:

```sh
$ TMHOME="/tmp/example" tendermint node --abci grpc --proxy_app tcp://127.0.0.1:26658
I[2019-07-28|15:44:53.632] Version info module=main software=0.32.1 block=10 p2p=7
I[2019-07-28|15:44:53.677] Starting Node module=main impl=Node
I[2019-07-28|15:44:53.681] Started node module=main nodeInfo="{ProtocolVersion:{P2P:7 Block:10 App:0} ID_:7639e2841ccd47d5ae0f5aad3011b14049d3f452 ListenAddr:tcp://0.0.0.0:26656 Network:test-chain-Nhl3zk Version:0.32.1 Channels:4020212223303800 Moniker:Ivans-MacBook-Pro.local Other:{TxIndex:on RPCAddress:tcp://127.0.0.1:26657}}"
I[2019-07-28|15:44:54.801] Executed block module=state height=8 validTxs=0 invalidTxs=0
I[2019-07-28|15:44:54.814] Committed state module=state height=8 txs=0 appHash=0000000000000000
```

Now open another tab in your terminal and try sending a transaction:

```sh
$ curl -s 'localhost:26657/broadcast_tx_commit?tx="tendermint=rocks"'
{