
blockchain: Reorg reactor (#3561)

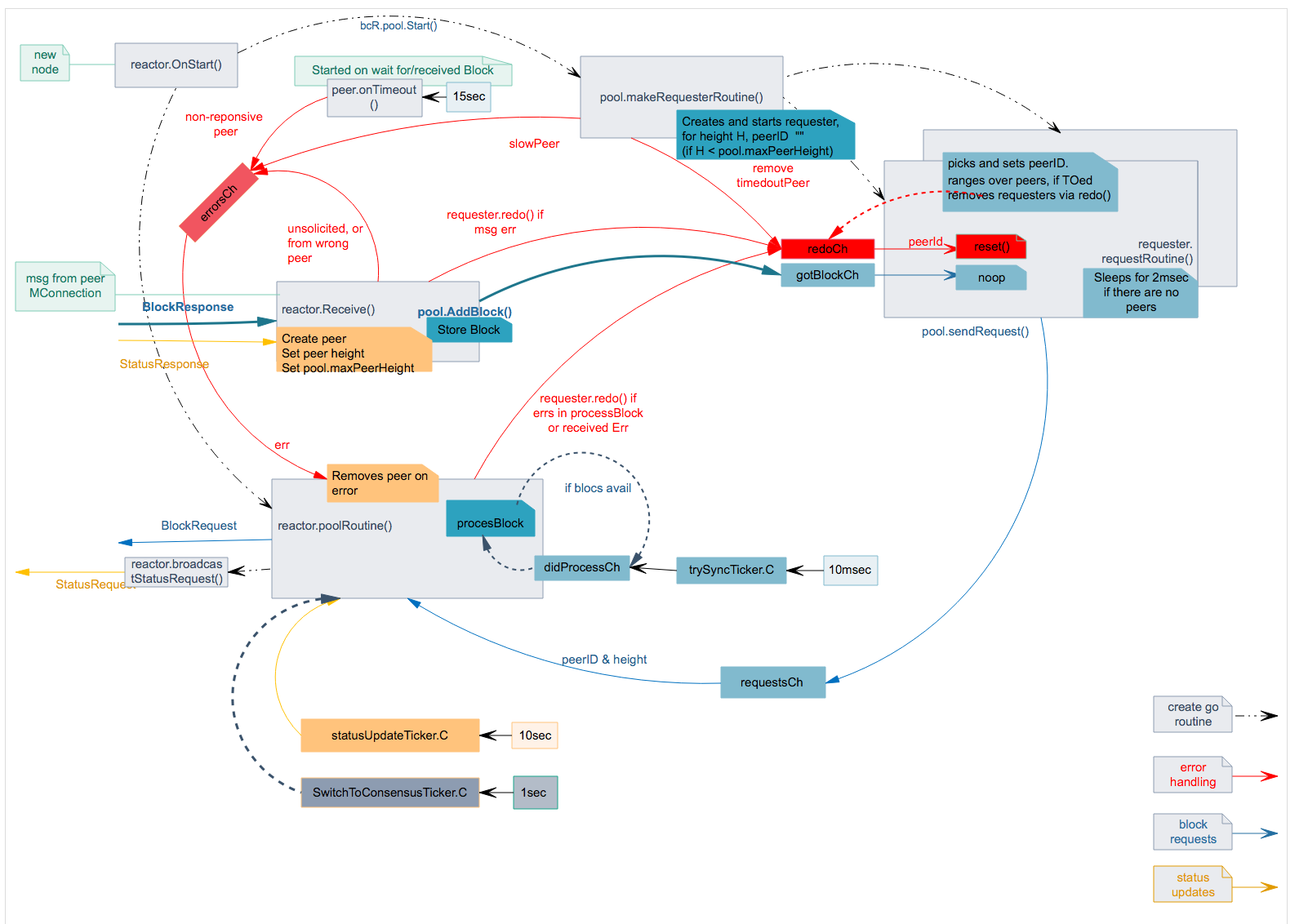

* go routines in blockchain reactor
* Added reference to the go routine diagram
* Initial commit
* cleanup
* Undo testing_logger change, committed by mistake
* Fix the test loggers
* pulled some fsm code into pool.go
* added pool tests
* changes to the design
  * added block requests under peer
  * moved the request trigger in the reactor poolRoutine, triggered now by a ticker
  * in general moved everything required for making block requests smarter in the poolRoutine
  * added a simple map of heights to keep track of what will need to be requested next
  * added a few more tests
* send errors to FSM in a different channel than blocks
  * send errors (RemovePeer) from switch on a different channel than the one receiving blocks
  * renamed channels
  * added more pool tests
* more pool tests
* lint errors
* more tests
* more tests
* switch fast sync to new implementation
* fixed data race in tests
* cleanup
* finished fsm tests
* address golangci comments :)
* address golangci comments :)
* Added timeout on next block needed to advance
* updating docs and cleanup
* fix issue in test from previous cleanup
* cleanup
* Added termination scenarios, tests and more cleanup
* small fixes to adr, comments and cleanup
* Fix bug in sendRequest()

  If we tried to send a request to a peer not present in the switch, a missing continue statement caused the request to be blackholed in a peer that was removed and never retried. While this bug was manifesting, the reactor kept asking for other blocks that would be stored and never consumed. Added the number of unconsumed blocks in the math for requesting blocks ahead of current processing height so eventually there will be no more blocks requested until the already received ones are consumed.
* remove bpPeer's didTimeout field
* Use distinct err codes for peer timeout and FSM timeouts
* Don't allow peers to update with lower height
* review comments from Ethan and Zarko
* some cleanup, renaming, comments
* Move block execution in separate goroutine
* Remove pool's numPending
* review comments
* fix lint, remove old blockchain reactor and duplicates in fsm tests
* small reorg around peer after review comments
* add the reactor spec
* verify block only once
* review comments
* change to int for max number of pending requests
* cleanup and godoc
* Add configuration flag fast sync version
* golangci fixes
* fix config template
* move both reactor versions under blockchain
* cleanup, golint, renaming stuff
* updated documentation, fixed more golint warnings
* integrate with behavior package
* sync with master
* gofmt
* add changelog_pending entry
* move to improvments
* suggestion to changelog entry

pull/3830/head

committed by

Marko


34 changed files with 4275 additions and 54 deletions


* CHANGELOG_PENDING.md (+1 -0)
* blockchain/v0/pool.go (+10 -7)
* blockchain/v0/pool_test.go (+1 -1)
* blockchain/v0/reactor.go (+7 -5)
* blockchain/v0/reactor_test.go (+4 -2)
* blockchain/v0/wire.go (+1 -1)
* blockchain/v1/peer.go (+209 -0)
* blockchain/v1/peer_test.go (+278 -0)
* blockchain/v1/pool.go (+369 -0)
* blockchain/v1/pool_test.go (+650 -0)
* blockchain/v1/reactor.go (+620 -0)
* blockchain/v1/reactor_fsm.go (+450 -0)
* blockchain/v1/reactor_fsm_test.go (+938 -0)
* blockchain/v1/reactor_test.go (+337 -0)
* blockchain/v1/wire.go (+13 -0)
* cmd/tendermint/commands/run_node.go (+1 -1)
* config/config.go (+41 -3)
* config/toml.go (+9 -1)
* consensus/common_test.go (+2 -2)
* consensus/reactor_test.go (+2 -2)
* consensus/replay_file.go (+2 -2)
* consensus/wal_generator.go (+3 -2)
* docs/spec/reactors/block_sync/bcv1/img/bc-reactor-new-datastructs.png (binary)
* docs/spec/reactors/block_sync/bcv1/img/bc-reactor-new-fsm.png (binary)
* docs/spec/reactors/block_sync/bcv1/img/bc-reactor-new-goroutines.png (binary)
* docs/spec/reactors/block_sync/bcv1/impl-v1.md (+237 -0)
* docs/spec/reactors/block_sync/img/bc-reactor-routines.png (binary)
* docs/spec/reactors/block_sync/impl.md (+8 -5)
* docs/tendermint-core/configuration.md (+8 -0)
* go.sum (+0 -5)
* node/node.go (+47 -13)
* store/store.go (+2 -1)
* store/store_test.go (+13 -1)
* store/wire.go (+12 -0)

CHANGELOG_PENDING.md (+1 -0)

blockchain/pool.go → blockchain/v0/pool.go

blockchain/pool_test.go → blockchain/v0/pool_test.go

blockchain/reactor.go → blockchain/v0/reactor.go

blockchain/reactor_test.go → blockchain/v0/reactor_test.go

blockchain/wire.go → blockchain/v0/wire.go

blockchain/v1/peer.go (+209 -0)

| @ -0,0 +1,209 @@ | |||

| package v1 | |||

| import ( | |||

| "fmt" | |||

| "math" | |||

| "time" | |||

| flow "github.com/tendermint/tendermint/libs/flowrate" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| //-------- | |||

| // Peer | |||

| // BpPeerParams stores the peer parameters that are used when creating a peer. | |||

| type BpPeerParams struct { | |||

| timeout time.Duration | |||

| minRecvRate int64 | |||

| sampleRate time.Duration | |||

| windowSize time.Duration | |||

| } | |||

| // BpPeer is the datastructure associated with a fast sync peer. | |||

| type BpPeer struct { | |||

| logger log.Logger | |||

| ID p2p.ID | |||

| Height int64 // the peer reported height | |||

| NumPendingBlockRequests int // number of requests still waiting for block responses | |||

| blocks map[int64]*types.Block // blocks received or expected to be received from this peer | |||

| blockResponseTimer *time.Timer | |||

| recvMonitor *flow.Monitor | |||

| params *BpPeerParams // parameters for timer and monitor | |||

| onErr func(err error, peerID p2p.ID) // function to call on error | |||

| } | |||

| // NewBpPeer creates a new peer. | |||

| func NewBpPeer( | |||

| peerID p2p.ID, height int64, onErr func(err error, peerID p2p.ID), params *BpPeerParams) *BpPeer { | |||

| if params == nil { | |||

| params = BpPeerDefaultParams() | |||

| } | |||

| return &BpPeer{ | |||

| ID: peerID, | |||

| Height: height, | |||

| blocks: make(map[int64]*types.Block, maxRequestsPerPeer), | |||

| logger: log.NewNopLogger(), | |||

| onErr: onErr, | |||

| params: params, | |||

| } | |||

| } | |||

| // String returns a string representation of a peer. | |||

| func (peer *BpPeer) String() string { | |||

| return fmt.Sprintf("peer: %v height: %v pending: %v", peer.ID, peer.Height, peer.NumPendingBlockRequests) | |||

| } | |||

| // SetLogger sets the logger of the peer. | |||

| func (peer *BpPeer) SetLogger(l log.Logger) { | |||

| peer.logger = l | |||

| } | |||

| // Cleanup performs cleanup of the peer, removes blocks, requests, stops timer and monitor. | |||

| func (peer *BpPeer) Cleanup() { | |||

| if peer.blockResponseTimer != nil { | |||

| peer.blockResponseTimer.Stop() | |||

| } | |||

| if peer.NumPendingBlockRequests != 0 { | |||

| peer.logger.Info("peer with pending requests is being cleaned", "peer", peer.ID) | |||

| } | |||

| if len(peer.blocks)-peer.NumPendingBlockRequests != 0 { | |||

| peer.logger.Info("peer with pending blocks is being cleaned", "peer", peer.ID) | |||

| } | |||

| for h := range peer.blocks { | |||

| delete(peer.blocks, h) | |||

| } | |||

| peer.NumPendingBlockRequests = 0 | |||

| peer.recvMonitor = nil | |||

| } | |||

| // BlockAtHeight returns the block at a given height if available and errMissingBlock otherwise. | |||

| func (peer *BpPeer) BlockAtHeight(height int64) (*types.Block, error) { | |||

| block, ok := peer.blocks[height] | |||

| if !ok { | |||

| return nil, errMissingBlock | |||

| } | |||

| if block == nil { | |||

| return nil, errMissingBlock | |||

| } | |||

| return block, nil | |||

| } | |||

| // AddBlock adds a block at peer level. The block must be non-nil and recvSize must be non-negative. | |||

| // The peer must have a pending request for this block. | |||

| func (peer *BpPeer) AddBlock(block *types.Block, recvSize int) error { | |||

| if block == nil || recvSize < 0 { | |||

| panic("bad parameters") | |||

| } | |||

| existingBlock, ok := peer.blocks[block.Height] | |||

| if !ok { | |||

| peer.logger.Error("unsolicited block", "blockHeight", block.Height, "peer", peer.ID) | |||

| return errMissingBlock | |||

| } | |||

| if existingBlock != nil { | |||

| peer.logger.Error("already have a block for height", "height", block.Height) | |||

| return errDuplicateBlock | |||

| } | |||

| if peer.NumPendingBlockRequests == 0 { | |||

| panic("peer does not have pending requests") | |||

| } | |||

| peer.blocks[block.Height] = block | |||

| peer.NumPendingBlockRequests-- | |||

| if peer.NumPendingBlockRequests == 0 { | |||

| peer.stopMonitor() | |||

| peer.stopBlockResponseTimer() | |||

| } else { | |||

| peer.recvMonitor.Update(recvSize) | |||

| peer.resetBlockResponseTimer() | |||

| } | |||

| return nil | |||

| } | |||

| // RemoveBlock removes the block at the given height. | |||

| func (peer *BpPeer) RemoveBlock(height int64) { | |||

| delete(peer.blocks, height) | |||

| } | |||

| // RequestSent records that a request was sent, and starts the peer timer and monitor if needed. | |||

| func (peer *BpPeer) RequestSent(height int64) { | |||

| peer.blocks[height] = nil | |||

| if peer.NumPendingBlockRequests == 0 { | |||

| peer.startMonitor() | |||

| peer.resetBlockResponseTimer() | |||

| } | |||

| peer.NumPendingBlockRequests++ | |||

| } | |||
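
The bookkeeping shared by `RequestSent` and `AddBlock` can be sketched in isolation: a nil map entry marks a height that was requested but not yet delivered, and a counter tracks outstanding requests. This is a simplified stand-in, not the reactor's code. The names `tracker`, `requestSent` and `addBlock` are hypothetical, plain strings replace `*types.Block`, and the timer and monitor are left out.

```go
package main

import (
	"errors"
	"fmt"
)

var (
	errUnsolicited = errors.New("unsolicited block")
	errDuplicate   = errors.New("duplicate block")
)

// tracker is a hypothetical, simplified stand-in for the per-peer state.
type tracker struct {
	blocks  map[int64]*string // nil value: requested, not yet received
	pending int               // requests still waiting for a response
}

func newTracker() *tracker {
	return &tracker{blocks: make(map[int64]*string)}
}

// requestSent marks a height as requested by storing a nil placeholder.
func (t *tracker) requestSent(h int64) {
	t.blocks[h] = nil
	t.pending++
}

// addBlock stores a payload only if the height was requested and is still empty.
func (t *tracker) addBlock(h int64, payload string) error {
	existing, ok := t.blocks[h]
	if !ok {
		return errUnsolicited
	}
	if existing != nil {
		return errDuplicate
	}
	t.blocks[h] = &payload
	t.pending--
	return nil
}

func main() {
	t := newTracker()
	t.requestSent(1)
	fmt.Println(t.addBlock(2, "b2")) // height 2 was never requested
	fmt.Println(t.addBlock(1, "b1")) // accepted, pending drops to 0
	fmt.Println(t.addBlock(1, "b1")) // height 1 was already delivered
}
```

Using the map entry itself as the "requested" marker means a single lookup distinguishes the three cases: absent key, nil placeholder, and stored block.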

| // CheckRate verifies that the response rate of the peer is acceptable (higher than the minimum allowed). | |||

| func (peer *BpPeer) CheckRate() error { | |||

| if peer.NumPendingBlockRequests == 0 { | |||

| return nil | |||

| } | |||

| curRate := peer.recvMonitor.Status().CurRate | |||

| // curRate can be 0 on start | |||

| if curRate != 0 && curRate < peer.params.minRecvRate { | |||

| err := errSlowPeer | |||

| peer.logger.Error("SendTimeout", "peer", peer, | |||

| "reason", err, | |||

| "curRate", fmt.Sprintf("%d KB/s", curRate/1024), | |||

| "minRate", fmt.Sprintf("%d KB/s", peer.params.minRecvRate/1024)) | |||

| return err | |||

| } | |||

| return nil | |||

| } | |||
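
The comparison in `CheckRate` can be tried on its own with the default `minRecvRate` of 7680 bytes/s from `BpPeerDefaultParams`. The helper name `isSlow` is an assumption made for this sketch; only the condition itself is taken from the code above.

```go
package main

import "fmt"

// isSlow mirrors the comparison used in CheckRate: a zero rate is treated as
// "no samples yet" and is never flagged as slow.
func isSlow(curRate, minRecvRate int64) bool {
	return curRate != 0 && curRate < minRecvRate
}

func main() {
	const minRecvRate = int64(7680) // default value, 7.5 KB/s
	for _, rate := range []int64{0, 4096, 7680, 20480} {
		fmt.Printf("rate=%d B/s (%d KB/s) slow=%v\n",
			rate, rate/1024, isSlow(rate, minRecvRate))
	}
}
```

Note that the log line in `CheckRate` uses integer division by 1024, so the default threshold is reported as 7 KB/s rather than 7.5 KB/s.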

| func (peer *BpPeer) onTimeout() { | |||

| peer.onErr(errNoPeerResponse, peer.ID) | |||

| } | |||

| func (peer *BpPeer) stopMonitor() { | |||

| peer.recvMonitor.Done() | |||

| peer.recvMonitor = nil | |||

| } | |||

| func (peer *BpPeer) startMonitor() { | |||

| peer.recvMonitor = flow.New(peer.params.sampleRate, peer.params.windowSize) | |||

| initialValue := float64(peer.params.minRecvRate) * math.E | |||

| peer.recvMonitor.SetREMA(initialValue) | |||

| } | |||

| func (peer *BpPeer) resetBlockResponseTimer() { | |||

| if peer.blockResponseTimer == nil { | |||

| peer.blockResponseTimer = time.AfterFunc(peer.params.timeout, peer.onTimeout) | |||

| } else { | |||

| peer.blockResponseTimer.Reset(peer.params.timeout) | |||

| } | |||

| } | |||

| func (peer *BpPeer) stopBlockResponseTimer() bool { | |||

| if peer.blockResponseTimer == nil { | |||

| return false | |||

| } | |||

| return peer.blockResponseTimer.Stop() | |||

| } | |||
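
The lazy arm-or-reset pattern in `resetBlockResponseTimer` and the boolean returned by `stopBlockResponseTimer` rely on standard `time.AfterFunc`, `Timer.Reset` and `Timer.Stop` behaviour. The sketch below reproduces that pattern outside the reactor; the `watchdog` type and `newWatchdog` helper are hypothetical names introduced only for illustration.

```go
package main

import (
	"fmt"
	"time"
)

// watchdog is a hypothetical wrapper around the AfterFunc/Reset pattern.
type watchdog struct {
	timer   *time.Timer
	timeout time.Duration
	onFire  func()
}

// newWatchdog builds an unarmed watchdog with a timeout given in milliseconds.
func newWatchdog(ms int, onFire func()) *watchdog {
	return &watchdog{timeout: time.Duration(ms) * time.Millisecond, onFire: onFire}
}

// reset arms the timer on first use and re-arms it afterwards.
func (w *watchdog) reset() {
	if w.timer == nil {
		w.timer = time.AfterFunc(w.timeout, w.onFire)
	} else {
		w.timer.Reset(w.timeout)
	}
}

// stop reports whether a running timer was stopped before it fired.
func (w *watchdog) stop() bool {
	if w.timer == nil {
		return false
	}
	return w.timer.Stop()
}

func main() {
	fired := make(chan struct{}, 1)
	w := newWatchdog(20, func() { fired <- struct{}{} })

	fmt.Println("stop before arming:", w.stop())
	w.reset()
	fmt.Println("stop while running:", w.stop())

	w.reset()
	<-fired // wait for the callback to run
	fmt.Println("stop after expiry:", w.stop())
}
```

This is the same property that `checkByStoppingPeerTimer` in the test file depends on: `Stop` returns true only for a timer that was still running.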

| // BpPeerDefaultParams returns the default peer parameters. | |||

| func BpPeerDefaultParams() *BpPeerParams { | |||

| return &BpPeerParams{ | |||

| // Timeout for a peer to respond to a block request. | |||

| timeout: 15 * time.Second, | |||

| // Minimum recv rate to ensure we're receiving blocks from a peer fast | |||

| // enough. If a peer is not sending data at that rate or faster, we | |||

| // consider them to have timed out and we disconnect. | |||

| // | |||

| // Assuming a DSL connection (not a good choice) 128 Kbps (upload) ~ 15 KB/s, | |||

| // sending data across the Atlantic ~ 7.5 KB/s. | |||

| minRecvRate: int64(7680), | |||

| // Monitor parameters | |||

| sampleRate: time.Second, | |||

| windowSize: 40 * time.Second, | |||

| } | |||

| } | |||

blockchain/v1/peer_test.go (+278 -0)

| @ -0,0 +1,278 @@ | |||

| package v1 | |||

| import ( | |||

| "sync" | |||

| "testing" | |||

| "time" | |||

| "github.com/stretchr/testify/assert" | |||

| "github.com/stretchr/testify/require" | |||

| cmn "github.com/tendermint/tendermint/libs/common" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| func TestPeerMonitor(t *testing.T) { | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) {}, | |||

| nil) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| peer.startMonitor() | |||

| assert.NotNil(t, peer.recvMonitor) | |||

| peer.stopMonitor() | |||

| assert.Nil(t, peer.recvMonitor) | |||

| } | |||

| func TestPeerResetBlockResponseTimer(t *testing.T) { | |||

| var ( | |||

| numErrFuncCalls int // number of calls to the errFunc | |||

| lastErr error // last generated error | |||

| peerTestMtx sync.Mutex // modifications of ^^ variables are also done from timer handler goroutine | |||

| ) | |||

| params := &BpPeerParams{timeout: 2 * time.Millisecond} | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) { | |||

| peerTestMtx.Lock() | |||

| defer peerTestMtx.Unlock() | |||

| lastErr = err | |||

| numErrFuncCalls++ | |||

| }, | |||

| params) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| checkByStoppingPeerTimer(t, peer, false) | |||

| // initial reset call with peer having a nil timer | |||

| peer.resetBlockResponseTimer() | |||

| assert.NotNil(t, peer.blockResponseTimer) | |||

| // make sure timer is running and stop it | |||

| checkByStoppingPeerTimer(t, peer, true) | |||

| // reset with running timer | |||

| peer.resetBlockResponseTimer() | |||

| time.Sleep(time.Millisecond) | |||

| peer.resetBlockResponseTimer() | |||

| assert.NotNil(t, peer.blockResponseTimer) | |||

| // let the timer expire and ... | |||

| time.Sleep(3 * time.Millisecond) | |||

| // ... check timer is not running | |||

| checkByStoppingPeerTimer(t, peer, false) | |||

| peerTestMtx.Lock() | |||

| // ... check errNoPeerResponse has been sent | |||

| assert.Equal(t, 1, numErrFuncCalls) | |||

| assert.Equal(t, lastErr, errNoPeerResponse) | |||

| peerTestMtx.Unlock() | |||

| } | |||

| func TestPeerRequestSent(t *testing.T) { | |||

| params := &BpPeerParams{timeout: 2 * time.Millisecond} | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) {}, | |||

| params) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| peer.RequestSent(1) | |||

| assert.NotNil(t, peer.recvMonitor) | |||

| assert.NotNil(t, peer.blockResponseTimer) | |||

| assert.Equal(t, 1, peer.NumPendingBlockRequests) | |||

| peer.RequestSent(1) | |||

| assert.NotNil(t, peer.recvMonitor) | |||

| assert.NotNil(t, peer.blockResponseTimer) | |||

| assert.Equal(t, 2, peer.NumPendingBlockRequests) | |||

| } | |||

| func TestPeerGetAndRemoveBlock(t *testing.T) { | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 100, | |||

| func(err error, _ p2p.ID) {}, | |||

| nil) | |||

| // Change peer height | |||

| peer.Height = int64(10) | |||

| assert.Equal(t, int64(10), peer.Height) | |||

| // request some blocks and receive few of them | |||

| for i := 1; i <= 10; i++ { | |||

| peer.RequestSent(int64(i)) | |||

| if i > 5 { | |||

| // only receive blocks 1..5 | |||

| continue | |||

| } | |||

| _ = peer.AddBlock(makeSmallBlock(i), 10) | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| height int64 | |||

| wantErr error | |||

| blockPresent bool | |||

| }{ | |||

| {"no request", 100, errMissingBlock, false}, | |||

| {"no block", 6, errMissingBlock, false}, | |||

| {"block 1 present", 1, nil, true}, | |||

| {"block max present", 5, nil, true}, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| // try to get the block | |||

| b, err := peer.BlockAtHeight(tt.height) | |||

| assert.Equal(t, tt.wantErr, err) | |||

| assert.Equal(t, tt.blockPresent, b != nil) | |||

| // remove the block | |||

| peer.RemoveBlock(tt.height) | |||

| _, err = peer.BlockAtHeight(tt.height) | |||

| assert.Equal(t, errMissingBlock, err) | |||

| }) | |||

| } | |||

| } | |||

| func TestPeerAddBlock(t *testing.T) { | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 100, | |||

| func(err error, _ p2p.ID) {}, | |||

| nil) | |||

| // request some blocks, receive one | |||

| for i := 1; i <= 10; i++ { | |||

| peer.RequestSent(int64(i)) | |||

| if i == 5 { | |||

| // receive block 5 | |||

| _ = peer.AddBlock(makeSmallBlock(i), 10) | |||

| } | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| height int64 | |||

| wantErr error | |||

| blockPresent bool | |||

| }{ | |||

| {"no request", 50, errMissingBlock, false}, | |||

| {"duplicate block", 5, errDuplicateBlock, true}, | |||

| {"block 1 successfully received", 1, nil, true}, | |||

| {"block max successfully received", 10, nil, true}, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| // try to add the block | |||

| err := peer.AddBlock(makeSmallBlock(int(tt.height)), 10) | |||

| assert.Equal(t, tt.wantErr, err) | |||

| _, err = peer.BlockAtHeight(tt.height) | |||

| assert.Equal(t, tt.blockPresent, err == nil) | |||

| }) | |||

| } | |||

| } | |||

| func TestPeerOnErrFuncCalledDueToExpiration(t *testing.T) { | |||

| params := &BpPeerParams{timeout: 2 * time.Millisecond} | |||

| var ( | |||

| numErrFuncCalls int // number of calls to the onErr function | |||

| lastErr error // last generated error | |||

| peerTestMtx sync.Mutex // modifications of ^^ variables are also done from timer handler goroutine | |||

| ) | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) { | |||

| peerTestMtx.Lock() | |||

| defer peerTestMtx.Unlock() | |||

| lastErr = err | |||

| numErrFuncCalls++ | |||

| }, | |||

| params) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| peer.RequestSent(1) | |||

| time.Sleep(4 * time.Millisecond) | |||

| // timer should have expired by now, check that the on error function was called | |||

| peerTestMtx.Lock() | |||

| assert.Equal(t, 1, numErrFuncCalls) | |||

| assert.Equal(t, errNoPeerResponse, lastErr) | |||

| peerTestMtx.Unlock() | |||

| } | |||

| func TestPeerCheckRate(t *testing.T) { | |||

| params := &BpPeerParams{ | |||

| timeout: time.Second, | |||

| minRecvRate: int64(100), // 100 bytes/sec exponential moving average | |||

| } | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) {}, | |||

| params) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| require.Nil(t, peer.CheckRate()) | |||

| for i := 0; i < 40; i++ { | |||

| peer.RequestSent(int64(i)) | |||

| } | |||

| // monitor starts with a higher rEMA (math.E * minRecvRate, ~ 2.7x), wait for it to go down | |||

| time.Sleep(900 * time.Millisecond) | |||

| // normal peer - send a bit more than 100 bytes/sec, > 10 bytes/100msec, check peer is not considered slow | |||

| for i := 0; i < 10; i++ { | |||

| _ = peer.AddBlock(makeSmallBlock(i), 11) | |||

| time.Sleep(100 * time.Millisecond) | |||

| require.Nil(t, peer.CheckRate()) | |||

| } | |||

| // slow peer - send a bit less than 10 bytes/100msec | |||

| for i := 10; i < 20; i++ { | |||

| _ = peer.AddBlock(makeSmallBlock(i), 9) | |||

| time.Sleep(100 * time.Millisecond) | |||

| } | |||

| // check peer is considered slow | |||

| assert.Equal(t, errSlowPeer, peer.CheckRate()) | |||

| } | |||

| func TestPeerCleanup(t *testing.T) { | |||

| params := &BpPeerParams{timeout: 2 * time.Millisecond} | |||

| peer := NewBpPeer( | |||

| p2p.ID(cmn.RandStr(12)), 10, | |||

| func(err error, _ p2p.ID) {}, | |||

| params) | |||

| peer.SetLogger(log.TestingLogger()) | |||

| assert.Nil(t, peer.blockResponseTimer) | |||

| peer.RequestSent(1) | |||

| assert.NotNil(t, peer.blockResponseTimer) | |||

| peer.Cleanup() | |||

| checkByStoppingPeerTimer(t, peer, false) | |||

| } | |||

| // Check if peer timer is running or not (a running timer can be successfully stopped). | |||

| // Note: stops the timer. | |||

| func checkByStoppingPeerTimer(t *testing.T, peer *BpPeer, running bool) { | |||

| assert.NotPanics(t, func() { | |||

| stopped := peer.stopBlockResponseTimer() | |||

| if running { | |||

| assert.True(t, stopped) | |||

| } else { | |||

| assert.False(t, stopped) | |||

| } | |||

| }) | |||

| } | |||

| func makeSmallBlock(height int) *types.Block { | |||

| return types.MakeBlock(int64(height), []types.Tx{types.Tx("foo")}, nil, nil) | |||

| } | |||

blockchain/v1/pool.go (+369 -0)

| @ -0,0 +1,369 @@ | |||

| package v1 | |||

| import ( | |||

| "sort" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| // BlockPool keeps track of the fast sync peers, block requests and block responses. | |||

| type BlockPool struct { | |||

| logger log.Logger | |||

| // Set of peers that have sent status responses, with height bigger than pool.Height | |||

| peers map[p2p.ID]*BpPeer | |||

| // Set of block heights and the corresponding peers from where a block response is expected or has been received. | |||

| blocks map[int64]p2p.ID | |||

| plannedRequests map[int64]struct{} // list of blocks to be assigned peers for blockRequest | |||

| nextRequestHeight int64 // next height to be added to plannedRequests | |||

| Height int64 // height of next block to execute | |||

| MaxPeerHeight int64 // maximum height of all peers | |||

| toBcR bcReactor | |||

| } | |||

| // NewBlockPool creates a new BlockPool. | |||

| func NewBlockPool(height int64, toBcR bcReactor) *BlockPool { | |||

| return &BlockPool{ | |||

| Height: height, | |||

| MaxPeerHeight: 0, | |||

| peers: make(map[p2p.ID]*BpPeer), | |||

| blocks: make(map[int64]p2p.ID), | |||

| plannedRequests: make(map[int64]struct{}), | |||

| nextRequestHeight: height, | |||

| toBcR: toBcR, | |||

| } | |||

| } | |||

| // SetLogger sets the logger of the pool. | |||

| func (pool *BlockPool) SetLogger(l log.Logger) { | |||

| pool.logger = l | |||

| } | |||

| // ReachedMaxHeight checks if the pool has reached the maximum peer height. | |||

| func (pool *BlockPool) ReachedMaxHeight() bool { | |||

| return pool.Height >= pool.MaxPeerHeight | |||

| } | |||

| func (pool *BlockPool) rescheduleRequest(peerID p2p.ID, height int64) { | |||

| pool.logger.Info("reschedule requests made to peer for height ", "peerID", peerID, "height", height) | |||

| pool.plannedRequests[height] = struct{}{} | |||

| delete(pool.blocks, height) | |||

| pool.peers[peerID].RemoveBlock(height) | |||

| } | |||

| // Updates the pool's max height. If no peers are left MaxPeerHeight is set to 0. | |||

| func (pool *BlockPool) updateMaxPeerHeight() { | |||

| var newMax int64 | |||

| for _, peer := range pool.peers { | |||

| peerHeight := peer.Height | |||

| if peerHeight > newMax { | |||

| newMax = peerHeight | |||

| } | |||

| } | |||

| pool.MaxPeerHeight = newMax | |||

| } | |||

| // UpdatePeer adds a new peer or updates an existing peer with a new height. | |||

| // If a peer is short it is not added. | |||

| func (pool *BlockPool) UpdatePeer(peerID p2p.ID, height int64) error { | |||

| peer := pool.peers[peerID] | |||

| if peer == nil { | |||

| if height < pool.Height { | |||

| pool.logger.Info("Peer height too small", | |||

| "peer", peerID, "height", height, "fsm_height", pool.Height) | |||

| return errPeerTooShort | |||

| } | |||

| // Add new peer. | |||

| peer = NewBpPeer(peerID, height, pool.toBcR.sendPeerError, nil) | |||

| peer.SetLogger(pool.logger.With("peer", peerID)) | |||

| pool.peers[peerID] = peer | |||

| pool.logger.Info("added peer", "peerID", peerID, "height", height, "num_peers", len(pool.peers)) | |||

| } else { | |||

| // Check if peer is lowering its height. This is not allowed. | |||

| if height < peer.Height { | |||

| pool.RemovePeer(peerID, errPeerLowersItsHeight) | |||

| return errPeerLowersItsHeight | |||

| } | |||

| // Update existing peer. | |||

| peer.Height = height | |||

| } | |||

| // Update the pool's MaxPeerHeight if needed. | |||

| pool.updateMaxPeerHeight() | |||

| return nil | |||

| } | |||

| // Cleans and deletes the peer. Recomputes the max peer height. | |||

| func (pool *BlockPool) deletePeer(peer *BpPeer) { | |||

| if peer == nil { | |||

| return | |||

| } | |||

| peer.Cleanup() | |||

| delete(pool.peers, peer.ID) | |||

| if peer.Height == pool.MaxPeerHeight { | |||

| pool.updateMaxPeerHeight() | |||

| } | |||

| } | |||

| // RemovePeer removes the blocks and requests from the peer, reschedules them and deletes the peer. | |||

| func (pool *BlockPool) RemovePeer(peerID p2p.ID, err error) { | |||

| peer := pool.peers[peerID] | |||

| if peer == nil { | |||

| return | |||

| } | |||

| pool.logger.Info("removing peer", "peerID", peerID, "error", err) | |||

| // Reschedule the block requests made to the peer, or received and not processed yet. | |||

| // Note that some of the requests may be removed further down. | |||

| for h := range pool.peers[peerID].blocks { | |||

| pool.rescheduleRequest(peerID, h) | |||

| } | |||

| oldMaxPeerHeight := pool.MaxPeerHeight | |||

| // Delete the peer. This operation may result in the pool's MaxPeerHeight being lowered. | |||

| pool.deletePeer(peer) | |||

| // Check if the pool's MaxPeerHeight has been lowered. | |||

| // This may happen if the tallest peer has been removed. | |||

| if oldMaxPeerHeight > pool.MaxPeerHeight { | |||

| // Remove any planned requests for heights over the new MaxPeerHeight. | |||

| for h := range pool.plannedRequests { | |||

| if h > pool.MaxPeerHeight { | |||

| delete(pool.plannedRequests, h) | |||

| } | |||

| } | |||

| // Adjust the nextRequestHeight to the new max plus one. | |||

| if pool.nextRequestHeight > pool.MaxPeerHeight { | |||

| pool.nextRequestHeight = pool.MaxPeerHeight + 1 | |||

| } | |||

| } | |||

| } | |||
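
The tail of `RemovePeer` can be illustrated with plain maps: once the tallest peer is gone, planned heights above the new maximum are dropped and the next height to plan is pulled back. The function names `maxHeight` and `prune` and the string peer IDs are assumptions of this sketch, which omits the rescheduling of the removed peer's own blocks.

```go
package main

import "fmt"

// maxHeight returns the tallest reported height, or 0 when no peers remain.
func maxHeight(peers map[string]int64) int64 {
	var tallest int64
	for _, h := range peers {
		if h > tallest {
			tallest = h
		}
	}
	return tallest
}

// prune drops planned heights above newMax and clamps next to newMax+1.
// It returns the adjusted next height.
func prune(planned map[int64]struct{}, next, newMax int64) int64 {
	for h := range planned {
		if h > newMax {
			delete(planned, h) // deleting during range is allowed in Go
		}
	}
	if next > newMax {
		next = newMax + 1
	}
	return next
}

func main() {
	peers := map[string]int64{"P1": 100, "P2": 40}
	planned := map[int64]struct{}{38: {}, 39: {}, 41: {}, 42: {}}
	next := int64(43)

	delete(peers, "P1") // the tallest peer goes away
	next = prune(planned, next, maxHeight(peers))
	fmt.Println(len(planned), next) // 38 and 39 remain; planning resumes at 41
}
```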

| func (pool *BlockPool) removeShortPeers() { | |||

| for _, peer := range pool.peers { | |||

| if peer.Height < pool.Height { | |||

| pool.RemovePeer(peer.ID, nil) | |||

| } | |||

| } | |||

| } | |||

| func (pool *BlockPool) removeBadPeers() { | |||

| pool.removeShortPeers() | |||

| for _, peer := range pool.peers { | |||

| if err := peer.CheckRate(); err != nil { | |||

| pool.RemovePeer(peer.ID, err) | |||

| pool.toBcR.sendPeerError(err, peer.ID) | |||

| } | |||

| } | |||

| } | |||

| // MakeNextRequests creates more requests if the block pool is running low. | |||

| func (pool *BlockPool) MakeNextRequests(maxNumRequests int) { | |||

| heights := pool.makeRequestBatch(maxNumRequests) | |||

| if len(heights) != 0 { | |||

| pool.logger.Info("makeNextRequests will make following requests", | |||

| "number", len(heights), "heights", heights) | |||

| } | |||

| for _, height := range heights { | |||

| h := int64(height) | |||

| if !pool.sendRequest(h) { | |||

| // If a good peer was not found for sending the request at height h then return, | |||

| // as it shouldn't be possible to find a peer for h+1. | |||

| return | |||

| } | |||

| delete(pool.plannedRequests, h) | |||

| } | |||

| } | |||

| // Makes a batch of requests sorted by height such that the block pool has up to maxNumRequests entries. | |||

| func (pool *BlockPool) makeRequestBatch(maxNumRequests int) []int { | |||

| pool.removeBadPeers() | |||

| // At this point pool.plannedRequests may include heights for requests to be redone due to removal of peers: | |||

| // - peers timed out or were removed by switch | |||

| // - FSM timed out on waiting to advance the block execution due to missing blocks at h or h+1 | |||

| // Determine the number of requests needed by subtracting the number of requests already made from the maximum | |||

| // allowed | |||

| numNeeded := maxNumRequests - len(pool.blocks) | |||

| for len(pool.plannedRequests) < numNeeded { | |||

| if pool.nextRequestHeight > pool.MaxPeerHeight { | |||

| break | |||

| } | |||

| pool.plannedRequests[pool.nextRequestHeight] = struct{}{} | |||

| pool.nextRequestHeight++ | |||

| } | |||

| heights := make([]int, 0, len(pool.plannedRequests)) | |||

| for k := range pool.plannedRequests { | |||

| heights = append(heights, int(k)) | |||

| } | |||

| sort.Ints(heights) | |||

| return heights | |||

| } | |||
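
The planning step in `makeRequestBatch` tops up the planned set so that in-flight plus planned heights stay within the limit, then returns the planned heights in ascending order. A minimal sketch of that arithmetic follows. The function `planBatch` is hypothetical, it skips the `removeBadPeers` call, and it keeps heights as `int64` with `sort.Slice` where the original converts to `int` and uses `sort.Ints`.

```go
package main

import (
	"fmt"
	"sort"
)

// planBatch adds new heights to planned until in-flight plus planned reaches
// maxRequests, never planning past maxPeerHeight. It returns the sorted
// planned heights and the updated next height.
func planBatch(planned map[int64]struct{}, inFlight, maxRequests int,
	next, maxPeerHeight int64) ([]int64, int64) {

	needed := maxRequests - inFlight
	for len(planned) < needed && next <= maxPeerHeight {
		planned[next] = struct{}{}
		next++
	}

	heights := make([]int64, 0, len(planned))
	for h := range planned {
		heights = append(heights, h)
	}
	sort.Slice(heights, func(i, j int) bool { return heights[i] < heights[j] })
	return heights, next
}

func main() {
	// Height 3 was rescheduled after a peer removal; 2 requests are in flight.
	planned := map[int64]struct{}{3: {}}
	heights, next := planBatch(planned, 2, 6, 10, 12)
	fmt.Println(heights, next) // prints "[3 10 11 12] 13"
}
```

Rescheduled heights stay in the planned set, so they sort ahead of newly planned ones and are retried first.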

| func (pool *BlockPool) sendRequest(height int64) bool { | |||

| for _, peer := range pool.peers { | |||

| if peer.NumPendingBlockRequests >= maxRequestsPerPeer { | |||

| continue | |||

| } | |||

| if peer.Height < height { | |||

| continue | |||

| } | |||

| err := pool.toBcR.sendBlockRequest(peer.ID, height) | |||

| if err == errNilPeerForBlockRequest { | |||

| // Switch does not have this peer, remove it and continue to look for another peer. | |||

| pool.logger.Error("switch does not have peer..removing peer selected for height", "peer", | |||

| peer.ID, "height", height) | |||

| pool.RemovePeer(peer.ID, err) | |||

| continue | |||

| } | |||

| if err == errSendQueueFull { | |||

| pool.logger.Error("peer queue is full", "peer", peer.ID, "height", height) | |||

| continue | |||

| } | |||

| pool.logger.Info("assigned request to peer", "peer", peer.ID, "height", height) | |||

| pool.blocks[height] = peer.ID | |||

| peer.RequestSent(height) | |||

| return true | |||

| } | |||

| pool.logger.Error("could not find peer to send request for block at height", "height", height) | |||

| return false | |||

| } | |||
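
The peer filter at the top of `sendRequest` skips peers that are at capacity or shorter than the requested height. The sketch below isolates that filter. It uses a slice so the result is deterministic (the pool ranges over a map, whose order is not), passes the per-peer limit as a parameter because the value of `maxRequestsPerPeer` is not shown in this diff, and leaves out the send errors. `candidate` and `pickPeer` are hypothetical names.

```go
package main

import "fmt"

// candidate is a hypothetical, trimmed-down view of a peer.
type candidate struct {
	id      string
	height  int64
	pending int
}

// pickPeer returns the first candidate that is tall enough and has spare
// request capacity; ok is false when none qualifies.
func pickPeer(peers []candidate, height int64, maxPerPeer int) (id string, ok bool) {
	for _, p := range peers {
		if p.pending >= maxPerPeer || p.height < height {
			continue
		}
		return p.id, true
	}
	return "", false
}

func main() {
	peers := []candidate{
		{id: "P1", height: 50, pending: 2}, // at capacity
		{id: "P2", height: 8, pending: 0},  // too short for height 10
		{id: "P3", height: 30, pending: 1},
	}
	id, ok := pickPeer(peers, 10, 2)
	fmt.Println(id, ok) // prints "P3 true"
}
```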

| // AddBlock validates that the block comes from the peer it was expected from and stores it in the 'blocks' map. | |||

| func (pool *BlockPool) AddBlock(peerID p2p.ID, block *types.Block, blockSize int) error { | |||

| peer, ok := pool.peers[peerID] | |||

| if !ok { | |||

| pool.logger.Error("block from unknown peer", "height", block.Height, "peer", peerID) | |||

| return errBadDataFromPeer | |||

| } | |||

| if wantPeerID, ok := pool.blocks[block.Height]; ok && wantPeerID != peerID { | |||

| pool.logger.Error("block received from wrong peer", "height", block.Height, | |||

| "peer", peerID, "expected_peer", wantPeerID) | |||

| return errBadDataFromPeer | |||

| } | |||

| return peer.AddBlock(block, blockSize) | |||

| } | |||

| // BlockData stores the peer responsible to deliver a block and the actual block if delivered. | |||

| type BlockData struct { | |||

| block *types.Block | |||

| peer *BpPeer | |||

| } | |||

| // BlockAndPeerAtHeight retrieves the block and delivery peer at specified height. | |||

| // Returns errMissingBlock if a block was not found. | |||

| func (pool *BlockPool) BlockAndPeerAtHeight(height int64) (bData *BlockData, err error) { | |||

| peerID := pool.blocks[height] | |||

| peer := pool.peers[peerID] | |||

| if peer == nil { | |||

| return nil, errMissingBlock | |||

| } | |||

| block, err := peer.BlockAtHeight(height) | |||

| if err != nil { | |||

| return nil, err | |||

| } | |||

| return &BlockData{peer: peer, block: block}, nil | |||

| } | |||

| // FirstTwoBlocksAndPeers returns the blocks and the delivery peers at pool's height H and H+1. | |||

| func (pool *BlockPool) FirstTwoBlocksAndPeers() (first, second *BlockData, err error) { | |||

| first, err = pool.BlockAndPeerAtHeight(pool.Height) | |||

| second, err2 := pool.BlockAndPeerAtHeight(pool.Height + 1) | |||

| if err == nil { | |||

| err = err2 | |||

| } | |||

| return | |||

| } | |||

| // InvalidateFirstTwoBlocks removes the peers that sent us the first two blocks, blocks are removed by RemovePeer(). | |||

| func (pool *BlockPool) InvalidateFirstTwoBlocks(err error) { | |||

| first, err1 := pool.BlockAndPeerAtHeight(pool.Height) | |||

| second, err2 := pool.BlockAndPeerAtHeight(pool.Height + 1) | |||

| if err1 == nil { | |||

| pool.RemovePeer(first.peer.ID, err) | |||

| } | |||

| if err2 == nil { | |||

| pool.RemovePeer(second.peer.ID, err) | |||

| } | |||

| } | |||

| // ProcessedCurrentHeightBlock performs cleanup after a block is processed. It removes block at pool height and | |||

| // the peers that are now short. | |||

| func (pool *BlockPool) ProcessedCurrentHeightBlock() { | |||

| peerID, peerOk := pool.blocks[pool.Height] | |||

| if peerOk { | |||

| pool.peers[peerID].RemoveBlock(pool.Height) | |||

| } | |||

| delete(pool.blocks, pool.Height) | |||

| pool.logger.Debug("removed block at height", "height", pool.Height) | |||

| pool.Height++ | |||

| pool.removeShortPeers() | |||

| } | |||

| // RemovePeerAtCurrentHeights checks if a block at pool's height H exists and if not, it removes the | |||

| // delivery peer and returns. If a block at height H exists then the check and peer removal is done for H+1. | |||

| // This function is called when the FSM is not able to make progress for some time. | |||

| // This happens if either block H or block H+1 has not been delivered. | |||

| func (pool *BlockPool) RemovePeerAtCurrentHeights(err error) { | |||

| peerID := pool.blocks[pool.Height] | |||

| peer, ok := pool.peers[peerID] | |||

| if ok { | |||

| if _, blockErr := peer.BlockAtHeight(pool.Height); blockErr != nil { | |||

| pool.logger.Info("remove peer that hasn't sent block at pool.Height", | |||

| "peer", peerID, "height", pool.Height) | |||

| pool.RemovePeer(peerID, err) | |||

| return | |||

| } | |||

| } | |||

| peerID = pool.blocks[pool.Height+1] | |||

| peer, ok = pool.peers[peerID] | |||

| if ok { | |||

| if _, blockErr := peer.BlockAtHeight(pool.Height + 1); blockErr != nil { | |||

| pool.logger.Info("remove peer that hasn't sent block at pool.Height+1", | |||

| "peer", peerID, "height", pool.Height+1) | |||

| pool.RemovePeer(peerID, err) | |||

| return | |||

| } | |||

| } | |||

| } | |||

| // Cleanup performs pool and peer cleanup | |||

| func (pool *BlockPool) Cleanup() { | |||

| for id, peer := range pool.peers { | |||

| peer.Cleanup() | |||

| delete(pool.peers, id) | |||

| } | |||

| pool.plannedRequests = make(map[int64]struct{}) | |||

| pool.blocks = make(map[int64]p2p.ID) | |||

| pool.nextRequestHeight = 0 | |||

| pool.Height = 0 | |||

| pool.MaxPeerHeight = 0 | |||

| } | |||

| // NumPeers returns the number of peers in the pool | |||

| func (pool *BlockPool) NumPeers() int { | |||

| return len(pool.peers) | |||

| } | |||

| // NeedsBlocks returns true if more blocks are required. | |||

| func (pool *BlockPool) NeedsBlocks() bool { | |||

| return len(pool.blocks) < maxNumRequests | |||

| } | |||
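
`NeedsBlocks` caps the number of heights the pool tracks, whether a request is still pending or the block has arrived and is waiting to be processed. A minimal sketch of that cap follows; `maxTracked` mirrors the role of `maxNumRequests`, and `budget` is a hypothetical helper that is not part of the source.

```go
package main

import "fmt"

// maxTracked mirrors the role of maxNumRequests (64 in the reactor source).
const maxTracked = 64

// needsBlocks reports whether another height may be requested, given the
// number of heights already tracked (pending or received but unprocessed).
func needsBlocks(tracked int) bool {
	return tracked < maxTracked
}

// budget is a hypothetical helper: how many new requests fit under the cap.
func budget(tracked int) int {
	if tracked >= maxTracked {
		return 0
	}
	return maxTracked - tracked
}

func main() {
	for _, n := range []int{0, 63, 64} {
		fmt.Println(n, needsBlocks(n), budget(n))
	}
}
```

Because received but unconsumed blocks count against the cap, requests stop once the backlog is full and resume only as blocks are processed.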

+ 650

- 0

blockchain/v1/pool_test.go

| @ -0,0 +1,650 @@ | |||

| package v1 | |||

| import ( | |||

| "testing" | |||

| "time" | |||

| "github.com/stretchr/testify/assert" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| type testPeer struct { | |||

| id p2p.ID | |||

| height int64 | |||

| } | |||

| type testBcR struct { | |||

| logger log.Logger | |||

| } | |||

| type testValues struct { | |||

| numRequestsSent int | |||

| } | |||

| var testResults testValues | |||

| func resetPoolTestResults() { | |||

| testResults.numRequestsSent = 0 | |||

| } | |||

| func (testR *testBcR) sendPeerError(err error, peerID p2p.ID) { | |||

| } | |||

| func (testR *testBcR) sendStatusRequest() { | |||

| } | |||

| func (testR *testBcR) sendBlockRequest(peerID p2p.ID, height int64) error { | |||

| testResults.numRequestsSent++ | |||

| return nil | |||

| } | |||

| func (testR *testBcR) resetStateTimer(name string, timer **time.Timer, timeout time.Duration) { | |||

| } | |||

| func (testR *testBcR) switchToConsensus() { | |||

| } | |||

| func newTestBcR() *testBcR { | |||

| testBcR := &testBcR{logger: log.TestingLogger()} | |||

| return testBcR | |||

| } | |||

| type tPBlocks struct { | |||

| id p2p.ID | |||

| create bool | |||

| } | |||

| // Makes a block pool with specified current height, list of peers, block requests and block responses | |||

| func makeBlockPool(bcr *testBcR, height int64, peers []BpPeer, blocks map[int64]tPBlocks) *BlockPool { | |||

| bPool := NewBlockPool(height, bcr) | |||

| bPool.SetLogger(bcr.logger) | |||

| txs := []types.Tx{types.Tx("foo"), types.Tx("bar")} | |||

| var maxH int64 | |||

| for _, p := range peers { | |||

| if p.Height > maxH { | |||

| maxH = p.Height | |||

| } | |||

| bPool.peers[p.ID] = NewBpPeer(p.ID, p.Height, bcr.sendPeerError, nil) | |||

| bPool.peers[p.ID].SetLogger(bcr.logger) | |||

| } | |||

| bPool.MaxPeerHeight = maxH | |||

| for h, p := range blocks { | |||

| bPool.blocks[h] = p.id | |||

| bPool.peers[p.id].RequestSent(int64(h)) | |||

| if p.create { | |||

| // simulate that a block at height h has been received | |||

| _ = bPool.peers[p.id].AddBlock(types.MakeBlock(int64(h), txs, nil, nil), 100) | |||

| } | |||

| } | |||

| return bPool | |||

| } | |||

| func assertPeerSetsEquivalent(t *testing.T, set1 map[p2p.ID]*BpPeer, set2 map[p2p.ID]*BpPeer) { | |||

| assert.Equal(t, len(set1), len(set2)) | |||

| for peerID, peer1 := range set1 { | |||

| peer2 := set2[peerID] | |||

| assert.NotNil(t, peer2) | |||

| assert.Equal(t, peer1.NumPendingBlockRequests, peer2.NumPendingBlockRequests) | |||

| assert.Equal(t, peer1.Height, peer2.Height) | |||

| assert.Equal(t, len(peer1.blocks), len(peer2.blocks)) | |||

| for h, block1 := range peer1.blocks { | |||

| block2 := peer2.blocks[h] | |||

| // block1 and block2 could be nil if a request was made but no block was received | |||

| assert.Equal(t, block1, block2) | |||

| } | |||

| } | |||

| } | |||

| func assertBlockPoolEquivalent(t *testing.T, poolWanted, pool *BlockPool) { | |||

| assert.Equal(t, poolWanted.blocks, pool.blocks) | |||

| assertPeerSetsEquivalent(t, poolWanted.peers, pool.peers) | |||

| assert.Equal(t, poolWanted.MaxPeerHeight, pool.MaxPeerHeight) | |||

| assert.Equal(t, poolWanted.Height, pool.Height) | |||

| } | |||

| func TestBlockPoolUpdatePeer(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| args testPeer | |||

| poolWanted *BlockPool | |||

| errWanted error | |||

| }{ | |||

| { | |||

| name: "add a first short peer", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| args: testPeer{"P1", 50}, | |||

| errWanted: errPeerTooShort, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "add a first good peer", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| args: testPeer{"P1", 101}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 101}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "increase the height of P1 from 120 to 123", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: testPeer{"P1", 123}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 123}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "decrease the height of P1 from 120 to 110", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: testPeer{"P1", 110}, | |||

| errWanted: errPeerLowersItsHeight, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "decrease the height of P1 from 105 to 102 with blocks", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 105}}, | |||

| map[int64]tPBlocks{ | |||

| 100: {"P1", true}, 101: {"P1", true}, 102: {"P1", true}}), | |||

| args: testPeer{"P1", 102}, | |||

| errWanted: errPeerLowersItsHeight, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, | |||

| map[int64]tPBlocks{}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| pool := tt.pool | |||

| err := pool.UpdatePeer(tt.args.id, tt.args.height) | |||

| assert.Equal(t, tt.errWanted, err) | |||

| assert.Equal(t, tt.poolWanted.blocks, tt.pool.blocks) | |||

| assertPeerSetsEquivalent(t, tt.poolWanted.peers, tt.pool.peers) | |||

| assert.Equal(t, tt.poolWanted.MaxPeerHeight, tt.pool.MaxPeerHeight) | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolRemovePeer(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| type args struct { | |||

| peerID p2p.ID | |||

| err error | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| args args | |||

| poolWanted *BlockPool | |||

| }{ | |||

| { | |||

| name: "attempt to delete non-existing peer", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: args{"P99", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the only peer without blocks", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: args{"P1", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the shortest of two peers without blocks", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: args{"P1", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P2", Height: 120}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the tallest of two peers without blocks", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 120}}, map[int64]tPBlocks{}), | |||

| args: args{"P2", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 100}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the only peer with block requests sent and blocks received", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, | |||

| map[int64]tPBlocks{100: {"P1", true}, 101: {"P1", false}}), | |||

| args: args{"P1", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the shortest of two peers with block requests sent and blocks received", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 200}}, | |||

| map[int64]tPBlocks{100: {"P1", true}, 101: {"P1", false}}), | |||

| args: args{"P1", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P2", Height: 200}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "delete the tallest of two peers with block requests sent and blocks received", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 110}}, | |||

| map[int64]tPBlocks{100: {"P1", true}, 101: {"P1", false}}), | |||

| args: args{"P1", nil}, | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P2", Height: 110}}, map[int64]tPBlocks{}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| tt.pool.RemovePeer(tt.args.peerID, tt.args.err) | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolRemoveShortPeers(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| poolWanted *BlockPool | |||

| }{ | |||

| { | |||

| name: "no short peers", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 110}, {ID: "P3", Height: 120}}, map[int64]tPBlocks{}), | |||

| poolWanted: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 110}, {ID: "P3", Height: 120}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "one short peer", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 90}, {ID: "P3", Height: 120}}, map[int64]tPBlocks{}), | |||

| poolWanted: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P3", Height: 120}}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "all short peers", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 90}, {ID: "P2", Height: 91}, {ID: "P3", Height: 92}}, map[int64]tPBlocks{}), | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| pool := tt.pool | |||

| pool.removeShortPeers() | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolSendRequestBatch(t *testing.T) { | |||

| type testPeerResult struct { | |||

| id p2p.ID | |||

| numPendingBlockRequests int | |||

| } | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| maxRequestsPerPeer int | |||

| expRequests map[int64]bool | |||

| expPeerResults []testPeerResult | |||

| expnumPendingBlockRequests int | |||

| }{ | |||

| { | |||

| name: "one peer - send up to maxRequestsPerPeer block requests", | |||

| pool: makeBlockPool(testBcR, 10, []BpPeer{{ID: "P1", Height: 100}}, map[int64]tPBlocks{}), | |||

| maxRequestsPerPeer: 2, | |||

| expRequests: map[int64]bool{10: true, 11: true}, | |||

| expPeerResults: []testPeerResult{{id: "P1", numPendingBlockRequests: 2}}, | |||

| expnumPendingBlockRequests: 2, | |||

| }, | |||

| { | |||

| name: "n peers - send n*maxRequestsPerPeer block requests", | |||

| pool: makeBlockPool(testBcR, 10, []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, map[int64]tPBlocks{}), | |||

| maxRequestsPerPeer: 2, | |||

| expRequests: map[int64]bool{10: true, 11: true}, | |||

| expPeerResults: []testPeerResult{ | |||

| {id: "P1", numPendingBlockRequests: 2}, | |||

| {id: "P2", numPendingBlockRequests: 2}}, | |||

| expnumPendingBlockRequests: 4, | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| resetPoolTestResults() | |||

| var pool = tt.pool | |||

| maxRequestsPerPeer = tt.maxRequestsPerPeer | |||

| pool.MakeNextRequests(10) | |||

| assert.Equal(t, testResults.numRequestsSent, maxRequestsPerPeer*len(pool.peers)) | |||

| for _, tPeer := range tt.expPeerResults { | |||

| var peer = pool.peers[tPeer.id] | |||

| assert.NotNil(t, peer) | |||

| assert.Equal(t, tPeer.numPendingBlockRequests, peer.NumPendingBlockRequests) | |||

| } | |||

| assert.Equal(t, tt.expnumPendingBlockRequests, testResults.numRequestsSent) | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolAddBlock(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| txs := []types.Tx{types.Tx("foo"), types.Tx("bar")} | |||

| type args struct { | |||

| peerID p2p.ID | |||

| block *types.Block | |||

| blockSize int | |||

| } | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| args args | |||

| poolWanted *BlockPool | |||

| errWanted error | |||

| }{ | |||

| {name: "block from unknown peer", | |||

| pool: makeBlockPool(testBcR, 10, []BpPeer{{ID: "P1", Height: 100}}, map[int64]tPBlocks{}), | |||

| args: args{ | |||

| peerID: "P2", | |||

| block: types.MakeBlock(int64(10), txs, nil, nil), | |||

| blockSize: 100, | |||

| }, | |||

| poolWanted: makeBlockPool(testBcR, 10, []BpPeer{{ID: "P1", Height: 100}}, map[int64]tPBlocks{}), | |||

| errWanted: errBadDataFromPeer, | |||

| }, | |||

| {name: "unexpected block 11 from known peer - waiting for 10", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", false}}), | |||

| args: args{ | |||

| peerID: "P1", | |||

| block: types.MakeBlock(int64(11), txs, nil, nil), | |||

| blockSize: 100, | |||

| }, | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", false}}), | |||

| errWanted: errMissingBlock, | |||

| }, | |||

| {name: "unexpected block 10 from known peer - already have 10", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", true}, 11: {"P1", false}}), | |||

| args: args{ | |||

| peerID: "P1", | |||

| block: types.MakeBlock(int64(10), txs, nil, nil), | |||

| blockSize: 100, | |||

| }, | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", true}, 11: {"P1", false}}), | |||

| errWanted: errDuplicateBlock, | |||

| }, | |||

| {name: "unexpected block 10 from known peer P2 - expected 10 to come from P1", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", false}}), | |||

| args: args{ | |||

| peerID: "P2", | |||

| block: types.MakeBlock(int64(10), txs, nil, nil), | |||

| blockSize: 100, | |||

| }, | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", false}}), | |||

| errWanted: errBadDataFromPeer, | |||

| }, | |||

| {name: "expected block from known peer", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", false}}), | |||

| args: args{ | |||

| peerID: "P1", | |||

| block: types.MakeBlock(int64(10), txs, nil, nil), | |||

| blockSize: 100, | |||

| }, | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", true}}), | |||

| errWanted: nil, | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| err := tt.pool.AddBlock(tt.args.peerID, tt.args.block, tt.args.blockSize) | |||

| assert.Equal(t, tt.errWanted, err) | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolFirstTwoBlocksAndPeers(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| firstWanted int64 | |||

| secondWanted int64 | |||

| errWanted error | |||

| }{ | |||

| { | |||

| name: "both blocks missing", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{15: {"P1", true}, 16: {"P2", true}}), | |||

| errWanted: errMissingBlock, | |||

| }, | |||

| { | |||

| name: "second block missing", | |||

| pool: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{15: {"P1", true}, 18: {"P2", true}}), | |||

| firstWanted: 15, | |||

| errWanted: errMissingBlock, | |||

| }, | |||

| { | |||

| name: "first block missing", | |||

| pool: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{16: {"P2", true}, 18: {"P2", true}}), | |||

| secondWanted: 16, | |||

| errWanted: errMissingBlock, | |||

| }, | |||

| { | |||

| name: "both blocks present", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", true}, 11: {"P2", true}}), | |||

| firstWanted: 10, | |||

| secondWanted: 11, | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| pool := tt.pool | |||

| gotFirst, gotSecond, err := pool.FirstTwoBlocksAndPeers() | |||

| assert.Equal(t, tt.errWanted, err) | |||

| if tt.firstWanted != 0 { | |||

| peer := pool.blocks[tt.firstWanted] | |||

| block := pool.peers[peer].blocks[tt.firstWanted] | |||

| assert.Equal(t, block, gotFirst.block, | |||

| "BlockPool.FirstTwoBlocksAndPeers() gotFirst = %v, want %v", | |||

| gotFirst.block.Height, tt.firstWanted) | |||

| } | |||

| if tt.secondWanted != 0 { | |||

| peer := pool.blocks[tt.secondWanted] | |||

| block := pool.peers[peer].blocks[tt.secondWanted] | |||

| assert.Equal(t, block, gotSecond.block, | |||

| "BlockPool.FirstTwoBlocksAndPeers() gotSecond = %v, want %v", | |||

| gotSecond.block.Height, tt.secondWanted) | |||

| } | |||

| }) | |||

| } | |||

| } | |||

| func TestBlockPoolInvalidateFirstTwoBlocks(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| poolWanted *BlockPool | |||

| }{ | |||

| { | |||

| name: "both blocks missing", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{15: {"P1", true}, 16: {"P2", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{15: {"P1", true}, 16: {"P2", true}}), | |||

| }, | |||

| { | |||

| name: "second block missing", | |||

| pool: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{15: {"P1", true}, 18: {"P2", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{18: {"P2", true}}), | |||

| }, | |||

| { | |||

| name: "first block missing", | |||

| pool: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{18: {"P1", true}, 16: {"P2", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 15, | |||

| []BpPeer{{ID: "P1", Height: 100}}, | |||

| map[int64]tPBlocks{18: {"P1", true}}), | |||

| }, | |||

| { | |||

| name: "both blocks present", | |||

| pool: makeBlockPool(testBcR, 10, | |||

| []BpPeer{{ID: "P1", Height: 100}, {ID: "P2", Height: 100}}, | |||

| map[int64]tPBlocks{10: {"P1", true}, 11: {"P2", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 10, | |||

| []BpPeer{}, | |||

| map[int64]tPBlocks{}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| tt.pool.InvalidateFirstTwoBlocks(errNoPeerResponse) | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

| func TestProcessedCurrentHeightBlock(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| poolWanted *BlockPool | |||

| }{ | |||

| { | |||

| name: "one peer", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, | |||

| map[int64]tPBlocks{100: {"P1", true}, 101: {"P1", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 101, []BpPeer{{ID: "P1", Height: 120}}, | |||

| map[int64]tPBlocks{101: {"P1", true}}), | |||

| }, | |||

| { | |||

| name: "multiple peers", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 100: {"P1", true}, 104: {"P1", true}, 105: {"P1", false}, | |||

| 101: {"P2", true}, 103: {"P2", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 101, | |||

| []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 104: {"P1", true}, 105: {"P1", false}, | |||

| 101: {"P2", true}, 103: {"P2", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| tt.pool.ProcessedCurrentHeightBlock() | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

| func TestRemovePeerAtCurrentHeight(t *testing.T) { | |||

| testBcR := newTestBcR() | |||

| tests := []struct { | |||

| name string | |||

| pool *BlockPool | |||

| poolWanted *BlockPool | |||

| }{ | |||

| { | |||

| name: "one peer, remove peer for block at H", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, | |||

| map[int64]tPBlocks{100: {"P1", false}, 101: {"P1", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "one peer, remove peer for block at H+1", | |||

| pool: makeBlockPool(testBcR, 100, []BpPeer{{ID: "P1", Height: 120}}, | |||

| map[int64]tPBlocks{100: {"P1", true}, 101: {"P1", false}}), | |||

| poolWanted: makeBlockPool(testBcR, 100, []BpPeer{}, map[int64]tPBlocks{}), | |||

| }, | |||

| { | |||

| name: "multiple peers, remove peer for block at H", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 100: {"P1", false}, 104: {"P1", true}, 105: {"P1", false}, | |||

| 101: {"P2", true}, 103: {"P2", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P2", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 101: {"P2", true}, 103: {"P2", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| }, | |||

| { | |||

| name: "multiple peers, remove peer for block at H+1", | |||

| pool: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 120}, {ID: "P2", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 100: {"P1", true}, 104: {"P1", true}, 105: {"P1", false}, | |||

| 101: {"P2", false}, 103: {"P2", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| poolWanted: makeBlockPool(testBcR, 100, | |||

| []BpPeer{{ID: "P1", Height: 120}, {ID: "P3", Height: 130}}, | |||

| map[int64]tPBlocks{ | |||

| 100: {"P1", true}, 104: {"P1", true}, 105: {"P1", false}, | |||

| 102: {"P3", true}, 106: {"P3", true}}), | |||

| }, | |||

| } | |||

| for _, tt := range tests { | |||

| t.Run(tt.name, func(t *testing.T) { | |||

| tt.pool.RemovePeerAtCurrentHeights(errNoPeerResponse) | |||

| assertBlockPoolEquivalent(t, tt.poolWanted, tt.pool) | |||

| }) | |||

| } | |||

| } | |||

+ 620

- 0

blockchain/v1/reactor.go

| @ -0,0 +1,620 @@ | |||

| package v1 | |||

| import ( | |||

| "errors" | |||

| "fmt" | |||

| "reflect" | |||

| "time" | |||

| amino "github.com/tendermint/go-amino" | |||

| "github.com/tendermint/tendermint/behaviour" | |||

| "github.com/tendermint/tendermint/libs/log" | |||

| "github.com/tendermint/tendermint/p2p" | |||

| sm "github.com/tendermint/tendermint/state" | |||

| "github.com/tendermint/tendermint/store" | |||

| "github.com/tendermint/tendermint/types" | |||

| ) | |||

| const ( | |||

| // BlockchainChannel is a channel for blocks and status updates (`BlockStore` height) | |||

| BlockchainChannel = byte(0x40) | |||

| trySyncIntervalMS = 10 | |||

| trySendIntervalMS = 10 | |||

| // ask for best height every 10s | |||

| statusUpdateIntervalSeconds = 10 | |||

| // NOTE: keep up to date with bcBlockResponseMessage | |||

| bcBlockResponseMessagePrefixSize = 4 | |||

| bcBlockResponseMessageFieldKeySize = 1 | |||

| maxMsgSize = types.MaxBlockSizeBytes + | |||

| bcBlockResponseMessagePrefixSize + | |||

| bcBlockResponseMessageFieldKeySize | |||

| ) | |||
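
The `maxMsgSize` constant is a plain sum of the maximum block size and the two framing overheads. As a worked instance, assuming purely for illustration a maximum block size of 100 MiB (the actual value of `types.MaxBlockSizeBytes` is not shown in this diff):

```go
package main

import "fmt"

const (
	prefixSize   = 4 // mirrors bcBlockResponseMessagePrefixSize
	fieldKeySize = 1 // mirrors bcBlockResponseMessageFieldKeySize
)

// maxMsgSize returns the largest block response message for a given block
// size limit: the block itself plus the fixed framing overhead.
func maxMsgSize(maxBlockSizeBytes int) int {
	return maxBlockSizeBytes + prefixSize + fieldKeySize
}

func main() {
	// 100 MiB is an assumed, illustrative limit.
	fmt.Println(maxMsgSize(100 * 1024 * 1024)) // prints 104857605
}
```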

| var ( | |||

| // Maximum number of requests that can be pending per peer, i.e. for which requests have been sent but blocks | |||

| // have not been received. | |||

| maxRequestsPerPeer = 20 | |||

| // Maximum number of block requests for the reactor, pending or for which blocks have been received. | |||

| maxNumRequests = 64 | |||

| ) | |||

| type consensusReactor interface { | |||

| // for when we switch from blockchain reactor and fast sync to | |||

| // the consensus machine | |||

| SwitchToConsensus(sm.State, int) | |||

| } | |||

| // BlockchainReactor handles long-term catchup syncing. | |||

| type BlockchainReactor struct { | |||

| p2p.BaseReactor | |||

| initialState sm.State // immutable | |||

| state sm.State | |||

| blockExec *sm.BlockExecutor | |||

| store *store.BlockStore | |||

| fastSync bool | |||

| fsm *BcReactorFSM | |||

| blocksSynced int | |||

| // Receive goroutine forwards messages to this channel to be processed in the context of the poolRoutine. | |||

| messagesForFSMCh chan bcReactorMessage | |||

| // Switch goroutine may send RemovePeer to the blockchain reactor. This is an error message that is relayed | |||

| // to this channel to be processed in the context of the poolRoutine. | |||

| errorsForFSMCh chan bcReactorMessage | |||

| // This channel is used by the FSM and indirectly the block pool to report errors to the blockchain reactor and | |||

| // the switch. | |||

| eventsFromFSMCh chan bcFsmMessage | |||

| swReporter *behaviour.SwitchReporter | |||

| } | |||
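
The struct comments describe a design in which several goroutines push into buffered channels and a single routine consumes them. The `poolRoutine` itself is not part of this excerpt, so the following is only a sketch of that general pattern under assumed names (`message`, `drain`); it handles whatever is queued on two channels from one goroutine and returns.

```go
package main

import "fmt"

// message is a hypothetical stand-in for bcReactorMessage.
type message struct {
	event  string
	peerID string
}

// drain handles everything currently queued on both channels from a single
// goroutine and returns how many messages and errors it processed.
func drain(messages, errs <-chan message) (nMsgs, nErrs int) {
	for {
		select {
		case <-messages:
			nMsgs++ // a block or status message would be handed to the FSM here
		case <-errs:
			nErrs++ // a peer-removal error would be handed to the FSM here
		default:
			return // nothing queued on either channel
		}
	}
}

func main() {
	const capacity = 1000 // same buffer size as in NewBlockchainReactor
	messages := make(chan message, capacity)
	errs := make(chan message, capacity)

	messages <- message{"statusResponse", "P1"}
	messages <- message{"blockResponse", "P1"}
	errs <- message{"peerRemove", "P2"}

	nMsgs, nErrs := drain(messages, errs)
	fmt.Println(nMsgs, nErrs) // prints "2 1"
}
```

Keeping errors on their own channel means a burst of block messages cannot delay a peer removal behind a full queue, which matches the motivation given in the commit message.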

| // NewBlockchainReactor returns new reactor instance. | |||

| func NewBlockchainReactor(state sm.State, blockExec *sm.BlockExecutor, store *store.BlockStore, | |||

| fastSync bool) *BlockchainReactor { | |||

| if state.LastBlockHeight != store.Height() { | |||

| panic(fmt.Sprintf("state (%v) and store (%v) height mismatch", state.LastBlockHeight, | |||

| store.Height())) | |||

| } | |||

| const capacity = 1000 | |||

| eventsFromFSMCh := make(chan bcFsmMessage, capacity) | |||

| messagesForFSMCh := make(chan bcReactorMessage, capacity) | |||

| errorsForFSMCh := make(chan bcReactorMessage, capacity) | |||

| startHeight := store.Height() + 1 | |||

| bcR := &BlockchainReactor{ | |||

| initialState: state, | |||

| state: state, | |||

| blockExec: blockExec, | |||

| fastSync: fastSync, | |||

| store: store, | |||

| messagesForFSMCh: messagesForFSMCh, | |||

| eventsFromFSMCh: eventsFromFSMCh, | |||

| errorsForFSMCh: errorsForFSMCh, | |||

| } | |||

| fsm := NewFSM(startHeight, bcR) | |||

| bcR.fsm = fsm | |||

| bcR.BaseReactor = *p2p.NewBaseReactor("BlockchainReactor", bcR) | |||

| //bcR.swReporter = behaviour.NewSwitcReporter(bcR.BaseReactor.Switch) | |||

| return bcR | |||

| } | |||

| // bcReactorMessage is used by the reactor to send messages to the FSM. | |||

| type bcReactorMessage struct { | |||

| event bReactorEvent | |||

| data bReactorEventData | |||

| } | |||

| type bFsmEvent uint | |||

| const ( | |||

| // message type events | |||

| peerErrorEv = iota + 1 | |||

| syncFinishedEv | |||

| ) | |||
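
Starting the enumeration at `iota + 1` keeps the zero value free, so an uninitialised event never matches a real one. A minimal sketch follows; the `event` type and its `String` method are hypothetical, and unlike the source this sketch gives the constants an explicit type.

```go
package main

import "fmt"

// event is a hypothetical stand-in for bFsmEvent.
type event uint

const (
	peerError    event = iota + 1 // 1
	syncFinished                  // 2
)

// String names the known events; the zero value falls through to "unknown".
func (e event) String() string {
	switch e {
	case peerError:
		return "peerError"
	case syncFinished:
		return "syncFinished"
	default:
		return "unknown"
	}
}

func main() {
	var zero event
	fmt.Println(zero, peerError, syncFinished) // prints "unknown peerError syncFinished"
}
```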

| type bFsmEventData struct { | |||

| peerID p2p.ID | |||

| err error | |||

| } | |||

| // bcFsmMessage is used by the FSM to send messages to the reactor | |||

| type bcFsmMessage struct { | |||

| event bFsmEvent | |||

| data bFsmEventData | |||

| } | |||

| // SetLogger implements cmn.Service by setting the logger on reactor and pool. | |||

| func (bcR *BlockchainReactor) SetLogger(l log.Logger) { | |||

| bcR.BaseService.Logger = l | |||

| bcR.fsm.SetLogger(l) | |||

| } | |||

| // OnStart implements cmn.Service. | |||

| func (bcR *BlockchainReactor) OnStart() error { | |||

| bcR.swReporter = behaviour.NewSwitcReporter(bcR.BaseReactor.Switch) | |||

| if bcR.fastSync { | |||

| go bcR.poolRoutine() | |||

| } | |||

| return nil | |||

| } | |||

| // OnStop implements cmn.Service. | |||

| func (bcR *BlockchainReactor) OnStop() { | |||

| _ = bcR.Stop() | |||

| } | |||

| // GetChannels implements Reactor | |||

| func (bcR *BlockchainReactor) GetChannels() []*p2p.ChannelDescriptor { | |||

| return []*p2p.ChannelDescriptor{ | |||

| { | |||

| ID: BlockchainChannel, | |||

| Priority: 10, | |||

| SendQueueCapacity: 2000, | |||

| RecvBufferCapacity: 50 * 4096, | |||

| RecvMessageCapacity: maxMsgSize, | |||

| }, | |||

| } | |||

| } | |||

| // AddPeer implements Reactor by sending our state to peer. | |||

| func (bcR *BlockchainReactor) AddPeer(peer p2p.Peer) { | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcStatusResponseMessage{bcR.store.Height()}) | |||

| if !peer.Send(BlockchainChannel, msgBytes) { | |||

| // doing nothing, will try later in `poolRoutine` | |||

| } | |||

| // peer is added to the pool once we receive the first | |||

| // bcStatusResponseMessage from the peer and call pool.updatePeer() | |||

| } | |||

| // sendBlockToPeer loads a block and sends it to the requesting peer. | |||

| // If the block doesn't exist a bcNoBlockResponseMessage is sent. | |||

| // If all nodes are honest, no node should request a block that doesn't exist. | |||

| func (bcR *BlockchainReactor) sendBlockToPeer(msg *bcBlockRequestMessage, | |||

| src p2p.Peer) (queued bool) { | |||

| block := bcR.store.LoadBlock(msg.Height) | |||

| if block != nil { | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcBlockResponseMessage{Block: block}) | |||

| return src.TrySend(BlockchainChannel, msgBytes) | |||

| } | |||

| bcR.Logger.Info("peer asking for a block we don't have", "src", src, "height", msg.Height) | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcNoBlockResponseMessage{Height: msg.Height}) | |||

| return src.TrySend(BlockchainChannel, msgBytes) | |||

| } | |||

| func (bcR *BlockchainReactor) sendStatusResponseToPeer(msg *bcStatusRequestMessage, src p2p.Peer) (queued bool) { | |||

| msgBytes := cdc.MustMarshalBinaryBare(&bcStatusResponseMessage{bcR.store.Height()}) | |||

| return src.TrySend(BlockchainChannel, msgBytes) | |||

| } | |||

| // RemovePeer implements Reactor by removing peer from the pool. | |||

| func (bcR *BlockchainReactor) RemovePeer(peer p2p.Peer, reason interface{}) { | |||

| msgData := bcReactorMessage{ | |||

| event: peerRemoveEv, | |||

| data: bReactorEventData{ | |||

| peerID: peer.ID(), | |||

| err: errSwitchRemovesPeer, | |||

| }, | |||

| } | |||

| bcR.errorsForFSMCh <- msgData | |||

| } | |||

| // Receive implements Reactor by handling 4 types of messages (see below). | |||

| func (bcR *BlockchainReactor) Receive(chID byte, src p2p.Peer, msgBytes []byte) { | |||

| msg, err := decodeMsg(msgBytes) | |||

| if err != nil { | |||

| bcR.Logger.Error("error decoding message", | |||

| "src", src, "chId", chID, "msg", msg, "err", err, "bytes", msgBytes) | |||

| _ = bcR.swReporter.Report(behaviour.BadMessage(src.ID(), err.Error())) | |||

| return | |||

| } | |||

| if err = msg.ValidateBasic(); err != nil { | |||

| bcR.Logger.Error("peer sent us invalid msg", "peer", src, "msg", msg, "err", err) | |||

| _ = bcR.swReporter.Report(behaviour.BadMessage(src.ID(), err.Error())) | |||

| return | |||

| } | |||

| bcR.Logger.Debug("Receive", "src", src, "chID", chID, "msg", msg) | |||

| switch msg := msg.(type) { | |||

| case *bcBlockRequestMessage: | |||

| if queued := bcR.sendBlockToPeer(msg, src); !queued { | |||

| // Unfortunately not queued since the queue is full. | |||

| bcR.Logger.Error("Could not send block message to peer", "src", src, "height", msg.Height) | |||

| } | |||

| case *bcStatusRequestMessage: | |||

| // Send peer our state. | |||

| if queued := bcR.sendStatusResponseToPeer(msg, src); !queued { | |||

| // Unfortunately not queued since the queue is full. | |||

| bcR.Logger.Error("Could not send status message to peer", "src", src) | |||

| } | |||

| case *bcBlockResponseMessage: | |||

| msgForFSM := bcReactorMessage{ | |||

| event: blockResponseEv, | |||

| data: bReactorEventData{ | |||

| peerID: src.ID(), | |||

| height: msg.Block.Height, | |||

| block: msg.Block, | |||

| length: len(msgBytes), | |||

| }, | |||

| } | |||

| bcR.Logger.Info("Received", "src", src, "height", msg.Block.Height) | |||

| bcR.messagesForFSMCh <- msgForFSM | |||

| case *bcStatusResponseMessage: | |||

| // Got a peer status. Unverified. | |||

| msgForFSM := bcReactorMessage{ | |||

| event: statusResponseEv, | |||

| data: bReactorEventData{ | |||

| peerID: src.ID(), | |||

| height: msg.Height, | |||

| length: len(msgBytes), | |||

| }, | |||

| } | |||

| bcR.messagesForFSMCh <- msgForFSM | |||

| default: | |||

| bcR.Logger.Error(fmt.Sprintf("unknown message type %v", reflect.TypeOf(msg))) | |||

| } | |||

| } | |||

| // processBlocksRoutine processes blocks until signaled to stop over the stopProcessing channel. | |||

| func (bcR *BlockchainReactor) processBlocksRoutine(stopProcessing chan struct{}) { | |||

| processReceivedBlockTicker := time.NewTicker(trySyncIntervalMS * time.Millisecond) | |||

| doProcessBlockCh := make(chan struct{}, 1) | |||

| lastHundred := time.Now() | |||

| lastRate := 0.0 | |||

| ForLoop: | |||

| for { | |||

| select { | |||

| case <-stopProcessing: | |||

| bcR.Logger.Info("finishing block execution") | |||

| break ForLoop | |||

| case <-processReceivedBlockTicker.C: // try to execute blocks | |||

| select { | |||

| case doProcessBlockCh <- struct{}{}: | |||

| default: | |||

| } | |||

| case <-doProcessBlockCh: | |||

| for { | |||

| err := bcR.processBlock() | |||

| if err == errMissingBlock { | |||

| break | |||

| } | |||

| // Notify FSM of block processing result. | |||

| msgForFSM := bcReactorMessage{ | |||

| event: processedBlockEv, | |||

| data: bReactorEventData{ | |||

| err: err, | |||

| }, | |||

| } | |||

| _ = bcR.fsm.Handle(&msgForFSM) | |||

| if err != nil { | |||

| break | |||

| } | |||

| bcR.blocksSynced++ | |||

| if bcR.blocksSynced%100 == 0 { | |||

| lastRate = 0.9*lastRate + 0.1*(100/time.Since(lastHundred).Seconds()) | |||

| height, maxPeerHeight := bcR.fsm.Status() | |||

| bcR.Logger.Info("Fast Sync Rate", "height", height, | |||

| "max_peer_height", maxPeerHeight, "blocks/s", lastRate) | |||

| lastHundred = time.Now() | |||

| } | |||

| } | |||

| } | |||

| } | |||

| } | |||
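Two small patterns in processBlocksRoutine may be easier to see in isolation: the ticker case does a non-blocking send on a channel of capacity 1, so at most one "process blocks" wake-up is ever pending, and the sync rate is an exponentially weighted average updated every 100 blocks. The following is a minimal standalone sketch, not code from this commit; the helper names `trigger` and `smoothedRate` are hypothetical and exist only for illustration.

```go
package main

import "fmt"

// trigger is a hypothetical helper (not part of the commit). It performs the
// same non-blocking send as the ticker case: with a channel of capacity 1,
// at most one wake-up is pending and any extra tick is simply dropped.
func trigger(ch chan struct{}) bool {
	select {
	case ch <- struct{}{}:
		return true
	default:
		return false
	}
}

// smoothedRate is a hypothetical helper mirroring the rate update in the loop:
// 90% of the previous estimate plus 10% of the rate over the latest batch.
func smoothedRate(prev float64, blocks int, elapsedSeconds float64) float64 {
	return 0.9*prev + 0.1*(float64(blocks)/elapsedSeconds)
}

func main() {
	ch := make(chan struct{}, 1)
	fmt.Println(trigger(ch)) // true: the slot was free
	fmt.Println(trigger(ch)) // false: a wake-up is already pending
	<-ch                     // the consumer takes the pending wake-up
	fmt.Println(trigger(ch)) // true: the slot is free again

	rate := smoothedRate(0, 100, 2)   // first batch: 100 blocks in 2 seconds
	rate = smoothedRate(rate, 100, 1) // second batch: 100 blocks in 1 second
	fmt.Printf("%.2f blocks/s\n", rate)
}
```

Because the estimate starts at 0.0 and each batch contributes only 10%, the logged rate approaches the real throughput gradually over the first several hundred blocks rather than reflecting it immediately.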

| // poolRoutine receives and handles messages from the Receive() routine and from the FSM. | |||

| func (bcR *BlockchainReactor) poolRoutine() { | |||

| bcR.fsm.Start() | |||

| sendBlockRequestTicker := time.NewTicker(trySendIntervalMS * time.Millisecond) | |||